On Graph Algorithms and ML. Q&A with Amy Hodler and Mark Needham

Q1. You just released a free Ebook called Graph Algorithms: Practical Examples in Apache Spark and Neo4j. Who are the target readers for this book?

AMY: Mark and I wrote this as a practical guide to help developers and data scientists get started integrating graph analytics into their workflows and applications. In many cases, readers have some experience with either Apache Spark or Neo4j; however, the book is also helpful to those who need to understand general graph concepts.

Q2. In a nutshell, how do graph analytics differ from conventional statistical analysis?

AMY: Real-world networks tend to form highly dense groups with structure and “lumpy” data. We see this clustering of relationships in everything from IT and social networks to economic and transportation systems.

Conventional statistical approaches don’t fully utilize the topology of the data itself and often “average out” uneven distributions. This does not work well for investigating relationships, inferring group dynamics, or forecasting behavior.

Graph algorithms use mathematical calculations that are specifically built to operate on relationships. They are uniquely suited to understanding structures and revealing patterns in datasets that are highly connected.
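To make the “averaging out” point concrete, here is a minimal sketch in plain Python (no graph library assumed) on a small, made-up hub-and-spoke network. The edge list is hypothetical; the point is that a single summary number hides the one node that most relationships run through.

```python
from collections import Counter
from statistics import mean, median

# Hypothetical undirected edge list: "hub" touches everyone,
# while the other nodes have only a few links among themselves.
edges = [
    ("hub", "a"), ("hub", "b"), ("hub", "c"), ("hub", "d"),
    ("hub", "e"), ("hub", "f"), ("a", "b"), ("c", "d"),
]

def degrees(edge_list):
    """Count how many relationships each node participates in."""
    deg = Counter()
    for u, v in edge_list:
        deg[u] += 1
        deg[v] += 1
    return deg

deg = degrees(edges)
print("mean degree:", round(mean(deg.values()), 2))
print("median degree:", median(deg.values()))
print("max degree:", max(deg.values()), "held by", max(deg, key=deg.get))
```

The mean degree comes out at about 2.29 and the median at 2, neither of which reveals that one node holds 6 of the 16 relationship endpoints.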

Q3. When do you recommend using graph algorithms, and when not?

MARK: In general, highly organized data that follows a regular schema and has relatively few relationships between its data elements does not warrant a graph-specific platform. Likewise, questions that are flat (not nested) and involve very few connections don’t require graph algorithms. For example, regular sales reporting, often based on simple statistical results such as sums, averages, and ratios, can easily be accomplished with traditional methods that don’t require relationship analysis.

Conversely, graph algorithms serve us well when we need to understand structures and relationships to do things like forecast behavior, prescribe actions for dynamic groups, or find predictive components and patterns in our data. These elements are essential to answering questions about the pathways that things (e.g., resources, information, data) move through, how they flow, who influences that flow, and how groups interact with one another.
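The contrast between a flat question and a pathway question can be sketched in a few lines of Python. The data is hypothetical: a sales total is a single aggregation over independent rows, whereas asking how information gets from one person to another needs a traversal. Breadth-first search is used here as a simple stand-in for the path-finding algorithms the book covers.

```python
from collections import deque

# Flat question: total sales is one pass over independent rows.
sales = [120.0, 75.5, 310.25]
total_sales = sum(sales)

# Pathway question: through whom does information pass on its way from
# "alice" to "dave"? Hypothetical directed "forwards to" relationships.
forwards_to = {
    "alice": ["bob", "carol"],
    "bob": ["dave"],
    "carol": ["erin"],
    "erin": ["dave"],
    "dave": [],
}

def shortest_path(graph, start, goal):
    """Breadth-first search; returns the fewest-hop path, or None."""
    previous = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            # Walk the predecessor links back to the start.
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for nxt in graph.get(node, []):
            if nxt not in previous:
                previous[nxt] = node
                queue.append(nxt)
    return None

print(total_sales)
print(shortest_path(forwards_to, "alice", "dave"))
```

The sum never needs to know how rows relate to each other; the path query is meaningless without those relationships.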

Q4. Can you give us a simple example to illustrate how graph algorithms deliver value?

AMY: Let’s say we’re trying to combat fraud in online orders. We likely already have profile information or behavioral indicators that would flag fraudulent behavior. However, it can be difficult to differentiate between behaviors that indicate a minor offense, unusual activity, and a fraud ring. This can lead us into a lose-lose choice: Chase all suspicious orders — which is costly and slows business — or let most suspicious activity go by. Moreover, as criminal activity evolves, we could be blind to new patterns that weren’t previously modeled.

Graph algorithms, such as Louvain Modularity, can be used for more advanced community detection to find groups interacting at different levels. For example, in a fraud scenario, we may want to correlate tightly knit groups of accounts with a certain threshold of returned products. Or perhaps we want to use the PageRank algorithm to identify which accounts in each group have the most overall incoming transactions, including indirect paths.
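Louvain works by repeatedly moving nodes between communities to increase modularity, a score of how much denser the links inside communities are than would be expected by chance. The full algorithm is too long to show here, so the sketch below (plain Python, hypothetical account graph) only computes the modularity of a given partition, which is the quantity Louvain tries to maximize. Libraries such as NetworkX (`louvain_communities`) provide complete implementations.

```python
from collections import defaultdict

# Hypothetical undirected "shared device/address" links between accounts:
# two tight triangles joined by a single bridging edge (c-d).
edges = [
    ("a", "b"), ("b", "c"), ("a", "c"),
    ("d", "e"), ("e", "f"), ("d", "f"),
    ("c", "d"),
]

def modularity(edge_list, community_of):
    """Q = sum over communities c of L_c/m - (d_c/(2m))**2, where m is the
    number of edges, L_c the edges inside c, and d_c the total degree of
    the nodes in c."""
    m = len(edge_list)
    internal = defaultdict(int)
    degree_sum = defaultdict(int)
    for u, v in edge_list:
        degree_sum[community_of[u]] += 1
        degree_sum[community_of[v]] += 1
        if community_of[u] == community_of[v]:
            internal[community_of[u]] += 1
    return sum(internal[c] / m - (degree_sum[c] / (2 * m)) ** 2
               for c in degree_sum)

two_rings = {"a": 0, "b": 0, "c": 0, "d": 1, "e": 1, "f": 1}
one_blob = {node: 0 for node in two_rings}

print(round(modularity(edges, two_rings), 3))  # → 0.357
print(round(modularity(edges, one_blob), 3))   # → 0.0
```

Splitting the accounts into the two triangles scores about 0.357, while lumping everyone into one group scores 0, so a modularity-maximizing method would prefer the two tightly knit groups.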

To illustrate these algorithms (and to the delight of Game of Thrones fans), below is a screenshot using Louvain and PageRank on season two of Game of Thrones. It finds community groups and the most influential characters using our experimental tool, the Graph Algorithms Playground. Notice how Jon is influential in a weakly connected community but not overall, and that the Daenerys group is isolated.
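PageRank scores a node highly when it receives links from other highly ranked nodes, which is why it captures indirect as well as direct influence. Below is a minimal power-iteration sketch in plain Python. The “mentions” graph is hypothetical and is not the data behind the screenshot; the damping factor of 0.85 is the commonly used default, assumed here.

```python
def pagerank(graph, damping=0.85, iterations=200, tol=1e-12):
    """Power-iteration PageRank over a dict of node -> list of out-neighbors.

    Nodes with no out-links ("dangling" nodes) share their rank evenly
    with every node, so the scores always sum to 1.
    """
    nodes = set(graph) | {v for outs in graph.values() for v in outs}
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        dangling = sum(rank[u] for u in nodes if not graph.get(u))
        new_rank = {node: (1 - damping) / n + damping * dangling / n
                    for node in nodes}
        for u, outs in graph.items():
            for v in outs:
                new_rank[v] += damping * rank[u] / len(outs)
        delta = sum(abs(new_rank[x] - rank[x]) for x in nodes)
        rank = new_rank
        if delta < tol:
            break
    return rank

# Hypothetical directed "mentions" graph between characters.
mentions = {
    "Arya": ["Jon"],
    "Sansa": ["Jon", "Tyrion"],
    "Bran": ["Jon"],
    "Jon": ["Tyrion"],
    "Cersei": ["Tyrion"],
    "Tyrion": ["Cersei"],
}

scores = pagerank(mentions)
for name, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{name:7s} {score:.3f}")
```

In this toy graph Jon receives the most direct links, yet Tyrion and Cersei rank higher because they sit in a loop that keeps recirculating rank: a small-scale version of being locally but not globally influential.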

Q5. Can graph algorithms improve machine learning predictions? If yes, how?

AMY: Improving the accuracy of machine learning predictions is a popular use of graph algorithms. We know that relationships are some of the strongest predictors of behavior. We also know that more information makes our ML models more predictive but that data scientists rarely have all the information they want to train on. Instead of throwing out relationships, Neo4j helps customers incorporate this highly predictive information (that they already have!) to increase the accuracy, precision, and recall of machine learning models.

Neo4j users are using our graph algorithms library for connected feature engineering to create scores that they can extract and use in their machine learning pipelines. In the last chapter of the book, we walk through an example and compare the predictive quality of different models as we add more “graphy” features.
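Connected feature engineering boils down to computing per-node (or per-pair) graph scores and emitting them as ordinary columns. The sketch below is plain Python rather than the Neo4j library; the graph is hypothetical, and degree, triangle count, and local clustering coefficient stand in for whichever algorithm scores you would actually extract.

```python
from itertools import combinations

# Hypothetical undirected graph as adjacency sets.
adj = {
    "u1": {"u2", "u3", "u4"},
    "u2": {"u1", "u3"},
    "u3": {"u1", "u2"},
    "u4": {"u1"},
}

def node_features(adj):
    """Return one feature row per node: degree, triangles, clustering."""
    rows = []
    for node in sorted(adj):
        nbrs = adj[node]
        k = len(nbrs)
        # Each pair of neighbors that are themselves linked closes a triangle.
        triangles = sum(1 for a, b in combinations(sorted(nbrs), 2)
                        if b in adj[a])
        # Fraction of possible neighbor pairs that are actually linked.
        clustering = 2 * triangles / (k * (k - 1)) if k > 1 else 0.0
        rows.append({"node": node, "degree": k,
                     "triangles": triangles, "clustering": clustering})
    return rows

for row in node_features(adj):
    print(row)
```

Each row can then be joined onto a training table by node ID, exactly like any other column, which is what makes these scores easy to drop into an existing ML pipeline.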

Q6. Why did you choose Apache Spark for this book?

MARK: We use Spark and Neo4j to showcase graph algorithms in the book because each has unique qualities. Spark is a popular scale-out computing framework with libraries to support a variety of data science workflows. Neo4j offers a high-performance graph-native platform with over 35 graph algorithms and the ability to persist graphs.

Spark is an excellent place to explore graphs based on massive datasets and Neo4j is the ideal choice for advanced graph data science and building graph applications. This is why Neo4j and Databricks are bringing the graph query language, Cypher, to Spark 3.0, why Neo4j invests in Spark integrations, and why we include both in the book.

Q7. Can you give us an example of how to create an ML workflow for link prediction that combines Neo4j and Spark?

MARK: In link prediction, we are trying to infer new relationships that will form in the near future or estimate unobserved facts missing in our data. There are two methods for making this kind of prediction:

- Run specific algorithms, score the outcomes, and set a threshold value for a positive or negative prediction

- Use these same algorithm scores as features to train a machine learning model.
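The first approach can be sketched directly: score each unconnected pair with a link prediction measure and predict a link wherever the score meets a threshold. Here the measure is common neighbors; the graph and the threshold of 2 are hypothetical. In the second approach, the same raw score would instead become one feature column for a classifier.

```python
from itertools import combinations

# Hypothetical undirected graph as adjacency sets.
adj = {
    "a": {"b", "c", "d"},
    "b": {"a", "c", "d"},
    "c": {"a", "b"},
    "d": {"a", "b", "e"},
    "e": {"d"},
}

def common_neighbors(adj, u, v):
    """Number of nodes linked to both u and v."""
    return len(adj[u] & adj[v])

def predict_links(adj, threshold):
    """Approach 1: flag every unconnected pair whose score meets the threshold."""
    predictions = {}
    for u, v in combinations(sorted(adj), 2):
        if v in adj[u]:
            continue  # already linked, nothing to predict
        predictions[(u, v)] = common_neighbors(adj, u, v) >= threshold
    return predictions

# Approach 2: keep the raw score as a feature instead of thresholding it.
feature_rows = [(u, v, common_neighbors(adj, u, v))
                for (u, v) in predict_links(adj, 2)]

print(predict_links(adj, 2))
print(feature_rows)
```

Only the pair `("c", "d")`, which shares two neighbors, clears the threshold; a model trained on `feature_rows` could instead learn its own cut-off, possibly in combination with other features.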

There are several categories of graph algorithms commonly used for link prediction:

- Community detection algorithms for classifying nodes as part of one group or another

- Centrality algorithms for ranking node influence

- Similarity algorithms to score how alike one node is to another, or to find the most similar nodes

- Link Prediction algorithms that measure how structurally close nodes are
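The similarity and link prediction categories both reduce to neighborhood arithmetic. The plain-Python functions below implement four standard measures (Jaccard similarity, Adamic-Adar, resource allocation, and preferential attachment) from their textbook definitions. They are illustrations, not the Neo4j library’s procedures, and the graph is hypothetical.

```python
import math

# Hypothetical undirected graph as adjacency sets.
adj = {
    "a": {"b", "c", "d"},
    "b": {"a", "c", "d"},
    "c": {"a", "b"},
    "d": {"a", "b", "e"},
    "e": {"d"},
}

def jaccard(adj, u, v):
    """Shared neighbors as a fraction of all neighbors of u or v."""
    union = adj[u] | adj[v]
    return len(adj[u] & adj[v]) / len(union) if union else 0.0

def adamic_adar(adj, u, v):
    """Shared neighbors weighted by 1/log(degree): rare links count more.
    The degree > 1 guard avoids dividing by log(1) == 0."""
    return sum(1 / math.log(len(adj[z]))
               for z in adj[u] & adj[v] if len(adj[z]) > 1)

def resource_allocation(adj, u, v):
    """Like Adamic-Adar, but weighted by 1/degree."""
    return sum(1 / len(adj[z]) for z in adj[u] & adj[v])

def preferential_attachment(adj, u, v):
    """Well-connected nodes are assumed more likely to link."""
    return len(adj[u]) * len(adj[v])

for f in (jaccard, adamic_adar, resource_allocation, preferential_attachment):
    print(f.__name__, round(f(adj, "c", "d"), 3))
```

Each function returns a score for one candidate pair, so any of them can serve either as the thresholded score or as a model feature in the two approaches above.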

In cases where we are combining Neo4j and Spark for machine learning, we want to start our workflow by aggregating data in Spark. We may also want to do some basic exploration to confirm that our intended data has relevant relationships. Next, we’ll want to shape and store our data as a graph in Neo4j so we can dive further into it as a graph, evaluating which elements are more or less interesting, checking for sparsity, and the like.
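A quick way to check whether the data has meaningful relationships is to look at its basic shape: how many real links there are after cleanup, the density (fraction of possible links that exist), and which nodes have no links at all. The sketch below is plain Python standing in for what you might compute in Spark or Neo4j; the account data is hypothetical, and any cut-off for “too sparse” is a judgment call.

```python
def graph_summary(nodes, edge_list):
    """Basic shape checks for an undirected graph."""
    # Normalize direction, drop self-loops, and collapse duplicate rows.
    edges = {tuple(sorted(e)) for e in edge_list if e[0] != e[1]}
    node_set = set(nodes)
    n, m = len(node_set), len(edges)
    possible = n * (n - 1) / 2
    linked = {x for e in edges for x in e}
    return {
        "nodes": n,
        "edges": m,
        "density": m / possible if possible else 0.0,
        "isolated": sorted(node_set - linked),
    }

accounts = ["a1", "a2", "a3", "a4", "a5"]
# Hypothetical raw rows: a reversed duplicate and a self-loop included.
raw_edges = [("a1", "a2"), ("a2", "a1"), ("a2", "a3"), ("a4", "a4")]

print(graph_summary(accounts, raw_edges))
```

Here four raw rows collapse to two real relationships, giving a density of 0.2 with two isolated accounts: the kind of signal worth knowing before investing in graph features.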

At this point, we also want to cleanse data by performing tasks such as removing outliers, handling missing information, and normalizing data. Within Neo4j we can use graph algorithms for feature engineering and write back results such as community membership, influence ranking, and link estimation scores.
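The cleansing steps are ordinary tabular operations applied to graph-derived columns. As a sketch, here is a simple interquartile-range outlier filter followed by min-max normalization in plain Python. The scores are made up, and the 1.5 × IQR rule is one common convention rather than something this workflow prescribes.

```python
from statistics import quantiles

def drop_iqr_outliers(values, k=1.5):
    """Keep values within [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [x for x in values if low <= x <= high]

def min_max(values):
    """Rescale to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(x - lo) / (hi - lo) for x in values]

# Hypothetical influence scores for six accounts; one is far out of range.
scores = [0.12, 0.15, 0.11, 0.14, 0.13, 0.95]
kept = drop_iqr_outliers(scores)
print(kept)
print([round(x, 2) for x in min_max(kept)])
```

Filtering before scaling matters: if the 0.95 value stayed in, it would squeeze every other account into a narrow band near zero after min-max normalization.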

As a next step, we’ll prepare our data for machine learning by splitting it into training and testing datasets and then resampling to make them proportional representations. This could be done in a variety of tools; Python is a popular choice. We should always remember to check for data leakage (related information appearing in both our training and testing data) and consider time-based splitting for graph data. Then we’ll extract the features we created in Neo4j and train our model in Spark, likely using tools in Spark’s machine learning library, MLlib, and perhaps something like a random forest to classify whether there will be a link or not.
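A time-based split, a basic leakage check, and balanced negative sampling can be sketched in plain Python. Everything here is an assumption for illustration: each relationship carries a hypothetical year, links formed before the cutoff become training data and later ones testing data, and leakage is checked narrowly as the same node pair appearing on both sides. Negative examples (unlinked pairs) are downsampled to match the number of positives.

```python
import random
from itertools import combinations

# Hypothetical (node_a, node_b, year) relationships.
links = [
    ("a", "b", 2015), ("b", "c", 2016), ("a", "c", 2017),
    ("c", "d", 2018), ("d", "e", 2019), ("b", "d", 2019),
]

def time_split(links, cutoff):
    """Relationships formed before the cutoff train; the rest test."""
    train = {tuple(sorted((u, v))) for u, v, year in links if year < cutoff}
    test = {tuple(sorted((u, v))) for u, v, year in links if year >= cutoff}
    return train, test

def leaked_pairs(train, test):
    """Node pairs present in both sets would leak the answer."""
    return train & test

def balanced_negatives(nodes, positives, known_links, seed=42):
    """Sample as many never-linked pairs as there are positives.
    Excluding all known links (including future ones) keeps a pair that
    links later from being labeled negative in training."""
    candidates = [p for p in combinations(sorted(nodes), 2)
                  if p not in known_links]
    rng = random.Random(seed)
    return rng.sample(candidates, min(len(positives), len(candidates)))

train, test = time_split(links, cutoff=2018)
assert not leaked_pairs(train, test), "same pair on both sides of the split"
nodes = {x for u, v, _ in links for x in (u, v)}
all_links = train | test
train_negatives = balanced_negatives(nodes, train, all_links)
print(sorted(train), sorted(test), train_negatives)
```

A random split would happily put a 2019 link in training and a 2015 link in testing, letting the model learn from the future; splitting on time mirrors how the model will actually be used.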

After this, we need to evaluate our results (maybe with a subject matter expert), comparing different models for different goals such as reducing false positives vs. global accuracy. We might plot and evaluate results directly or use a separate visualization tool. I should also mention that this process is iterative and cycles back on itself at many points as we try different models and test different features and algorithms. Finally, once we are happy with our ML model, we’ll then want to operationalize it. That could take many forms, including creating a graph-based application to match new patterns to our new predictive models.
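Comparing models for different goals usually means looking past accuracy alone. This small plain-Python sketch computes accuracy, precision, recall, and the false-positive count from actual and predicted link labels. The label vectors are invented to show how a model can win on accuracy while missing every real link.

```python
def confusion(actual, predicted):
    """Count true/false positives and negatives for 0/1 labels."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == 1 and p == 1)
    fp = sum(1 for a, p in pairs if a == 0 and p == 1)
    fn = sum(1 for a, p in pairs if a == 1 and p == 0)
    tn = sum(1 for a, p in pairs if a == 0 and p == 0)
    return tp, fp, fn, tn

def metrics(actual, predicted):
    tp, fp, fn, tn = confusion(actual, predicted)
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "false_positives": fp,
    }

# Hypothetical labels: 1 means "a link formed", 0 means it did not.
actual  = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
model_a = [0, 0, 0, 0, 0, 0, 0, 0, 0, 0]  # never predicts a link
model_b = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]  # catches both, plus 3 false alarms

print("A:", metrics(actual, model_a))
print("B:", metrics(actual, model_b))
```

Model A scores 0.8 accuracy against model B’s 0.7, yet finds none of the actual links; model B finds both at the cost of three false positives. Which one is preferable depends on whether missed links or false alarms are more expensive.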

We walk through a similar workflow in detail in our chapter, “Using Graph Algorithms to Enhance Machine Learning.” Below is a visual of a general workflow that Amy and I presented in a recent talk, but you could use it as a template for various technologies.

About Amy Hodler

Amy is a network science devotee, AI and Graph Analytics Program Manager at Neo4j, and a co-author of the O’Reilly book, “Graph Algorithms: Practical Examples in Apache Spark and Neo4j.” She promotes the use of graph analytics to reveal structures within real-world networks and predict dynamic behavior. Amy helps teams apply novel approaches to generate new opportunities at companies such as EDS, Microsoft, Hewlett-Packard (HP), Hitachi IoT, and Cray Inc. Amy has a love for science and art with a fascination for complexity studies and graph theory.

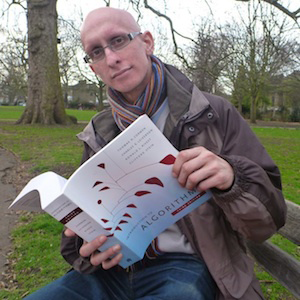

About Mark Needham

Mark Needham is a graph advocate and developer relations engineer at Neo4j. He works to help users embrace graphs and Neo4j, building sophisticated solutions to challenging data problems. Mark has deep expertise in graph data, having previously helped to build Neo4j’s Causal Clustering system. He writes about his experiences of being a graphista on his popular blog at https://markhneedham.com/blog/ and tweets @markhneedham.

Resources

Free Ebook

Graph Algorithms: Practical Examples in Apache Spark and Neo4j

By Mark Needham & Amy E. Hodler