Semantic Analysis: When Computers will Understand us

BY Tetyana Loskutova

This essay aims to shed light on the issues of context in computerised semantic analysis. Semantic analysis is the field of study concerned with meaning. Meaning is routinely encoded by humans in speech, signs, music, and other kinds of artificially constructed data. Among these, written text is one of the most universal mediums and, as such, chosen for the present discussion. Encoding of real-world phenomena in text is associated with some loss of meaning, which can often be restored given the appropriate context. However, it can be argued that encoding also allows for new meanings to emerge. For example, poetry excites the imagination of the reader often resulting in imagery unknown to the author. Is it a misunderstanding or, on the contrary, a deeper understanding? What about Artificial Intelligence? Are computers bound to extracting the most literal and logical meaning from text or is there a possibility for novel yet valid interpretations?

Is the lack of understanding that computers exhibit of human speech fundamentally different from the misunderstanding that humans are prone to?:

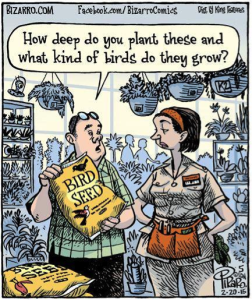

The image above illustrates the issue of context in semantics: the buyer in the image understands the local context of the speech situation, that is a gardening shop where people buy plants for their garden; but he misunderstands a wider context of a garden as a place for interacting with nature where birds may be attracted by food to complement the experience of the gardener. Analogous context interpretation mistakes are common in the computer-based processing of text.

In fact, computers have not yet been given the authority to define the context independently. Despite significant advances in text classification, translation, and text generation, most methods depend on the prior identification of the higher-level context by humans. This pre-classification is done by training classifiers on labelled data or a particular corpus and by limiting the context of the problem to a specific topic, such as sentiment analysis. But the limits can be also restrictive: a sentiment analysis would confidently classify “I am so happy!” but it won’t recognise if the expression was genuine or sarcastic.

Consider this sentence from Time magazine’s article about the origins of the Easter [1]:

Rabbits, known for their energetic breeding, have traditionally symbolized fertility.

Using pre-trained classifiers in Mathematica, the sentence can be classified without any additional information:

In[]: Classify[“FacebookTopic”, “Rabbits, known for their energetic breeding, have traditionally symbolized fertility”]

Out[]: PetsAndAnimals

“FacebookTopic” refers to the data used to train the classifier. The answer seems correct at the first glance, however, reading the full article would reveal that the text has nothing to do with the discussion of animals or pets and is concerned primarily with the history of the Easter holiday. Just like the buyer in the garden shop, the classification algorithm (and the coder!) in this case makes the mistake of not considering a more general yet related context somewhere on the level between “Rabbits” and “Facebook”. The solution that seems apparent is to consider the neighbouring sentences. However, in this particular text, even taking two full surrounding paragraphs still returns “PetsAndAnimals” as the classification label. What can be done to improve the scoping ability of the classifier, to broaden its mind and make it “think” out-of-the box?

One possible answer is link-based classification. Link-based classification is loosely related to structuralist theories of meaning whose main idea is that the meaning of any word is constructed by a superposition of all related words within a language [3]. Since it is questionable that the meaning resides in discrete words [3], the linking idea can be extended to phrases, sentences, or paragraphs. In this case, the meaning of a paragraph is constituted by the totality of relationships of the paragraph to the other words within the language. Leveraging from this idea, the link-based classification algorithm works by grouping paragraphs based on the linkages between the words within these paragraphs. The basic intuition of this method is that two paragraphs are more likely to belong to the same category if they contain links to one another or if they are both linked to a separate common paragraph or a group of words; conversely, texts with no such links are likely to represent different categories.

The difficulty of the link-based method is in defining and finding these links. Semantic theory discusses many levels of word linkages:grammatical, lexical, and syntactical among others. Potentially, all of these levels can usefully contribute to classification. However, automated extraction of these links is rather challenging. The solution can be found in relaying this task to already existing and frequently updated sources of links, such as Wikipedia. For example, coming back to the example from the Easter history article, the subject of the sentence is “Rabbits”. A search on Wikipedia for “rabbits” returns an article about “Rabbit” [4] written in Wikicode, which contains a number of links to the other articles in the Wikipedia, including the articles titled “Easter” and “Easter Bunny”. This implies that such a link-based search has the potential to broaden the classifier’s “mind” by including the concepts of Easter and Easter Bunny in its classification context.

Of course, at this stage, the classifier is still unlikely to associate the article with Easter because the reference to the Wikipedia article will bring in a collection of other links as well. However, the further inclusion of the words (“Eostre”, “eggs”, “Easter”) from the two neighbouring sentences can enhance the predictive capacity of the classifier.

The most important feature of this approach is that linking to Wikipedia can “teach” the classifier about the existence of potentially more suitable labels for classification of the sentence, that is, labels that were not given to the classifier by the coder.

The general steps in the link-based classification approach are derived from the previous work on labelled and unlabelled link-based classification [5, 6]:

- A classifier model is built using a training set with labelled data. This is called local classifier.

- An unlabelled text is assigned labels based on the local classifier.

- Links between texts with labels are calculated and an optimization algorithm is used to re-assign the labels in such a manner that the most connected clusters of text have the minimum diversity of labels – this is called the relational classifier. The results of the relational classifier are used to adjust the parameters of the local classifier.

- Steps 2,3 are then repeated until some criterion is met, such as the number of iterations, or the optimization convergence, or the target accuracy of the model.

- In the cases when the convergence or the desired accuracy is not achieved a new label should be generated.

The advantages of the approach:

- dynamic labelling;

- capturing of both the linguistic “sense” [3, p. 5] of text and its referentially defined position in the corpus;

- knowledge about the proximity of different labels, which can be used to aggregate labels into taxonomic and thematic categories.

The disadvantages are:

- dependence on other methods for initial labelling;

- The difficulty with reference extraction from the natural language.

This approach is still in its early development stages. It has been tested on semi-structured text, such as html [6] and Wikicode [7]. The application to unstructured text should be the next step.

The development of this approach can potentially bring interesting results. Firstly, computers may be able to grasp the meaning that humans are not likely to grasp because of the deep human embeddedness in the context of human life. Can this computer-derived meaning be still useful for humans? It can be. For example, today, humans are using computer-based statistical approaches to gain better insight into the future. Secondly, visualising the training steps of the link-based algorithm can provide valuable insights into the learning process, such as the critical “turning points” in learning that result in completely different conclusions. Can this help us understand the progression of human knowledge? It is conceivable as well.

References

[1] http://time.com/3767518/easter-bunny-origins-history/, retrieved 30 July 2017

[2] Wolfram developer platform. New notebook https://develop.open.wolframcloud.com/app/view/newNotebook?ext=nb\

[3] Goddard, C. (2011). Semantic analysis: A practical introduction. Oxford University Press, Oxford, https://books.google.co.za/books?id=XW4WL3mKjjkC&printsec=frontcover#v=onepage&q&f=false, retrieved 30 July 2017

[4] “Rabbit”, Wikipedia, https://en.wikipedia.org/wiki/Rabbit, retrieved 30 July 2017

[5] Lu, Q., & Getoor, L. (2003). Link-based classification. In Proceedings of the 20th International Conference on Machine Learning (ICML-03) (pp. 496-503).

[6] Lu, Q., & Getoor, L. (2003). Link-based classification using labeled and unlabeled data. In ICML 2003 workshop on The Continuum from Labeled to Unlabeled Data in Machine Learning and Data Mining.

[7] Loskutova, T. Combining content and link-based classifiers to analyze Wikipedia. http://community.wolfram.com/groups/-/m/t/1136795?p_p_auth=1BIuWq6S, retrieved retrieved 30 July