Video copyright: Sesame Street

tl;dr The clip above is a dashboard recording that demonstrates real-time video analysis in Redis. Its code is at https://github.com/RedisGears/EdgeRealtimeVideoAnalytics and you can scroll down for more details.

During the last couple of months, we at Redis Labs made several exciting announcements, including RedisGears (a dynamic execution framework), RedisAI (for deep learning model execution) and RedisTimeSeries (a time series database). These, on top of Redis v5 and the new Redis Streams data structure, together constitute what we’ve dubbed RedisEdge: a purpose-built, multi-model database for the Internet of Things (IoT) edge.

The premise behind RedisEdge is that it makes it simple to develop, deploy and run complex data processing tasks right at the edge of the Internet of Things, as near as possible to the actual things themselves. Sensors of any possible kind can report their data to the database, which not only stores that data but can also process it for further consumption and/or shipping. We started out with a proof of concept to ensure we had both the cold hard numbers to back up this promise, and a nice user interface to visualize everything.

Any proof of concept needs a use case, and we went with counting people in a live video stream. Possible business applications of this technology could be enabling per-use shared workspace pricing or capacity management for public events, among a multitude of others. To do the heavy lifting of detecting objects (i.e., people) in video frames, we used a modern machine learning model called YOLO or “You Only Look Once.” The object detection system is well-known for its comparatively good performance and accuracy, so all that was left to do was “just” to hook it up into RedisEdge (more on this subject in “RedisAI: Thor’s Stormbreaker for Deep Learning Deployment”).

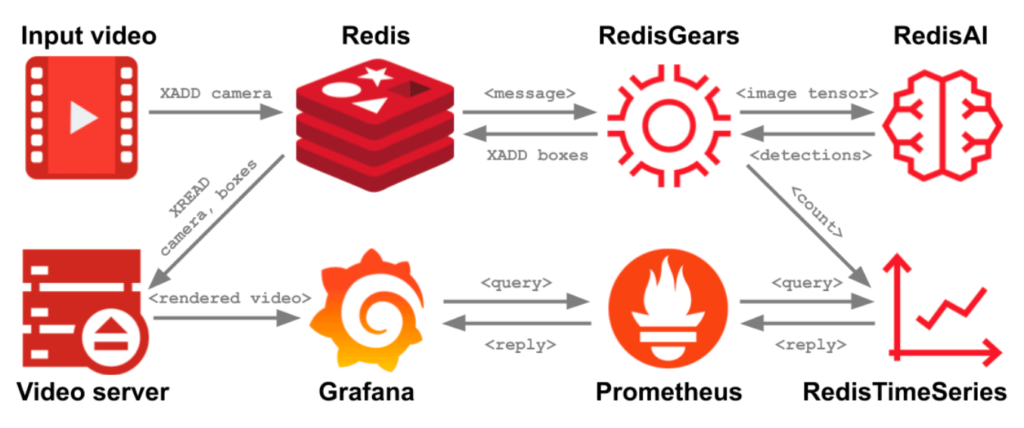

The following diagram depicts the result:

Our RedisEdge use case: step-by-step

- The driver of this setup is a video capture process (top-left corner), which takes an input video stream from a camera or a file, and extracts its constituent frames. The capture process connects with the standard redis-py client to the RedisEdge server and appends each captured frame as a new message to a Redis Stream with a call to `XADD`. This message consists of the frame’s number (for debugging) and the frame’s raw encoded-as-JPEG byte representation.

- The addition of a message to the input Redis Stream triggers the execution of a RedisGears script. The script implements a pipeline of operations and is conceptually made up of three main parts: downsampling the input stream’s frame rate, running the model on a frame, and storing the detections along with various metrics.

- The first part, downsampling, maintains performance via a throttling mechanism implemented in Python. Optimally, we’d like to process all frames as fast as they come in, in order to provide accurate, timely results. However, processing even a single frame takes time, and when the input frame rate is higher than the processing rate, we end up with a backlog that eventually explodes. So, to avoid blowing up in glorious flames, we sacrifice completeness and downsample the input frame rate by dropping some of the input frames, leaving only a part of the frames to be processed. The measured processing frame rate dynamically determines how many frames are dropped and how many are kept.

- In the script’s second part, frames that weren’t dropped by the downsampling filter are run through the YOLO model. Before this can happen, the frame needs to be decoded from its JPEG form, resized and normalized. All these tasks are achieved, again, via the use of conventional Python code and the usual assortment of standard libraries for numeric arrays (numpy), images (pillow) and computer vision (opencv).
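To make the downsampling step from the list above more concrete, here is a minimal sketch of such a throttle in plain Python. It is not the code from the demo's repository: the `FrameThrottle` class, its method names, the exponential moving average and the default values are all assumptions made for illustration.

```python
class FrameThrottle:
    """Drops frames so the accepted rate tracks the measured processing rate."""

    def __init__(self, initial_ms_per_frame=33.0, smoothing=0.2):
        self.ms_per_frame = initial_ms_per_frame  # running estimate of per-frame cost
        self.smoothing = smoothing                # weight given to the newest measurement
        self.next_ts = 0.0                        # earliest timestamp we will accept

    def should_process(self, frame_ts_ms):
        """Return True if the frame is kept, False if it is dropped."""
        if frame_ts_ms < self.next_ts:
            return False
        # Reserve enough time to finish this frame before accepting another.
        self.next_ts = frame_ts_ms + self.ms_per_frame
        return True

    def record_processing_time(self, elapsed_ms):
        """Fold a new measurement into the estimate (exponential moving average)."""
        self.ms_per_frame += self.smoothing * (elapsed_ms - self.ms_per_frame)


def simulate(input_fps, processing_ms, seconds=1):
    """Feed evenly spaced frames through the throttle; return (kept, total)."""
    throttle = FrameThrottle(initial_ms_per_frame=processing_ms)
    total = input_fps * seconds
    kept = 0
    for i in range(total):
        if throttle.should_process(i * 1000.0 / input_fps):
            kept += 1
            throttle.record_processing_time(processing_ms)
    return kept, total
```

Calling `simulate(30, 100)` models a 30fps camera feeding a model that needs a hypothetical 100 ms per frame, so only about one frame in three should survive. When processing is faster than the input, nothing is dropped.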

The processed frame is then converted into a RedisAI tensor. All calls to RedisAI from RedisGears use a direct API to achieve optimal performance, as opposed to communication via Redis’ keyspace, and the tensor is directly fed to a TensorFlow YOLO model. The model is executed and, in turn, returns a reply tensor that contains object detections from the frame. The model’s reply is then further processed with a RedisAI PyTorch script that performs intersection and non-maxima suppression on the detected bounding boxes. Then, the boxes are filtered by their label to exclude all non-people detections, and their coordinates are translated back to the frame’s original dimensions.
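The post-processing described above can be illustrated with a small, pure-Python stand-in. In the demo this work is done by a RedisAI PyTorch script, so treat the following only as a sketch of the idea: the detection dictionary layout, the function names, the 416-pixel model input size, the plain stretch resize and the use of label `0` for "person" are assumptions, not details taken from the project.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(detections, iou_threshold=0.5):
    """Greedy NMS: visit boxes by descending score and drop any box that
    overlaps an already kept box of the same label too much."""
    kept = []
    for det in sorted(detections, key=lambda d: d["score"], reverse=True):
        overlaps = any(
            det["label"] == k["label"] and iou(det["box"], k["box"]) > iou_threshold
            for k in kept
        )
        if not overlaps:
            kept.append(det)
    return kept


def people_boxes(detections, frame_w, frame_h, model_size=416, person_label=0):
    """Keep only people and scale boxes from model space to frame space.

    Assumes the frame was stretched to model_size x model_size before inference.
    """
    sx, sy = frame_w / model_size, frame_h / model_size
    boxes = []
    for det in non_max_suppression(detections):
        if det["label"] != person_label:
            continue
        x1, y1, x2, y2 = det["box"]
        boxes.append((x1 * sx, y1 * sy, x2 * sx, y2 * sy))
    return boxes
```

Given two heavily overlapping "person" detections and one detection with another label, `people_boxes` would return a single box, scaled to the original frame size.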

The gear’s final part stores the model’s processed output in another Redis Stream. Messages in the output Redis Stream consist of a reference to the frame’s ID from the original input stream, the number of people detected and a list of their respective bounding boxes. By reading the input and output Redis Streams, a video server can render an image that is composed of the input frame and the people detected in it. In addition to populating the output stream, the gear also stores the number of people counted, as well as various performance metrics (e.g., frame rates and execution times for different steps) as RedisTimeSeries data. This provides a simple way to externalize and integrate the pipeline’s output with any third-party reporting/dashboarding/monitoring application and/or service, such as Grafana in this example.
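As a rough illustration of how the input and output streams relate, the sketch below writes a frame, writes a detection message that refers back to it, and pairs the two the way a video server might. The functions take any redis-py style client (one exposing `xadd`, `xrange`, `xrevrange` and `execute_command`). The function names and the field names (`count`, `img`, `ref`, `people`, `boxes`) are illustrative assumptions, not the demo's actual schema.

```python
import json


def publish_frame(client, stream, frame_number, jpeg_bytes):
    """Capture side: append one frame to the input stream; returns its message ID."""
    return client.xadd(stream, {"count": frame_number, "img": jpeg_bytes})


def publish_detections(client, stream, frame_id, boxes):
    """Gear side: store the model's output with a reference to the input message."""
    fields = {"ref": frame_id, "people": len(boxes), "boxes": json.dumps(boxes)}
    return client.xadd(stream, fields)


def record_people_count(client, key, people):
    """Gear side: add a sample to a RedisTimeSeries key ('*' = server timestamp)."""
    return client.execute_command("TS.ADD", key, "*", people)


def latest_annotated_frame(client, in_stream, out_stream):
    """Video server side: pair the newest detections with the frame they refer to.

    Field names are read as bytes because the client must not decode responses
    (the JPEG payload is binary). Returns (jpeg_bytes, boxes) or None.
    """
    newest = client.xrevrange(out_stream, count=1)
    if not newest:
        return None
    _, fields = newest[0]
    frame_id = fields[b"ref"]
    frames = client.xrange(in_stream, min=frame_id, max=frame_id)
    if not frames:
        return None  # the frame may already have been trimmed from the stream
    return frames[0][1][b"img"], json.loads(fields[b"boxes"])
```

With a running server, `client` would be something like `redis.Redis()` created without `decode_responses`, and the stream and key names are whatever the pipeline uses. A dashboard such as Grafana would then read the time series key rather than the streams.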

That’s basically it – data goes in, gets processed, is stored and sometimes served back – just like many other systems.

So what makes RedisEdge so special?

I’m a Redis geek. For me, Redis is my stack and where I feel at home. More personal trivia include Transylvanian origins and strong ambivalence for numbers.

When people ask me why Redis is special, I always say: “because it is fast and fun.” RedisEdge is fast because, well duh, it is Redis and you can count on it for performance. Truism: Having all data stored in purpose-built data structures in memory is the best thing for performance.

Of course, the hardware also plays a big role when it comes to performance. This is even truer when executing deep learning models. YOLO – while modern and performant – still requires a decent GPU to keep up with an HD 60fps video stream without dropping a frame. On the other hand, a decent RedisEdge CPU can handle a standard 30fps webcam with no more than a 5% drop rate. So, given the right hardware and input, it can be fast. Very.

And is RedisEdge fun? Hell yeah! If anything, scroll back up and look at the pretty boxes and graphs. That’s satisfaction in its purest form, and the best part is that it’s totally repeatable and modifiable. Because everything is achieved with basic scripting, I could, for example, with minimal changes make it count bats. Or maybe something more practical, like cars. Or I could replace YOLO with MobileNet – a different model that recognizes animals – but that’s already been done (see: https://github.com/RedisGears/AnimalRecognitionDemo).

Feel free to email or tweet at me with other use case ideas – I’m highly-available 🙂

Sponsored by Redis Labs