Drowning in Data? A Data Lake May Be Your Lifesaver

Q&A with HPCC Systems on how data lakes let you spend less time managing data and more time analyzing it

In today’s digital world, data is king. Organizations that can capture, store, format, and analyze data and apply the business intelligence gained through that analysis to their products or services can enjoy significant competitive advantages.

But the amount of data companies must manage is growing at a staggering rate. Research analyst firm Statista forecasts global data creation will hit 180 zettabytes by 2025. Such growth makes it difficult for many enterprises to leverage big data; they end up spending valuable time and resources just trying to manage data and less time analyzing it. A 2019 McKinsey survey on global data transformation revealed that enterprise IT teams spent 30 percent of their total time on non-value-added tasks related to poor data quality and availability. Data management problems can also lead to data silos: disparate collections of databases that don’t communicate with each other, which leads to flawed analysis based on incomplete or incorrect datasets.

One way to address this is to implement a data lake: a large and complex database of diverse datasets all stored in their original format. The data lake can then refine, enrich, index, and analyze that data. All of this happens within the data lake environment to produce consistent results and remove the possibility of data silos.

SourceForge recently connected with Arjuna Chala, associate vice president at HPCC Systems, where he is responsible for evangelizing the HPCC Systems data lake platform. Arjuna has a long history of helping customers use data analytics to innovate in the healthcare, fintech, cryptocurrency, and smart device industries, and he’s been instrumental in helping HPCC Systems gain adoption among enterprises in Brazil, China, India, the U.S., and various countries in Europe.

In our discussion, we cover the genesis of the HPCC Systems data lake platform and what makes it different from other big data solutions currently available.

Arjuna Chala, associate vice president, HPCC Systems

For those not familiar with the HPCC Systems data lake platform, can you describe your organization and the development history behind HPCC Systems?

Sure. The technology that became HPCC Systems began its existence in 1999 at a company called Seisint. They were interested in creating a data platform capable of managing a sizable number of datasets. Seisint’s work led to the development of Enterprise Control Language, or ECL, a programming language that HPCC Systems uses to this day. In 2004, LexisNexis Risk Solutions acquired Seisint and began using HPCC Systems internally. Other acquisitions followed, and relevant data management and analysis technologies obtained through those acquisitions were integrated into the HPCC Systems stack. This led to LexisNexis releasing HPCC Systems under an open-source license, which made this powerful platform available to a global customer and developer community. Our initial successes with HPCC Systems were in academia, but we’re now seeing HPCC Systems adopted by enterprises in a range of markets.

What are the big differentiators between HPCC Systems and other big data tools? For example, how does HPCC Systems compare to Spark?

Spark is indeed a popular big data tool. Spark and HPCC Systems were designed using different approaches. Spark was built to be part of a larger big data solution and on its own cannot serve as a complete data lake platform. Enterprises using Spark for a data lake implementation need to source and integrate additional software for user management, data storage and delivery, execution control, and administration. HPCC Systems was built from the ground up to provide not just data delivery and analysis to users; it also supports data ingestion, processing, formatting, analysis, and reporting. Furthermore, we’ve developed data encryption and governance solutions for HPCC Systems to help secure data, ensure it is only accessed by appropriate personnel, and create audit trails so that data security SLAs and regulations are met. It truly is an all-in-one data lake solution.

HPCC Systems and Spark also differ in that they work with distinct parts of the big data pipeline. Spark is more focused on data science, ingestion, and ETL, while HPCC Systems focuses on ETL and data delivery and governance.

Having said this, it’s worth mentioning that choosing between HPCC Systems and another big data tool like Spark is not an either/or proposition. Spark clusters can and do run in an HPCC Systems data lake, and in some cases a mixed environment will be the best implementation for customers, particularly if they already have working Spark clusters that they need to keep operating.

You describe HPCC Systems as a complete data lake platform. Can you get more granular? What exactly does an HPCC Systems platform consist of?

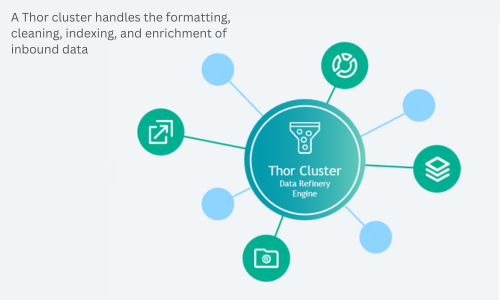

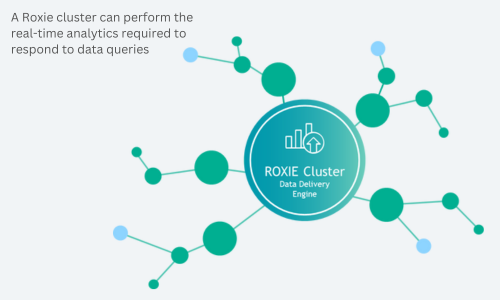

HPCC Systems consists of three primary components: the ECL programming language, a bulk data processing cluster called Thor that cleans, standardizes, and indexes data, and a real-time API/query cluster called Roxie to handle data queries.

Tell me more about ECL. It’s not a widely known programming language like Java, Python, or SQL.

The big difference is that ECL was designed specifically to be a data-oriented programming language. This means that high-level data primitives like JOIN, TRANSFORM, PROJECT, SORT, DISTRIBUTE, MAP, and NORMALIZE are first-class functions, so common data operations can be written with just one line of code. ECL is also a declarative programming language, rather than an imperative language like Java. In essence, ECL lets developers tell the data lake what they want without requiring them to instruct the platform how to go about getting it. This results in a more efficient coding experience; a search query written in ECL may take less than a hundred lines of code, while the same query in Java could require thousands. Better still, as ECL is used across the entire HPCC Systems environment, an HPCC Systems data lake generally requires fewer programmers to build and maintain it, since there’s no need for IT staff with expertise in different languages.
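To make the declarative versus imperative distinction concrete, here is a small analogy written in Python rather than ECL. It is only a sketch: the record layout and both function names are invented for illustration and say nothing about how ECL itself is written or executed. The first function spells out every step of filtering and sorting; the second simply states the result it wants and leaves the strategy to the runtime.

```python
def names_in_city_imperative(records, city):
    """Imperative style: spell out each step, including how to sort."""
    matches = []
    for record in records:
        if record["city"] == city:
            matches.append(record["name"])
    # Hand-written insertion sort, shown only to illustrate the "how".
    for i in range(1, len(matches)):
        current = matches[i]
        j = i - 1
        while j >= 0 and matches[j] > current:
            matches[j + 1] = matches[j]
            j -= 1
        matches[j + 1] = current
    return matches


def names_in_city_declarative(records, city):
    """Declarative style: describe the result; the runtime picks the method."""
    return sorted(r["name"] for r in records if r["city"] == city)
```

Both functions return the same list for the same input; the difference is how much of the procedure the developer has to write and maintain.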

ECL sounds compelling, but it is a new programming language and has fewer users than languages like Python or SQL. What is HPCC Systems doing to help address the learning curve associated with using ECL?

While it’s true that ECL has a small but rapidly growing user community (we estimate there are 2,000 ECL developers working today), I wouldn’t describe the language as new. It’s a tried-and-true programming language that’s been in use since HPCC Systems’ creation back in the late nineties.

We’ve worked hard to ensure that customers have access to a range of training options for ECL. We offer training in a variety of formats, from online tutorials to multi-day, hands-on, in-person workshops. Most online training is available on demand, and most courses are free to the open source and academic communities. And since HPCC Systems was released under an open-source license, the global community of ECL users and HPCC Systems administrators can scale to address training and support requests that are beyond the capabilities of proprietary solutions.

And what about the Thor and Roxie clusters? What value do they provide to the overall HPCC Systems platform?

As the database server in an HPCC Systems solution, a Thor cluster’s job is to import and process data at scale. To achieve this, Thor supports parallel processing and data partitioning. Let me give a real-world example of how these features impact HPCC Systems’ performance. Say you have a new dataset that lists customers by name and address, and you have an existing dataset in your data lake that has the date and dollar amount of their most recent purchase with you. You can import the new dataset into Thor, write code to resolve the names and addresses, and then link it to the purchase history dataset to create a file that combines both (name, address, and purchase history). Even if these datasets included billions of data entries, Thor’s parallel processing and data partitioning will deliver the merged file in minutes. Other big data solutions could take hours to produce the same result.
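The general idea behind a partitioned, parallel link of two datasets can be sketched in a few lines of Python. This is not Thor or ECL code, and it makes several assumptions that the interview does not state: both datasets carry `name` and `address` fields, "resolving" means nothing more than lowercasing and collapsing whitespace, and every function and field name here is hypothetical. Python threads are used only to show the structure; a real cluster would spread the partitions across separate machines.

```python
from concurrent.futures import ThreadPoolExecutor
from zlib import crc32


def normalize(name, address):
    """Toy 'resolution' step: lowercase and collapse whitespace (assumption)."""
    return " ".join(f"{name} {address}".lower().split())


def record_key(record):
    return normalize(record["name"], record["address"])


def partition(records, n_parts):
    """Hash-partition records so matching keys land in the same partition."""
    parts = [[] for _ in range(n_parts)]
    for record in records:
        parts[crc32(record_key(record).encode("utf-8")) % n_parts].append(record)
    return parts


def join_partition(customers, purchases):
    """Join one partition of customers with the matching partition of purchases."""
    purchase_by_key = {record_key(p): p for p in purchases}
    merged = []
    for customer in customers:
        purchase = purchase_by_key.get(record_key(customer))
        if purchase is not None:
            merged.append({
                "name": customer["name"],
                "address": customer["address"],
                "last_purchase_date": purchase["date"],
                "last_purchase_amount": purchase["amount"],
            })
    return merged


def partitioned_join(customers, purchases, n_parts=4):
    """Partition both datasets the same way, then join the partitions in parallel."""
    customer_parts = partition(customers, n_parts)
    purchase_parts = partition(purchases, n_parts)
    with ThreadPoolExecutor(max_workers=n_parts) as pool:
        results = list(pool.map(join_partition, customer_parts, purchase_parts))
    return [row for part in results for row in part]
```

Because both datasets are partitioned with the same hash of the same key, each partition can be joined independently of the others, which is what lets the work be divided among many workers.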

A Roxie server cluster is optimized to handle data queries in real time. While Thor works well handling bulk data transformation requests, it’s not designed to provide results in real time. And since users don’t want to sit around for hours waiting for their query results, Roxie’s workflow uses a server/agent design to provide sub-second response times to a user’s data queries. When Roxie receives a query, it first identifies which Thor cluster or clusters can best fulfill the request. Roxie then sends messages requesting the data from all the clusters involved. Roxie then consolidates that data and presents the results.
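The fan-out and consolidate pattern described above can be illustrated with a short Python sketch. It is a generic scatter-gather example, not Roxie’s actual implementation: the `Agent` and `Server` classes, the `customer_id` and `date` fields, and the choice to query every agent on each request are all assumptions made for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


class Agent:
    """Holds one slice of the data, indexed for fast lookups (hypothetical)."""

    def __init__(self, rows):
        self.index = {}
        for row in rows:
            self.index.setdefault(row["customer_id"], []).append(row)

    def lookup(self, customer_id):
        return self.index.get(customer_id, [])


class Server:
    """Receives a query, fans it out to the agents, and merges their answers."""

    def __init__(self, agents):
        self.agents = agents

    def query(self, customer_id):
        # Ask every agent at the same time rather than one after another.
        with ThreadPoolExecutor(max_workers=len(self.agents)) as pool:
            partials = list(pool.map(lambda agent: agent.lookup(customer_id), self.agents))
        # Consolidate the partial results into a single ordered response.
        merged = [row for part in partials for row in part]
        return sorted(merged, key=lambda row: row["date"])
```

The response time of a design like this is bounded by the slowest agent rather than by the sum of all lookups, which is one reason pre-indexed data and parallel requests suit low-latency queries.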

Both Thor and Roxie are programmed using ECL, so no matter what stage of the data pipeline an HPCC Systems administrator is working in, there’s only one language to learn. A data lake that doesn’t use an all-inclusive platform like HPCC Systems could potentially require support for more than two programming languages, making the management of the data lake more complicated.

We’ve covered the components and capabilities of HPCC Systems, but what does all of this mean to an IT team considering using the platform? What practical benefits does HPCC Systems provide users?

I think the big value of HPCC Systems beyond its features and performance is its simplicity. It’s a complete solution. IT teams don’t need to vet and purchase the different parts of a data lake from multiple vendors, and then integrate those disparate solutions into one platform. That kind of integration could take weeks before a data lake is up and running, while the same result can be obtained with an HPCC Systems-based data lake in just a few hours.

ECL also adds to the simplicity of the HPCC Systems user experience. Administrators at any point in the data workflow only need to know how to code in ECL, and as the language was specifically developed for data management and query applications, it’s a much more efficient language in terms of code length and complexity.

And as HPCC Systems is open source, users get the benefit of a global development community that can scale to address nearly any need. If a particular application or feature is needed, there’s nothing to stop a user or users from developing it. Proprietary data lake solutions can leave users exposed to higher costs (paying for seat licenses or access to software libraries, for example) or even worse, without the ability to source an application or address a technical issue at all if it’s not something the solution’s owner wants to commit resources to developing or fixing.

What does HPCC Systems have planned for the future of the platform?

We’re developing a cloud-based implementation of HPCC Systems. Cloud-based datacenters are very popular with enterprises, as they allow for more granular control over the compute and storage resources an enterprise is using at any given moment. This helps IT teams avoid capex pitfalls like hardware overprovisioning and overstaffing. That said, data in transit is naturally more at risk of being hacked or stolen. So, we’re working hard to ensure HPCC Systems for the cloud supports hardened security, end-to-end encryption, authentication, authorization, and other important security measures. The goal is to deliver state-of-the-art security features that protect customer data in the cloud, while still providing the excellent data management and analysis performance we’ve seen in on-premises deployments of HPCC Systems.

Where should readers go to learn more about HPCC Systems?

Please visit us at www.hpccsystems.com. The site contains all kinds of documentation about how HPCC Systems works and a host of other resources, including a wiki, case studies, whitepapers, and training options (online, video, and in-person), as well as our community portal where interested parties can connect with members of our growing online community of HPCC Systems users and developers.

…………………………………………………………………………………………………………………………………………………….

Save the Date! Please join us October 2-5, 2023 for the annual HPCC Systems open source community Technology Summit.

We are excited to announce the 10th annual HPCC Systems Community Summit will once again be held virtually this October! This year’s event is free to attend and open to all users of HPCC Systems throughout RELX and the broader open source community. This worldwide event will return to offer plenary and breakout sessions covering a wide variety of topics, a high-quality virtual workshop, as well as presentations and technical posters from students working on HPCC Systems-related projects. The purpose of the Summit is to gather engineers, data scientists, and technology professionals to share knowledge and future roadmap plans for the HPCC Systems platform. This event is dedicated to showcasing our community and to having industry and academia present their HPCC Systems use cases and research projects and share their experience of how they leverage the HPCC Systems platform. https://hpccsystems.com/community/events/hpcc-systems-summit-2023/