Enterprise Information Extraction

BY Yunyao Li, Research Manager, Scalable Natural Language Processing (SNaP) Group, IBM Research – Almaden

Information extraction (IE) refers to the task of extracting structured information from unstructured or semi-structured machine-readable documents. It has been a well-known task in the natural language processing community for a few decades. The recent rise of Big Data analytics has reignited interest in IE, a foundational technology for a wide range of emerging enterprise applications. This article explains the role of IE in Big Data analytics and the practical challenges posed by enterprise applications, and shares our experience and lessons learned in building an enterprise information extraction system.

Big Data Analytics: Case Studies

We first introduce two emerging enterprise applications made possible by enterprise IE.

Data-Driven CRM. The ubiquity of user-created content, particularly on social media, has opened up new possibilities for a wide range of CRM applications. IE over such content, in combination with internal enterprise data (such as product catalogs and customer call logs), enables enterprises to understand their customers to an extent never possible before. Beyond the demographic information of individual customers, IE can extract important information from user-created content and allow enterprises to build detailed customer profiles. Such profiles can include customers’ opinions towards a brand, product, or service; their product interests (e.g. “Buying a new car tomorrow!” indicates an intent to buy a car); important life events (e.g. “I am happy to say that I am now engaged” indicates an engagement event); and their social relationships (e.g. “Cannot wait to watch Star Wars with my son tonight” implies a parent-son relationship), among many other things. Such comprehensive customer profiles allow the enterprise to manage customer relationships tailored to different demographics at fine granularity (e.g. comic fans in the Midwest) or even to individual customers. For example, a credit card company can offer special incentives to customers who have indicated plans to travel abroad in the near future, encouraging them to use the company’s credit cards while overseas.
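The three example posts above can be turned into profile signals with a very small rule set. The following is a minimal Python sketch, not AQL and not how SystemT implements it; the `Signal` type, the `PATTERNS` table, and the regular expressions are hypothetical and cover only these illustrative phrasings.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    kind: str   # type of profile signal, e.g. "purchase_intent"
    value: str  # the captured text that supports the signal
    start: int  # character offset where the captured text begins
    end: int    # character offset just past the captured text


# Hypothetical patterns for illustration only; real extractors need
# far broader coverage and linguistic features.
PATTERNS = {
    "purchase_intent": re.compile(
        r"\bbuying (?:a|an) (?:new )?(\w+)", re.IGNORECASE),
    "life_event": re.compile(
        r"\b(?:i am|i'm) (?:now )?(engaged|married)\b", re.IGNORECASE),
    "relationship": re.compile(
        r"\bwith my (son|daughter|wife|husband)\b", re.IGNORECASE),
}


def extract_signals(text):
    """Return every signal found in one post, with character offsets."""
    signals = []
    for kind, pattern in PATTERNS.items():
        for m in pattern.finditer(text):
            signals.append(
                Signal(kind, m.group(1).lower(), m.start(1), m.end(1)))
    return signals
```

Calling `extract_signals("Buying a new car tomorrow!")` yields a single `purchase_intent` signal whose value is `car`. Keeping the offsets alongside each value is a deliberate choice: it lets a downstream step show exactly which part of the post supports the signal.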

Machine Data Analytics. Modern enterprise software consists of multi-tier applications with multiple interconnected components, each of which continuously produces its own system log data. As a result, the traditional log analysis practice of manually reviewing log files on a weekly or daily basis is far from adequate, as log data is generated at a rate and volume beyond what humans can easily analyze. Using IE over machine-generated log data, it is possible to automatically extract individual pieces of information from each log record and piece them together into information about individual application sessions. Such session information permits advanced analytics over machine data, such as root cause analysis and machine failure prediction.
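The idea of extracting fields from each log record and piecing them together into sessions can be sketched in a few lines of Python. The log format, the field names, and the helper functions below are assumptions made for illustration; real logs vary by component and usually need one extractor per format.

```python
import re
from collections import defaultdict

# Hypothetical log format, assumed for illustration:
#   <timestamp> <component> session=<id> <LEVEL> <message>
LOG_LINE = re.compile(
    r"^(?P<ts>\S+) (?P<component>\S+) session=(?P<session>\S+) "
    r"(?P<level>[A-Z]+) (?P<message>.*)$"
)


def parse_record(line):
    """Extract the fields of one log record, or None if it does not parse."""
    m = LOG_LINE.match(line)
    return m.groupdict() if m else None


def build_sessions(lines):
    """Group parsed records by session id, ordered by timestamp."""
    sessions = defaultdict(list)
    for line in lines:
        record = parse_record(line.strip())
        if record is not None:  # silently skip malformed lines
            sessions[record["session"]].append(record)
    for records in sessions.values():
        # ISO-8601 timestamps in one format sort correctly as strings.
        records.sort(key=lambda r: r["ts"])
    return dict(sessions)


def failed_sessions(sessions):
    """Return the ids of sessions containing at least one ERROR record."""
    return sorted(
        sid for sid, records in sessions.items()
        if any(r["level"] == "ERROR" for r in records)
    )


LOGS = [
    "2016-03-01T10:00:01 db session=a1 ERROR connection timeout",
    "2016-03-01T10:00:00 web session=a1 INFO request received",
    "2016-03-01T10:00:02 web session=b2 INFO request received",
    "malformed line",
]
```

With the sample `LOGS`, `failed_sessions(build_sessions(LOGS))` returns `["a1"]`. The per-session record list is the kind of structure on which root cause analysis could start, since it shows which component logged the error and what preceded it across tiers.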

Big Data Analytics: Requirements for IE

Besides the traditional requirement for accuracy, emerging big data analytics applications such as the ones mentioned above pose several new challenges to enterprise-scale IE systems.

Scalability. Enterprise IE needs to scale with large datasets. For instance, on Twitter alone, an average of 500 million new tweets are created per day; SEC/FDIC filings contain tens of millions of large documents; and a medium-size data center produces tens of terabytes of system logs on a daily basis. This is before counting other types of user-created content, public data, and proprietary data such as call-center logs.
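To put these daily volumes in terms of sustained throughput, the short Python calculation below converts them to per-second averages. It assumes 10 TB per day as the low end of "tens of terabytes" and uses decimal units; peak rates would be higher than these averages.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def per_second(per_day):
    """Convert a daily volume into an average per-second rate."""
    return per_day / SECONDS_PER_DAY


# 500 million tweets per day, the figure quoted above.
tweets_per_second = per_second(500_000_000)

# Assumed lower bound for "tens of terabytes": 10 TB/day (1 TB = 10**12 bytes).
log_bytes_per_second = per_second(10 * 10**12)

print(f"{tweets_per_second:,.0f} tweets/s")
print(f"{log_bytes_per_second / 1e6:,.1f} MB/s of logs")
```

On average, then, an extractor would have to keep up with roughly 5,800 tweets per second, or more than 100 MB of log data per second, before any headroom for bursts.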

Expressivity. Enterprise IE needs to scale with the complexity of extraction tasks. Different enterprise applications require different extraction tasks, varying from traditional natural language processing tasks such as entity extraction and sentiment analysis to more practical challenges such as PDF processing and table extraction. Document formats can vary greatly as well, from plain text such as blogs to semi-structured data such as HTML files converted from PDF files. The length of the documents can also vary, from short documents such as tweets of no more than 140 characters to long documents such as SEC/FDIC financial filings with hundreds of pages. The input documents typically contain various degrees of noise, from human-introduced noise such as typos, grammatical errors, and acronyms (e.g. in tweets or call-log transcripts) to systematic errors such as those resulting from PDF conversion.

Transparency. Enterprise IE needs to be transparent and easy to comprehend, debug, and enhance, so that IE development does not become the bottleneck in building the aforementioned enterprise applications. To speed up the development cycle and lower the barrier to entry, it is much more desirable to build IE programs with high-level declarative languages such as AQL or easy-to-use visual languages than to require hundreds or thousands of lines of code for each extractor. Tools are also needed for debugging and maintaining IE programs. Furthermore, as the underlying data or application requirements change, existing IE programs should be easy to enhance and adapt.

SystemT for Enterprise IE

SystemT started as a research project at IBM Research – Almaden in 2006. It has been designed to satisfy the above requirements by leveraging our experience with databases, natural language processing, machine learning, and human-computer interaction.

At a high level, SystemT consists of the following two major parts:

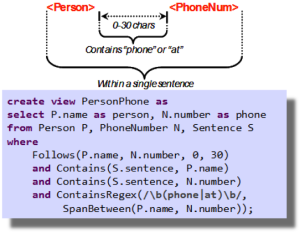

Development Environment. The development environment provides facilities for users to construct and refine information extraction programs (i.e. annotators or extractors). The extractors are specified in a declarative language called AQL (Annotation Query Language). The low-level primitives in SystemT include basic operations such as regular expressions and dictionaries, as well as more complex linguistic features such as part-of-speech tags.

As shown in the example statement below, the syntax of AQL is very similar to SQL.

The familiarity of many software developers with SQL helps them in learning and using AQL. An IE developer can learn to write extractors in AQL, take full advantage of the language, and develop the extractors in a professional IDE [2, 3, 5]. She can also choose not to learn AQL at all and instead construct and refine extractors through a visual programming environment such as VINERy [6], whose visual language is automatically translated into AQL.

The development environment also provides facilities for compiling the extractors into an algebraic expression and for visualizing the results of executing the extractors over a corpus of representative documents. Once a user is satisfied with the results that her extractors produce, she can publish her extractors by feeding them into the Optimizer, which compiles them into an optimized algebraic expression.

Runtime Environment. Given a compiled extractor, the Runtime Environment (“Runtime” for short) instantiates and executes the corresponding physical operators. It is usually embedded inside the processing pipeline of a larger application. The Runtime has a document-at-a-time execution model: it receives a continuous stream of documents, annotates each document, and outputs the annotations for further application-specific processing. The source of the document stream depends on the overall application.
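The document-at-a-time model described above can be illustrated with a Python generator. This is a conceptual sketch only, not the SystemT Runtime API; `phone_extractor` and `run` are hypothetical names, and the regular expression stands in for a compiled extractor.

```python
import re
from typing import Callable, Iterable, Iterator, List, Tuple

# An annotation is (type, start offset, end offset, covered text).
Annotation = Tuple[str, int, int, str]
Extractor = Callable[[str], List[Annotation]]


def phone_extractor(text: str) -> List[Annotation]:
    """Stand-in for a compiled extractor: finds NNN-NNNN phone numbers."""
    return [
        ("Phone", m.start(), m.end(), m.group())
        for m in re.finditer(r"\b\d{3}-\d{4}\b", text)
    ]


def run(
    extractor: Extractor,
    documents: Iterable[Tuple[str, str]],
) -> Iterator[Tuple[str, List[Annotation]]]:
    """Annotate one (doc_id, text) pair at a time.

    Nothing is buffered across documents, so memory use is bounded by
    the largest single document rather than by the size of the stream.
    """
    for doc_id, text in documents:
        yield doc_id, extractor(text)


stream = [("d1", "call 555-1234"), ("d2", "no number here")]
for doc_id, annotations in run(phone_extractor, stream):
    print(doc_id, annotations)
```

Because each document is processed independently, the same loop can be fed from a file, a message queue, or one partition of a cluster job, which is what makes this style of runtime easy to embed in a larger pipeline.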

The Optimizer and Runtime components of SystemT are based on an operator algebra that we have specifically developed for information extraction [8].

SystemT: Advantages

Let’s revisit the requirements discussed above and see how SystemT helps address them:

Scalability. The SystemT Optimizer produces optimized execution plans using both rewrite-based and cost-based optimization techniques. The SystemT Runtime is designed to execute extraction operations efficiently with a low memory footprint. The combination of the two ensures high-performance execution of extractors over individual documents. For instance, for the named-entity recognition task, the throughput of SystemT is at least an order of magnitude higher than that of state-of-the-art systems, while consuming much less memory. In addition, the SystemT Runtime is a small and compact Java program that is highly embeddable, allowing it to exploit the full power of a large cluster via Hadoop or Spark.

Expressivity. AQL enables developers to write extractors in a compact manner. A few AQL statements may express complex extraction semantics that would otherwise require hundreds or thousands of lines of code. We have formally proved that the class of extraction tasks expressible in AQL is a strict superset of that expressible through cascaded regular automata. We have also empirically demonstrated that it is possible to build extractors that perform comparably to, if not better than, state-of-the-art systems.

Furthermore, functionality not yet available natively in AQL can be implemented via User Defined Functions (UDFs). For instance, developers can leverage AQL to extract complex features for statistical machine learning algorithms (e.g. named entities), and in turn embed the learned models (e.g. document classification) back into AQL.

Transparency. As a declarative language, AQL allows developers to focus on what to extract rather than how to extract it. It enables developers to write extractors in a much more compact manner, with better readability and maintainability. Since all operations are declared explicitly, it is possible to trace a particular result and understand exactly why and how it was produced, and thus to correct a mistake at its source. Moreover, significant research effort has gone into leveraging machine learning and human-computer interaction to develop cognitive assistants for extractor development, debugging, deployment and maintenance (e.g. [1–7, 9, 10]).
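The ability to trace a result back to the operations that produced it can be sketched as follows. This Python example is only an illustration of the general idea of provenance tracking, not SystemT's mechanism; the `Span` type, the rule names, and the tiny name dictionary are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Span:
    rule: str    # name of the rule that produced this span
    start: int
    end: int
    text: str
    inputs: Tuple["Span", ...] = ()  # spans this one was derived from


FIRST_NAMES = ("alice", "bob")  # hypothetical dictionary


def first_names(text):
    """Dictionary rule: match any entry of FIRST_NAMES, ignoring case."""
    pattern = r"\b(" + "|".join(FIRST_NAMES) + r")\b"
    return [Span("FirstNameDict", m.start(), m.end(), m.group())
            for m in re.finditer(pattern, text, re.IGNORECASE)]


def phones(text):
    """Regex rule: match NNN-NNNN phone numbers."""
    return [Span("PhoneRegex", m.start(), m.end(), m.group())
            for m in re.finditer(r"\b\d{3}-\d{4}\b", text)]


def person_phones(text, max_gap=20):
    """Combine a name followed within max_gap characters by a phone."""
    results = []
    for n in first_names(text):
        for p in phones(text):
            if 0 <= p.start - n.end <= max_gap:
                results.append(Span("PersonPhone", n.start, p.end,
                                    text[n.start:p.end], (n, p)))
    return results


def explain(span, depth=0):
    """List the rule behind a span and, indented, the spans it came from."""
    lines = ["  " * depth + f"{span.rule}: {span.text!r}"]
    for child in span.inputs:
        lines.extend(explain(child, depth + 1))
    return lines
```

For the input `"Call Alice at 555-1234 today"`, calling `explain` on the single `PersonPhone` result lists the combining rule first, followed by the dictionary match and the regex match it was built from. If the result is wrong, this trace points at the specific rule or dictionary entry to fix.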

SystemT: What’s Next

SystemT is at the core of multiple IBM products and powers many customer solutions, ranging from CRM, computational marketing, brand management, and compliance to risk analysis and drug discovery. We are actively working on enhancing its core capabilities (e.g. multilingual support) as well as building better development tooling. We are also developing novel enterprise applications and services to take full advantage of SystemT.

One major focus of our future work is to empower more people, particularly students, to learn and use information extraction to solve real-world tasks. As part of this effort, we have created and taught a graduate-level course that has been adapted for use in multiple university courses.

You can learn more about our ongoing research and education efforts on our website.

[1] Laura Chiticariu, Yunyao Li and Frederick Reiss. Transparent Machine Learning for Information Extraction: State-of-the-Art and the Future. EMNLP (Tutorial), 2015

[2] Laura Chiticariu, Vivian Chu, Sajib Dasgupta, Thilo W Goetz, Howard Ho, Rajasekar Krishnamurthy, Alexander Lang, Yunyao Li, Bin Liu, Sriram Raghavan. The SystemT IDE: an integrated development environment for information extraction rules. SIGMOD, 2011

[3] Yunyao Li, Laura Chiticariu, Huahai Yang, Frederick Reiss, Arnaldo Carreno-Fuentes. WizIE: A Best Practices Guided Development Environment for Information Extraction. ACL, 2012

[4] Yunyao Li, Vivian Chu, Sebastian Blohm, Huaiyu Zhu, Howard Ho. Facilitating pattern discovery for relation extraction with semantic-signature-based clustering. CIKM, 2011

[5] Yunyao Li, Frederick Reiss, Laura Chiticariu. SystemT: A Declarative Information Extraction System. ACL, 2011

[6] Yunyao Li, Elmer Kim, Marc A. Touchette, Ramiya Venkatachalam, Hao Wang. VINERy: A Visual IDE for Information Extraction. PVLDB 8(12): 1948-1959, 2015

[7] Bin Liu, Laura Chiticariu, Vivian Chu, H. V. Jagadish, Frederick Reiss. Refining Information Extraction Rules using Data Provenance. IEEE Data Eng. Bull. 33(3): 17-24, 2010

[8] Frederick Reiss, Sriram Raghavan, Rajasekar Krishnamurthy, Huaiyu Zhu, Shivakumar Vaithyanathan. An algebraic approach to rule-based information extraction. ICDE, 2008

[9] Sudeepa Roy, Laura Chiticariu, Vitaly Feldman, Frederick Reiss, Huaiyu Zhu. Provenance-based dictionary refinement in information extraction. SIGMOD, 2013

[10] Huahai Yang, Daina Pupons-Wickham, Laura Chiticariu, Yunyao Li, Benjamin Nguyen, Arnaldo Carreno-Fuentes. I can do text analytics!: designing development tools for novice developers. CHI, 2013