DataSelf

By Wilco van Ginkel (wvg@seculior.com)

26 July 2017

Introduction

In this article, we discuss the concepts of DataSelf, Verifiable Trust, and Trust Brokers. It is a summary of our paper ‘DataSelf & Me – Musings on (personal) data privacy, protection & ownership – V2’, which discusses these concepts in more detail, including ideas on possible implementations. You can find the paper here.

Datafication

We live in a data-driven society, and it is here to stay. However, people increasingly fear that too much of their (personal) data is being collected and used without their consent. The lack of transparency about what is actually done with their data, who has access to it, when, and from where, adds more fuel to this fear. This is especially true as we become more datafied (i.e., aspects of our (physical) world are rendered into data, like GPS coordinates for location-aware services or our Facebook “likes”).

Not all data is created equal, however, and we can group data into three types:

- Volunteered Data (VD) – Created and explicitly shared by individuals (e.g., social network profiles).

- Observed Data (OD) – Captured by recording the actions of individuals (e.g., location data when using cell phones).

- Inferred Data (ID) – Data about individuals based on analysis of volunteered or observed information (e.g., credit scores).

The level of datafication also differs between the data types: VD is the least datafied, OD sits somewhere in the middle, and ID is the most datafied. The consequence of becoming more datafied is that we lose control of our data: we have the most control over VD and the least over ID.

DataSelf

DataSelf is about putting YOU (Self) back in control of your DATA. A few (abstract) characteristics of DataSelf are:

- Secure personal data repository: your data lives only in your repository, not in 1,000+ databases.

- The data is consumable through a well-defined, easy-to-use interface.

- Access to the data is fine-grained and automatically scalable.

- Response-driven data flow: data access is only provided subject to an authorized response and to user-defined constraints.

Let’s discuss an example to make this more concrete: health care data. Assume you have a secure data repository with all your health care data. Access to this data is certainly not a ‘one-access-fits-all’ scenario. We might distinguish between your family MD, your health insurance company, and your pharmacist. When your family MD needs your health data, you might want to give full access, as you trust your family MD. However, when your insurance company needs your health data, you only provide access to the relevant subset for, say, a particular claim (e.g., your family MD’s prescription and your bank account information to get reimbursed). Finally, your pharmacist gets access to yet another subset, containing the data relevant to a particular prescription (e.g., data about your allergies and other drugs you take at the same time).
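To make the fine-grained access idea a little more tangible, here is a minimal sketch of a personal repository that releases only the fields a requester has been granted. It is an illustration under our own assumptions, not an implementation from the paper: the class `DataSelfRepository`, its `grant`/`request` methods, and all field names and values are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class DataSelfRepository:
    """Hypothetical personal data repository: the data lives only here, and
    each requester may read only the fields its user-defined policy allows."""

    records: dict[str, str]
    # Maps a requester (e.g. "insurer") to the set of fields it may read.
    policies: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, requester: str, fields: set[str]) -> None:
        """Set the user-defined constraint for one requester."""
        unknown = set(fields) - set(self.records)
        if unknown:
            raise KeyError(f"unknown fields: {sorted(unknown)}")
        self.policies[requester] = set(fields)

    def request(self, requester: str, fields: set[str]) -> dict[str, str]:
        """Release data only if every requested field is authorized."""
        allowed = self.policies.get(requester, set())
        denied = set(fields) - allowed
        if denied:
            raise PermissionError(f"{requester} may not read {sorted(denied)}")
        return {name: self.records[name] for name in sorted(fields)}


# Health care example from the text (field names and values are made up).
repo = DataSelfRepository(records={
    "diagnosis": "example diagnosis",
    "prescription": "example prescription",
    "allergies": "example allergy",
    "current_medication": "example drug",
    "bank_account": "example account",
})
repo.grant("family_md", set(repo.records))                 # full access
repo.grant("insurer", {"prescription", "bank_account"})    # claim subset
repo.grant("pharmacist", {"prescription", "allergies", "current_medication"})

claim_data = repo.request("insurer", {"prescription", "bank_account"})
```

Here the insurer receives only the prescription and bank account fields, while a request from the pharmacist for the bank account would raise a `PermissionError`. Real access control would of course also need authentication and encryption, which this sketch leaves out.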

Custodians & Verifiable Trust

DataSelf definitely comes with its own set of challenges, like:

- Decision making – Can every individual make a decision about his/her data all the time?

- Usability – How easy is it to make an informed decision about who should have access to your data, when, and how?

- Scalability – When implementing this on a larger scale, how do we ensure it is (automatically) scalable?

- Security – As DataSelf is all about data privacy, how do we actually (technically) protect the data?

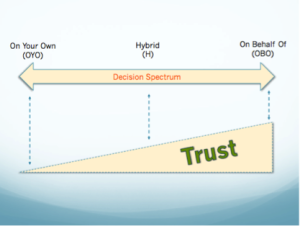

Custodians can assist us in the decision making process. A custodian can be a human or a machine (e.g., software agent) actor. The decision spectrum can be shown as follows:

On the left-hand side of the spectrum, we see ‘OYO’, which means that an individual is capable of making his/her own DataSelf decisions. On the right-hand side, we see ‘OBO’, which requires a fair amount of trust, given that DataSelf decisions are made by a custodian. In the middle, we find ‘H’, a combination of ‘OYO’ and ‘OBO’, meaning that in some situations an individual makes his/her own DataSelf decisions (‘OYO’), while in other situations he/she lets a custodian make them (‘OBO’). Clearly, ‘OBO’ requires more trust than ‘OYO’. The motto is “Trust, but verify”. Therefore, we need to develop Verifiable Trust, with characteristics like:

- Privacy by design

- Transparency

- Enforceability
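The hybrid (‘H’) position on the spectrum can be sketched as a per-context choice between deciding yourself (‘OYO’) and delegating to a custodian (‘OBO’), with every decision recorded so it can be verified afterwards. This is a minimal illustration of the transparency aspect only; `HybridDecider`, the context names, and the audit-log format are hypothetical, and enforcement is not modeled.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable


class Mode(Enum):
    OYO = "individual decides"
    OBO = "custodian decides"


@dataclass
class HybridDecider:
    """Hypothetical 'H' setup: per context, either the individual (OYO) or a
    custodian (OBO) decides; every decision is logged so it can be verified."""

    ask_individual: Callable[[str, str], bool]
    custodian: Callable[[str, str], bool]
    modes: dict[str, Mode] = field(default_factory=dict)
    audit_log: list[tuple[str, str, str, bool]] = field(default_factory=list)

    def decide(self, context: str, requester: str) -> bool:
        mode = self.modes.get(context, Mode.OYO)  # default: decide yourself
        decider = self.ask_individual if mode is Mode.OYO else self.custodian
        granted = decider(context, requester)
        # Transparency: record who decided what, so OBO decisions can be checked later.
        self.audit_log.append((context, requester, mode.name, granted))
        return granted


# Illustrative policies; in practice these would reflect the user's own choices.
h = HybridDecider(
    ask_individual=lambda ctx, who: who == "family_md",
    custodian=lambda ctx, who: who in {"insurer", "pharmacist"},
    modes={"health/claims": Mode.OBO},
)
first = h.decide("health/records", "family_md")   # decided OYO
second = h.decide("health/claims", "insurer")      # decided OBO
```

Defaulting to ‘OYO’ for contexts without an explicit setting is a design choice of this sketch: delegation has to be opted into, and the audit log gives the individual something concrete to verify.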

When talking about custodians, an immediate question is: how many custodians do we need? We don’t want to end up with a specific custodian for every decision, as that is unmanageable. Nor do we want a single custodian making every decision regardless of context, as some contexts require a certain level of expertise to make a decision. We have to keep the number of custodians manageable and scalable.

Trust Brokers

Instead of having to deal with (a lot of) individual custodians, we can select a few Trust Brokers. They do the hard labor of verifying the trustworthiness of the different custodians on our behalf. In that sense, you can think of a Trust Broker as a special kind of custodian. An important remark is that a Trust Broker doesn’t resolve the underlying fundamental problem: we also need to verify the trustworthiness of the Trust Broker itself (“Who is policing the police?”). But if we limit ourselves to just a few Trust Brokers, which in turn verify the trust relations with all the custodians on our behalf, the scope and scale become much more manageable. Based on a pre-defined trust level, a Trust Broker could advise us to use custodian X for a certain task, and we can follow its advice (or not). We can even put more trust in the Trust Broker and let it act on our behalf (e.g., selecting custodians, or acting as a decision-making proxy towards a custodian to render a service).
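The two roles of a Trust Broker described above, advising on a custodian based on a pre-defined trust level or acting as a proxy, can be sketched as follows. This is only an illustration under stated assumptions: the `TrustBroker` class, the 0.0 to 1.0 trust scale, and the custodian names are hypothetical, and how the broker actually verifies a custodian is deliberately left out.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TrustBroker:
    """Hypothetical Trust Broker: holds trust scores it has verified for
    custodians and recommends a custodian whose score meets a pre-defined level."""

    min_trust: float  # pre-defined trust level on an assumed 0.0-1.0 scale
    # custodian name -> (tasks it can handle, verified trust score)
    custodians: dict[str, tuple[set[str], float]] = field(default_factory=dict)

    def register(self, name: str, tasks: set[str], verified_score: float) -> None:
        """Record a custodian once the broker has verified it (verification not modeled)."""
        self.custodians[name] = (set(tasks), verified_score)

    def advise(self, task: str) -> Optional[str]:
        """Advisory mode: suggest the most trusted eligible custodian, or None."""
        candidates = [
            (score, name)
            for name, (tasks, score) in self.custodians.items()
            if task in tasks and score >= self.min_trust
        ]
        return max(candidates)[1] if candidates else None

    def act(self, task: str, request: str) -> str:
        """Proxy mode: the broker selects the custodian and forwards the request."""
        chosen = self.advise(task)
        if chosen is None:
            raise LookupError(f"no sufficiently trusted custodian for {task!r}")
        return f"{chosen} handled {request!r}"


broker = TrustBroker(min_trust=0.8)
broker.register("custodian_x", {"health"}, 0.9)
broker.register("custodian_y", {"health", "finance"}, 0.7)   # below threshold
broker.register("custodian_z", {"finance"}, 0.85)
```

With these example scores, `broker.advise("health")` suggests `custodian_x`, which we are free to ignore, whereas `broker.act(...)` makes the same choice and forwards the request itself. Note that the sketch simply trusts the broker’s scores, which is exactly the “who polices the police” problem the text points out.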