The Prominence of Generative AI in CyberSecurity

By Ramesh Chitor, Ramasubramanian Ramani, Deepti Krishnan Iyer

Abstract This paper describes best practices to scale your CyberSecurity Practice with Generative AI technologies, leveraging foundational models and Active Learning. We term this Active Learning CyberOps, with AI and human experts working together. We describe how an enterprise can build customized foundational models (student models) using its own data, with minimal prompt engineering against publicly available models, and thus detect cyber attacks much earlier. Further, it can simulate cyber attacks using synthetic data generation and use the results to improve its custom models and its response automation tools and processes. We apply enterprise best practices for deployment and showcase how this can help customers centralize visibility and control, detect targets, and improve security across their entire platform.

Keywords: CyberSecurity, Artificial Intelligence (AI), Enterprise Security, Foundational Models, Large Language Models (LLMs)

1 Introduction

The rapid rise of AI technologies and the emergence of disruptive products like ChatGPT can be attributed to the exponential growth of data generated by a multitude of devices, across modalities such as voice, video, and text. This has led to the rapid development of models such as GPT-4, LLaMA, and PaLM. This is the era of Generative AI.

With readily available GPUs and Tensor Processing Units (TPUs), open-source software that bolsters data processing and AI-related work, and AI-based coding assistants powered by large language models (LLMs), anyone can now build a smart AI system.

It has become harder for enterprises to protect their key data assets, turning the burning problem of Cybersecurity into a complex nightmare.

How do we provide the guardrails for protecting consumers and enterprises from cybersecurity criminals?

In this paper, we describe a new approach that uses generative AI and self-supervised learning (no need for labeled data) with human experts in the loop to proactively prevent attacks. With repeated iterations using foundation models (images, voice, videos) and human experts working hand in hand, we stay ahead of the bad actors. We term this Active Learning CyberOps. We showcase advanced security use-cases within enterprises and describe techniques for Threat Detection, Threat Simulation and Response Automation. For simulation, we apply generative AI to generate synthetic data and use it to benchmark results; we propose extending the ‘Chaos Monkey’ framework to generate simulation datasets. For Detection and Response Automation, we describe building a customized student foundation model that is trained on an enterprise’s internal data sources and simulated data and deployed using infrastructure security best practices.

This document is appropriate for audiences at all levels.

2 State of the CyberSecurity and Artificial Intelligence Union

Enterprises, ISVs, Organizations and government entities worldwide are relentlessly innovating and finding better ways to safeguard data and protect against sophisticated bad actors when it comes to CyberSecurity. One of the biggest benefits of Artificial Intelligence (AI) is automating daily tasks to free up humans to focus on more creative or time-intensive work. AI can be used to create or increase the efficiency of scripts for use by cybersecurity engineers or system administrators. AI is an important tool that CyberSecurity practitioners can use to alleviate repetitive and mundane tasks, and it can also provide instructional and training aid for less experienced practitioners.

As cyberattacks get more and more sophisticated, Artificial Intelligence (AI) can help under-resourced security operations analysts stay ahead of threats. AI technologies such as machine learning, natural language processing, and Large Language Models (LLMs) provide rapid insights that cut through the noise of thousands of daily alerts, drastically reducing response times for incident response units.

Generative AI methods and technology have the potential to revolutionize the software development process by automating mundane tasks and introducing new automation methods. By leveraging generative AI, software developers can reduce the time it takes to develop software while also introducing new concepts and ideas that can be used to create innovative solutions. Generative AI can also help to identify problems that may arise during the software development process and get to their root cause, allowing for quicker turnaround times, fewer errors and enhanced automation.

With CyberSecurity being top of mind, and security principles being applied during the early design stage of software, one of the big benefits is secure application development. AI can also help with application monitoring and error eradication with a very low rate of false positives. In our efforts, we trained AI on the right set of data to identify patterns and derive conclusions much faster. CyberSecurity practitioners who are inundated with tools and have very little time at their disposal can leverage a similar approach with LLMs to get to their result ever so quickly.

This is where User and Entity Behavior Analytics (UEBA) also comes in very handy. UEBA is a cybersecurity solution that uses algorithms and machine learning to detect anomalies in the behavior of not only the users in a corporate network but also the routers, servers, and endpoints in that network. UEBA, as used in our efforts, has some Machine Learning (ML) components. Not every detection relies on them, however: proactive identity detections, for example, involve large-scale distributed processing of streaming events, and for these some of our other UEBA techniques are more accurate and relevant than AI/ML.
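As a minimal sketch of the baseline-and-deviation idea behind UEBA, the Python snippet below flags an entity whose current activity departs sharply from its own history. The function name, the z-score threshold of 3.0, and the sample login counts are illustrative assumptions and are not taken from the system described in this paper.

```python
from statistics import mean, stdev

def is_anomalous(history, current, threshold=3.0):
    """Return True if `current` deviates from the baseline in `history`
    by more than `threshold` standard deviations (a simple z-score test)."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat baseline: any change at all is unusual.
        return current != mu
    return abs(current - mu) / sigma > threshold

# Hypothetical daily login counts for one user account.
baseline = [12, 15, 11, 14, 13, 12, 16]
print(is_anomalous(baseline, 14))  # a value within the usual range
print(is_anomalous(baseline, 90))  # a value far outside the usual range
```

The same check applies to any per-entity metric (bytes sent by a router, logins per server), which is what distinguishes UEBA from purely user-centric monitoring.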

For an organization, building its own LLM on a large corpus of public and private data will be prohibitively expensive. If we can instead train a domain-specific language model on the needed data at a fraction of the cost and an order of magnitude faster, LLMs and Generative AI models can take on a prominent role, especially in Identity Threat Detection and Response (ITDR). We can look at use cases where bringing data to the AI is beneficial and vice versa. Building and leveraging LLMs for domain-specific use cases in Banking, Financials, Healthcare and Insurance can help CyberSecurity practitioners in these areas train their staff and augment their solutions in short order.

The world needs AI research now more than ever to solve real problems with real solutions. AI is both a boon and a bane, since it is available to enterprises, researchers, businesses and cybercriminals alike. Several, if not all, credit card corporations leverage AI to fight cybercrime and prevent fraud proactively. AI’s role in incident response, asset management and intrusion detection is gaining practical relevance in several financial, insurance and banking use cases. When it comes to AI aiding and augmenting defensive CyberSecurity practices, the key is a constant and proactive approach to detecting and flushing out threats. As an example, rather than running penetration tests four times a year, we need continuous, real-time penetration testing. Several cybersecurity advisory organizations, as well as the National Institute of Standards and Technology (NIST), provide guidance along these lines. If we adopt continuous monitoring and perform assessments in real time as part of a risk assessment framework, we are better placed to defend against both known and unknown threats.

AI can directly help several CyberSecurity solutions protect against cyberattacks, data breaches, and data and network intrusion. By eliminating, or at least largely reducing, human error, an organization improves its reputation and, in most cases, its recovery time after a breach. Unauthorized user access can be prevented by securing Active Directory and enterprise identities, which also supports regulatory compliance.

In cyber security, artificial intelligence proves to be beneficial as it improves the way security experts analyze, study, and understand cybercrime. It improves the technologies that companies use to combat cybercriminals and helps enterprises keep customer data safe. On the other hand, AI can be resource-intensive and may not be practical for every application.

It is nearly impossible for humans to identify and block all the threats faced by an enterprise, because every year attackers find new ways to launch different types of attacks, each with a distinct objective. The Log4j vulnerability, for example, had been present in the library for years but went unnoticed until it was publicly disclosed in December 2021. New and unknown threats of this kind can cause massive damage to the network and have a deep impact on the organization if they are not detected, identified, and prevented. This is why it is very important for all entities, enterprises, and research organizations to have an ITDR strategy. AI can assess systems far more quickly than cybersecurity personnel can, decreasing their workload and increasing problem-solving capacity by an order of magnitude: it identifies the weak points in computer systems and business networks and frees businesses to focus on more important security-related tasks. This makes it possible to manage vulnerabilities and secure business systems in real time.

It is therefore worth looking at the historical advances in AI that have helped in CyberSecurity use cases. For example, we can look at which solutions can be combined to make it compelling for enterprises to adopt a robust prevent-and-detect CyberSecurity strategy.

3 Historic AI Developments

The journey of AI traces back to the 1950s when Alan Turing introduced the concept of machines mimicking human intelligence. The Turing Test, designed to assess a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, human behavior, set the stage for what we today refer to as AI (Turing, 1950). This was the seed that sparked a revolution, the impact of which we continue to experience today.

The Dartmouth Conference in 1956 marked the official birth of AI as a distinct field of research. The pioneering figures, John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, put forth the assertion that “every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it” (McCarthy et al., 1955). This idea laid the groundwork for the field. In the same period, McCarthy invented Lisp, which became the favored programming language for AI, chiefly because of its strengths in symbolic computation. Concurrently, heuristic problem-solving came into existence with the development of the first AI program, the Logic Theorist, by Herbert Simon and Allen Newell.

In the years that followed, AI researchers made significant strides in natural language processing (NLP), a critical component of the modern generative AI that stands central to our cybersecurity approaches. A milestone was achieved with the development of ELIZA by Joseph Weizenbaum at the MIT AI lab in the mid-1960s, a computer program capable of processing natural language and simulating a psychotherapist. This work emphasized the capacity for machines to understand and respond to human language, thereby broadening the potential scope of AI applications in various fields, including cybersecurity (Weizenbaum, 1966).

With the proliferation of the internet, the significance of cybersecurity soared. The 1990s and 2000s saw a paradigm shift in the application of AI to cybersecurity, with spam filtering, intrusion detection, and virus scanning becoming the mainstay applications. During the 1990s, Bayesian networks, a type of generative model, gained traction in AI research due to their capability to manage uncertainty and model complex variable interactions. These models found use in cybersecurity for probabilistic risk assessment and intrusion detection, marking generative AI’s initial application in the sector (Kotenko and Stepashkin, 2006).

The 2000s observed machine learning techniques’ marked ascendancy in cybersecurity, mainly in anomaly detection and spam filtering. Generative models like naive Bayes classifiers became popular for their simplicity and efficacy. The recent decade witnessed significant advancements in AI, punctuated by the introduction of deep learning and Generative Adversarial Networks (GANs) by Goodfellow et al. in 2014. As a generative model type, GANs can create new data instances similar to the training data and have found myriad applications in cybersecurity, such as generating synthetic phishing emails for training, creating realistic network traffic data for testing intrusion detection systems, and simulating diverse types of cyber-attacks (Goodfellow et al., 2014).

Recently, Large Language Models (LLMs), such as GPT-3, have seen increased application in cybersecurity. Their use in generating realistic phishing emails, automating custom security responses, and assisting in writing security protocols signifies the enhanced capabilities of generative AI in cybersecurity. With the ongoing development of Large Language Models (LLMs) and their increasing application in cybersecurity, we stand on the precipice of a new era in which generative AI plays a pivotal role in maintaining our digital safety and security.

In the realm of cybersecurity, leading research institutions and industry pioneers have been actively exploring the integration of Large Language Models (LLMs) to advance the field. Google, a global technology giant, has emerged as a frontrunner in leveraging LLMs for cybersecurity research and development. The company’s extensive work in this domain spans various areas, including threat detection, anomaly analysis, and response automation, contributing significantly to the advancement of generative AI technologies (Google AI). Google’s AI research division has been at the forefront of exploring the potential of LLMs in addressing cybersecurity challenges. The company’s researchers have conducted in-depth studies, employing rigorous research methodologies, to evaluate the effectiveness of LLMs in enhancing threat detection capabilities and automating response processes. By leveraging vast amounts of data and advanced machine learning techniques, Google has demonstrated the practical applications of LLMs in improving overall cybersecurity resilience (Google AI).

Carnegie Mellon University, known for its expertise in cybersecurity, has been at the forefront of exploring the potential of LLMs in addressing cybersecurity challenges. Through their renowned CyLab Security and Privacy Institute, researchers at Carnegie Mellon have conducted extensive studies and developed innovative approaches to leverage LLMs in various aspects of cybersecurity. One area of their research focuses on using LLMs for threat detection, where the models are trained to analyze vast amounts of data, including textual information from network logs, system alerts, and security incident reports. By applying advanced machine learning techniques and utilizing LLMs, Carnegie Mellon researchers have been able to enhance threat detection capabilities, improving the accuracy and efficiency of identifying potential cyber threats (Carnegie Mellon University).

Furthermore, Carnegie Mellon’s researchers have explored the integration of LLMs in response automation, aiming to streamline incident response processes and reduce response times. By training LLMs on historical incident response data and incorporating natural language understanding capabilities, the researchers have developed models that can generate automated responses to specific types of security incidents. These automated responses can help organizations swiftly mitigate threats, minimize the impact of security incidents, and improve overall cybersecurity resilience (Carnegie Mellon University).

Similarly, the University of Wisconsin-Madison’s Wisconsin Security Research Consortium has played a pivotal role in advancing the integration of LLMs into cybersecurity practices. The consortium’s researchers have conducted groundbreaking research, with a strong focus on real-world applications and addressing evolving cyber threats. Their studies have explored the potential of LLMs in enhancing threat detection capabilities by leveraging advanced natural language processing techniques. By training LLMs on large datasets of security-related textual information, including threat intelligence reports, security advisories, and incident response guidelines, the researchers have demonstrated how LLMs can effectively identify patterns, extract relevant insights, and provide valuable information for threat detection (University of Wisconsin-Madison). Additionally, the University of Wisconsin-Madison’s researchers have investigated the use of LLMs for response automation in cybersecurity. By developing customized LLM models and training them on historical incident response data, they have been able to automate certain aspects of incident response, such as generating preliminary incident reports, suggesting response actions, and providing context-aware recommendations. These advancements in response automation have the potential to significantly improve the efficiency and effectiveness of incident response processes, allowing organizations to respond swiftly to security incidents and mitigate potential damages (University of Wisconsin-Madison).

The integration of foundational models, like GPT-3 and its successors, has driven AI advancements in cybersecurity across diverse data modalities. These models excel not only in processing and generating text but also in analyzing and understanding images, videos, and voice data. By leveraging foundational models, organizations can enhance threat detection, automate incident response, and strengthen cybersecurity defenses across various data types, fortifying their ability to safeguard critical assets against evolving cyber threats.

These research institutions have been instrumental in pushing the boundaries of LLM applications in cybersecurity. Their studies, backed by rigorous research methodologies, have demonstrated the effectiveness of LLMs in enhancing threat detection capabilities, automating response processes, and improving overall cybersecurity resilience. By leveraging LLMs and advanced machine learning techniques, these institutions have paved the way for more proactive and adaptive cybersecurity approaches. The integration of LLMs in cybersecurity practices holds immense potential for organizations to effectively address emerging cyber threats and safeguard their digital assets.

Furthermore, industry leaders like Microsoft have made significant investments in leveraging LLMs to enhance cybersecurity. Microsoft’s research and development efforts have focused on utilizing LLMs to detect threats, analyze the behaviors of advanced persistent threats, and improve incident response capabilities (Microsoft AI). Their commitment to advancing the field of generative AI in cybersecurity underscores the potential and practicality of LLM integration in real-world scenarios.

The historical advancements in AI, coupled with the integration of LLMs in cybersecurity practices, have laid a solid foundation for enhancing threat detection, response automation, and overall cybersecurity posture. By leveraging the capabilities of LLMs and the insights gained from leading research institutions and industry pioneers, organizations can better protect their data, networks, and critical assets from evolving cyber threats. The ongoing development and application of LLMs in cybersecurity promises a future where generative AI technologies play a central role in maintaining digital safety and security.

4 Insufficiencies of existing AI solutions for CyberSecurity

AI-driven Cybersecurity systems, by definition, rely on machine learning algorithms that learn from historical data. However, this can lead to many false positives when the system encounters new, unknown threats that do not fit existing patterns. Also, most modern-day Cybersecurity systems lack coordination between private, public, government and research entities. During our research we found a glaring gap when it comes to including leading research organizations.

If cybercrime were measured as an economy, it would be the third largest in the world, after the United States and China. It is hard for smaller countries, SMBs and other small entities to take on the bad actors by themselves; hence the need to cooperate and work together as “one unit” to fight them. Careless errors committed by employees also expose entities to cyber risks. In protecting data privacy, the fewer people handling the data, the better. Creating the infrastructure for implementing AI in cybersecurity is neither easy nor cheap. It requires advanced technical skills of the kind usually found only among specialists. You may not be able to manage the AI technology yourself and may have to outsource it to third-party vendors, which adds further dimensions of risk. While the vendors managing your cybersecurity with AI technology may assure you of their confidentiality, you cannot simply take their word for it and need to ensure the highest levels of security on your side. Also, for compliance, make sure vendors cannot use your data for their own interests, outside your arrangements with them.

Learning and sharing together about threat vectors and vulnerabilities holds the key. Viewed from the cyber security standpoint, however, it can be risky in some cases to share vulnerabilities among industry partners, the public and private sectors, and the government. With AI being used in incredibly personal use cases such as credit scoring and housing, it becomes imperative to talk about it openly. What we need is a healthy balance between what is shared and what personal or private information is kept confidential. Collaboration between private and public stakeholders, with educational institutions as intermediary links, is one way to balance privacy and transparency. In our work we have shared bits and pieces of our project with CMU and Microsoft, for example, while making sure nothing proprietary or confidential is compromised. Leaders of organizations like IEEE and ACM, corporations, and government entities need to come together and build a culture that motivates individuals to operate ethically and to share challenges and the best practices that overcome them. The contemporary corporate practice of focusing single-mindedly on growth and leadership is insufficient, as we are failing as a group, a nation, and a world. No one entity has a monopoly on skills in AI for Cybersecurity. In fact, there is a dearth of qualified personnel in the marketplace today.

AI and machine learning algorithms can at times be prone to bias and discrimination, which has become a strong concern in cybersecurity in recent years. If the algorithms are trained on partial data or flawed assumptions, they may make incorrect decisions that can have serious consequences. For example, if a machine learning algorithm is trained to identify potential security threats based on a person’s physical characteristics, it may discriminate against individuals based on race, political view, or gender, which can have profound implications for fairness and justice. This is where we introduce Active Learning CyberOps, a novel method that combines the best of human intelligence and LLMs to proactively look for threats. We also need to find a way to break the chain for the bad actors, who currently have far too much incentive to keep breaking into systems. This problem is only going to get worse, and we may be just a few months or a year away from a global intrusion attack that has irrecoverable ramifications and turns into a major catastrophe. Geopolitical developments and the adoption of emerging AI technologies like ChatGPT have reshaped the cyber-threat potential of several organized attacker groups.

5 Active Learning CyberOps: Let us dig in

Cybersecurity is the only ‘science’ or tech field with a human adversary. Enterprises have specialized experts who have honed their skills over several years. In this section, we dig deeper into the novel approach of combining human expertise with foundational models for threat detection, threat simulation (to proactively prevent attacks) and fine-tuning of response automation. We explain how to build a ‘student model’ that is trained using the enterprise’s data, with minimal prompt engineering against publicly available models. The student model is thus customized and unique to the customer’s business problem. It learns via internal experiments (“self study”), via prompts from human experts, and via responses from other foundational models and LLMs. By running these experiments iteratively, with humans and AI working hand in hand, we stay ahead of the bad actors. This is Active Learning with Humans (ALH) in the loop. When we apply the concepts of ALH to Cybersecurity, we term it Active Learning CyberOps. For example, a company that has cybersecurity experts can capture their years of unique expertise in a model that can then be scaled to address different attack surface areas, helping the company run an efficient organization.

5.1 CyberOps today with Humans in the loop

CyberSecurity is hard and is a key item on every CIO’s roadmap. Enterprises are at different levels of expertise in this journey. Here, we present a canonical workflow showing how an enterprise with high-end specialists handles cyber security today:

- IT systems are monitored via an audit log framework; the generated logs are sent to a centralized system, where they are processed to detect spurious activity.

- The detection systems can be scripts, code, Machine Learning models trained on custom-built datasets or popular threat detection data, or specialized third-party software.

- When spurious activity is detected via an unusual pattern, alerts are raised for humans to monitor and take action (Response Automation).

- A post mortem is performed, the pattern detection logic is improved or the causes of the breach are fixed, and the updated software systems are deployed (~1 week to 4 weeks).

Such a system has worked well for structured and unstructured text. In network log monitoring, for example, the access patterns across known IP addresses within a company are known, and flags are raised for anything else. Today, however, cyber criminals can attack the network by taking over key systems, launch DDoS attacks, use email attachments for phishing, change a user’s profile picture or create doctored images, alter the video feed of a presentation, use the audio of a key leader to create malicious content, and so on.
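The known-IP style of network log monitoring mentioned above can be sketched as follows. This is a minimal illustration, not the workflow of any specific enterprise: the network ranges, the log line format (source IP first, then a space), and the function name are all assumptions made for the example.

```python
import ipaddress

# Hypothetical internal ranges; a real deployment would load these from configuration.
KNOWN_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("192.168.1.0/24"),
]

def flag_unknown_sources(log_lines):
    """Return log lines whose source IP is outside all known networks.
    Assumes each line starts with the source IP followed by a space."""
    flagged = []
    for line in log_lines:
        parts = line.split(maxsplit=1)
        if not parts:
            continue  # skip empty lines
        try:
            source = ipaddress.ip_address(parts[0])
        except ValueError:
            flagged.append(line)  # unparseable source: surface it for review
            continue
        if not any(source in net for net in KNOWN_NETWORKS):
            flagged.append(line)
    return flagged

logs = [
    "10.2.3.4 GET /payroll",
    "203.0.113.7 POST /admin/login",
    "192.168.1.20 GET /wiki",
]
for entry in flag_unknown_sources(logs):
    print("ALERT:", entry)
```

A rule like this works only because the expected pattern can be written down in advance, which is exactly what stops being true for images, video and audio.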

5.2 Active Learning CyberOps: Threat Detection

Foundational Models (of which LLMs are the subset that deals with text) are good at finding patterns. This could be used to analyze the behaviors and tactics of Advanced Persistent Threats in order to better attribute incidents, and to mitigate them if such patterns are recognized in real time.

Cybersecurity has canonical patterns for analyzing structured and unstructured text. When these are intermixed with other data modalities such as images, videos and voice, the existing practices break down. This is where foundational models can shine.

- For example, consider intrusion detection based on the analysis of raw, unstructured network data, which exists today. In addition, suppose the network security team also has several images from its security and monitoring analytics dashboard showing the various firewalls, routers, and endpoints such as laptops, desktops and mobile devices, and that one image shows a user uninstalling the endpoint security software. An LLM that can analyze these images can read the timestamp within them and tie it to the cybersecurity intrusion event.

- Consider another scenario where a mortgage company has an online portal for buyers to complete the contract for the home buying process. Suppose a malicious actor submits a doctored contract. This would cause immense reputational damage and loss to the company. By using an LLM to process the ‘contract’ and search for an internal ‘watermark’, the company can detect the doctored contract, flag it and send it to an employee or human expert for validation.

- Suppose a company analyzes CCTV footage and provides a protection service for other companies. Today, it has humans watching these video streams and flagging spurious behavior. Due to human error, the person watching could miss critical events. If the company instead has a specialized, customized LLM that is built to recognize employees, contractors and vendors, the video stream can also be fed to this LLM, which detects any other, unapproved humans. This improves the efficiency of the humans analyzing the video streams and reduces errors.
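To make the doctored-contract scenario above concrete, the sketch below uses a keyed hash (HMAC) as a simple stand-in for the ‘watermark’. This is a deliberately swapped-in technique: it is not an LLM-based watermark search and not the mechanism of our pilot, and the key, function names and contract text are hypothetical. It only illustrates the detect, flag and escalate flow.

```python
import hashlib
import hmac

SECRET_KEY = b"example-key-store-in-a-vault"  # hypothetical; never hard-code in practice

def issue_tag(contract_text):
    """Compute the tag the company stores when it issues a contract."""
    return hmac.new(SECRET_KEY, contract_text.encode("utf-8"), hashlib.sha256).hexdigest()

def is_authentic(contract_text, tag):
    """Return True if the contract still matches the tag issued for it."""
    return hmac.compare_digest(issue_tag(contract_text), tag)

original = "Purchase price: 450,000 USD. Closing date: 1 March."
tag = issue_tag(original)
doctored = original.replace("450,000", "350,000")

print(is_authentic(original, tag))  # unmodified contract
print(is_authentic(doctored, tag))  # altered contract: route to a human expert
```

An exact-match tag like this catches any byte-level change; a model-based check would be needed where the document is legitimately re-rendered or scanned.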

To address scenarios like these, the solution needs to be built from the following building blocks:

- Build a scalable inference environment, where each incoming ‘event’ can be validated against the customized model with low latency

- Incorporate an MLOps practice that focuses on security to address cybersecurity threats

- Leverage the various foundational models that work with modalities such as text, images, audio and video data

- Consume foundational models directly for CyberSecurity

- Build customized AI systems using prompt engineering

- Keep the human in the loop and build an active learning engine that ensures the model is constantly learning and updated based on incoming events
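The first and last building blocks above can be sketched together as a small triage engine: each incoming event receives a threat score from the model, confident cases are handled automatically, and uncertain ones are queued for a human expert whose verdict is kept for retraining. The class name, thresholds and score values are illustrative assumptions.

```python
from dataclasses import dataclass, field

@dataclass
class TriageEngine:
    """Routes scored events; the thresholds are illustrative assumptions."""
    block_above: float = 0.9
    allow_below: float = 0.1
    review_queue: list = field(default_factory=list)
    labeled_examples: list = field(default_factory=list)

    def route(self, event, score):
        """Decide what to do with an event given the model's threat score in [0, 1]."""
        if score >= self.block_above:
            return "block"
        if score <= self.allow_below:
            return "allow"
        self.review_queue.append(event)  # uncertain: ask a human expert
        return "review"

    def record_human_label(self, event, is_threat):
        """Store the expert's verdict so the model can be retrained on it later."""
        self.review_queue.remove(event)
        self.labeled_examples.append((event, is_threat))

engine = TriageEngine()
print(engine.route("login from new device", 0.55))
engine.record_human_label("login from new device", True)
print(engine.labeled_examples)
```

Sending only the uncertain middle band to experts is what keeps human effort focused on the events from which the model has the most to learn.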

Emergence of the Student model and foundational model frameworks

The publicly available foundational models and LLMs (for text) today are built with generic training data to address common problems. In many cases, it is not clear the datasets that was used to train them. Though, Cybersecurity patterns may be generalized to a set of common problems, the specialied solution requires customized approaches. Further, this training of the model would require enterprises to build these customized Student foundational models trained with their internal data sources, applying the best practices of infrastructure security and using intelligent systems.

For instance, Dolly, a large language model (LLM) trained for less than $30 to exhibit ChatGPT-like human interactivity. Dolly 2.0, is the first open source, instruction-following LLM, fine-tuned on a human-generated instruction dataset licensed for research and commercial use. More and more frameworks are now available which are built with smaller relevant parameters and not the 100B+ parameters.

A student model is one that learns using an enterprise / domain specific data that learns from human experts in the loop and leverages publicly available models as trigger points. The student model runs several internal experiments. As part of Active Learning CyberOps, these student models run their intelligent smart loops (self study), then get evaluated by the human expert as part of the experiment phase and use LLM as external prompts to enrich their intelligence.
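One round of this loop can be sketched as follows. The example is a toy under stated assumptions: a keyword-count classifier stands in for the student foundation model, and `ask_teacher` and `ask_expert` are hypothetical callables standing in for a publicly available LLM and a human expert. It is not the implementation used in our pilot.

```python
from collections import Counter

class StudentModel:
    """Toy keyword-count classifier standing in for a student foundation model."""
    def __init__(self):
        self.threat_words = Counter()
        self.benign_words = Counter()

    def learn(self, text, is_threat):
        target = self.threat_words if is_threat else self.benign_words
        target.update(text.lower().split())

    def predict(self, text):
        """Return True/False, or None when the evidence is tied (uncertain)."""
        words = text.lower().split()
        threat = sum(self.threat_words[w] for w in words)
        benign = sum(self.benign_words[w] for w in words)
        if threat == benign:
            return None
        return threat > benign

def active_learning_round(student, events, ask_teacher, ask_expert):
    """Self-study where the student is confident; teacher hint plus expert verdict where not."""
    for event in events:
        guess = student.predict(event)
        if guess is None:
            hint = ask_teacher(event)        # external model acts as a prompt
            guess = ask_expert(event, hint)  # human expert has the final say
        student.learn(event, guess)

student = StudentModel()
student.learn("failed password root ssh", True)
student.learn("scheduled backup completed", False)

teacher = lambda event: "exfiltration" in event  # stand-in for a public LLM
expert = lambda event, hint: hint                # the expert accepts the hint here
active_learning_round(student, ["bulk exfiltration detected"], teacher, expert)
print(student.predict("exfiltration attempt"))
```

In this sketch the expert is consulted only when the student is uncertain, so expert time is spent on the events the student cannot yet classify.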

In our pilot implementation, we apply this concept to a select set of the cybersecurity use-cases described above. Benchmarking the student model with different data points (unstructured text) is showing promising results. The goal is to expand this to higher volumes and to other data modalities: images, video and voice.
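The student-model loop described above can be illustrated with a minimal sketch. It assumes a toy bag-of-words classifier as a stand-in for the student model and a callable `ask_expert` as a stand-in for the human expert; both names, and the uncertainty-sampling strategy, are illustrative assumptions rather than the pilot implementation.

```python
import math
from collections import Counter


class StudentModel:
    """Toy bag-of-words classifier standing in for a student model (assumption)."""

    def __init__(self):
        self.counts = {True: Counter(), False: Counter()}
        self.totals = {True: 0, False: 0}

    def update(self, text, is_threat):
        # Learn from one labeled event (label supplied by the human expert).
        for tok in text.lower().split():
            self.counts[is_threat][tok] += 1
            self.totals[is_threat] += 1

    def threat_probability(self, text):
        # Laplace-smoothed naive Bayes log-odds, squashed to a probability.
        vocab = len(set(self.counts[True]) | set(self.counts[False])) + 1
        log_odds = 0.0
        for tok in text.lower().split():
            p_threat = (self.counts[True][tok] + 1) / (self.totals[True] + vocab)
            p_benign = (self.counts[False][tok] + 1) / (self.totals[False] + vocab)
            log_odds += math.log(p_threat / p_benign)
        log_odds = max(-30.0, min(30.0, log_odds))  # avoid overflow in exp
        return 1.0 / (1.0 + math.exp(-log_odds))


def most_uncertain(model, pool):
    # Uncertainty sampling: pick the event whose score is closest to 0.5.
    return min(pool, key=lambda e: abs(model.threat_probability(e) - 0.5))


def active_learning_round(model, pool, ask_expert, budget):
    """Query the expert on the `budget` most uncertain events and retrain."""
    pool = list(pool)
    labeled = []
    for _ in range(min(budget, len(pool))):
        event = most_uncertain(model, pool)
        pool.remove(event)
        label = ask_expert(event)      # human in the loop
        model.update(event, label)     # student model learns immediately
        labeled.append((event, label))
    return labeled, pool
```

Each round spends the expert's limited time on the events the model is least sure about, which is the usual motivation for active learning over labeling events at random.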

5.3 Active Learning CyberOps: Threat Simulation using Chaos Monkey

Netflix developed the Chaos Monkey framework and hardened it several years ago. It is responsible for randomly terminating instances in production to ensure that engineers implement their services to be resilient to instance failures.

We extend Chaos Monkey with threat simulation built from past breaches (historical data provided by the enterprise), using synthetic data generation via Generative AI techniques for this domain to create specific test cases for cyber breaches. Enterprises can then harden their cybersecurity practices on a daily basis using this methodology and framework.

We seed an ML model with standard cyber breach events and then, using Generative AI, let the model generate new cyber breach events for network security, data systems security, application security, device security and so on. The Chaos Monkey framework uses this generated data and “attacks” the deployment. Since this is controlled by the IT team and the CyberOps team, they are able to take action and learn from new attack surface areas.

In our pilot implementation, we will benchmark the results and add them as a feedback loop to the Student model to accelerate its learning cycle.
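As a rough sketch of the simulation flow described above: seed events are expanded into synthetic variants, replayed against a detector, and the misses are collected as feedback. The `BreachEvent` fields, the seed values and the `detector` callable are hypothetical, and the recombination step is only a placeholder for a real generative model; none of this uses the actual Chaos Monkey API.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class BreachEvent:
    domain: str      # e.g. "network", "application", "device"
    technique: str   # e.g. "port_scan"
    target: str      # hypothetical asset name


# Hypothetical seed events; in practice these come from historical breach data.
SEED_EVENTS = [
    BreachEvent("network", "port_scan", "edge-gateway"),
    BreachEvent("application", "sql_injection", "billing-api"),
    BreachEvent("device", "credential_stuffing", "vpn-endpoint"),
]


def generate_synthetic_events(seeds, n, rng):
    """Placeholder for a generative model: pair known techniques with new targets."""
    targets = [s.target for s in seeds]
    events = []
    for _ in range(n):
        base = rng.choice(seeds)
        events.append(BreachEvent(base.domain, base.technique, rng.choice(targets)))
    return events


def run_simulation(events, detector):
    """Replay each simulated attack; return the events the detector missed."""
    return [e for e in events if not detector(e)]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so a simulation run is reproducible
    simulated = generate_synthetic_events(SEED_EVENTS, 5, rng)
    # Hypothetical detector that only recognizes port scans.
    missed = run_simulation(simulated, lambda e: e.technique == "port_scan")
    print(f"{len(missed)} of {len(simulated)} simulated attacks went undetected")
```

The list of missed events is what would be fed back to the Student model as new training examples.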

5.4 Active Learning CyberOps: Response Automation

One of the key aspects of CyberOps is the response when an event occurs. Enterprises have their own custom-developed code and processes. LLMs have potential in the Security Operations Center and in response automation over unstructured text. Scripts, tools, and possibly even reports can be written using these models, reducing the total amount of time professionals require to do their work.

As shown in detection, we apply Active Learning CyberOps to a set of advanced security use-cases within enterprises and describe how, using readily available LLMs, an enterprise can detect attacks much earlier. Such an approach would help customers centralize visibility and control, detect targets, and improve security across their entire platform.

Response Automation can be complex when you are an ISV with several customers deploying your SaaS product. For example, assume you provide a password management solution that several consumers and enterprise customers are using today. When there is a password breach, there is panic around identifying the impacted customers and notifying them of the impact. The situation can be similar for a banking application.

From a solution architecture standpoint, there is usually a control plane of your application that is deployed in the ISV’s cloud environment and a data plane in the various customers’ environments. When a cyber attack happens within the ISV’s control plane, the typical workflow today is:

- Understanding the attack surface area and completely shutting off the application

- Analyzing the threats running through the various AI systems

- Creating custom responses for each impacted consumer and enterprise customer

In our design, we incorporate this workflow and plan to benchmark it further.
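The last step of the workflow above, creating custom responses per impacted customer, can be sketched as follows. The `Customer` record, the service names and the message templates are hypothetical; in a real deployment an LLM could draft the text, and the fixed templates here only stand in for that step.

```python
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class Customer:
    name: str
    kind: str            # "consumer" or "enterprise"
    services: frozenset  # control-plane services this customer depends on


# Hypothetical templates standing in for LLM-drafted notifications.
TEMPLATES = {
    "consumer": Template(
        "Hi $name, we detected an incident affecting $services. "
        "Please reset your password."
    ),
    "enterprise": Template(
        "Security notice for $name: services $services were affected. "
        "Your account team will share an incident report."
    ),
}


def impacted(customers, breached_services):
    """Return (customer, affected services) for customers touching the breach."""
    breached = set(breached_services)
    return [
        (c, sorted(c.services & breached))
        for c in customers
        if c.services & breached
    ]


def draft_responses(customers, breached_services):
    """Draft one notification per impacted customer, keyed by customer name."""
    return {
        c.name: TEMPLATES[c.kind].substitute(name=c.name, services=", ".join(hit))
        for c, hit in impacted(customers, breached_services)
    }
```

Keeping the impact analysis separate from the drafting step means the same list of impacted customers can drive both notifications and follow-up reporting.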

5.5 Active Learning CyberOps: Bringing it all together

Enterprises guard access (and rightfully so) to publicly available Foundational Models and LLMs, since the prompts sent by employees might contain enterprise IP or data. An emerging pattern is to be selective in exposing the enterprise data by abstracting it behind a semantic index. Here is one of the patterns for this approach, as demonstrated by Microsoft.

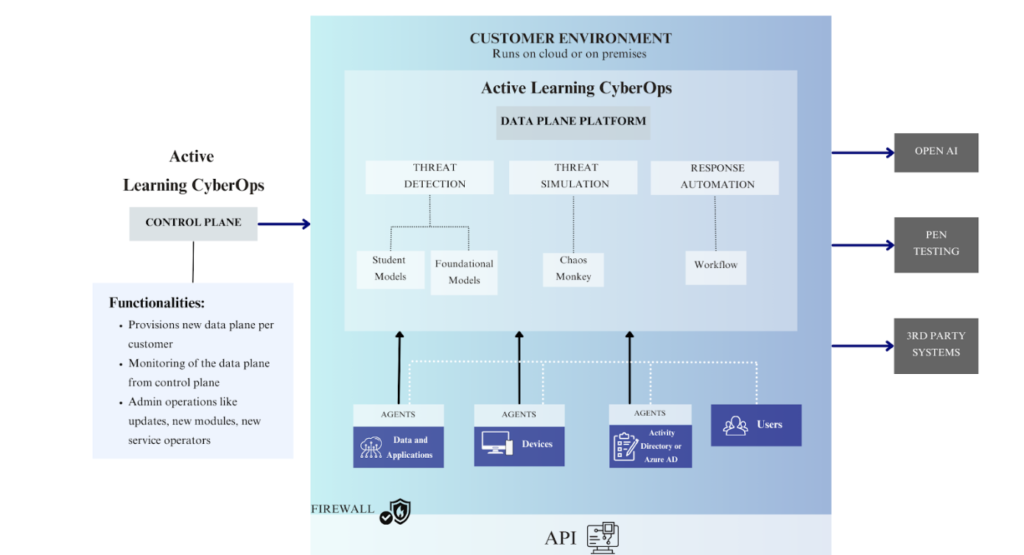
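To make the idea of selective exposure concrete, here is a minimal sketch in which only the top-ranked snippets from an internal index are placed in the prompt. The word-count "embedding" and the `build_prompt` helper are assumptions for illustration; a production system would use a real embedding model and a managed search index, and this is not the Microsoft reference implementation.

```python
import math
from collections import Counter


def embed(text):
    # Toy "embedding": a bag of lowercase words (stand-in for a real model).
    return Counter(text.lower().split())


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def build_prompt(question, documents, k=2):
    """Expose only the k most relevant snippets to the external model."""
    q = embed(question)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    context = "\n".join(ranked[:k])
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

Documents that do not rank in the top `k` never leave the enterprise boundary, which is the point of abstracting the data behind an index.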

It is also key to use best-of-breed, secure deployment topologies and best practices, clearly separating the control plane (which runs in the ISV’s cloud deployment) from the data plane (which runs in the customer’s cloud or on-premises deployment).

Active Learning CyberOps has an agent framework that is deployed in customers’ data and application services, devices and security systems. These agents securely communicate with the data plane components. Security models check whether the agents have been breached, and each agent node is checked by its neighbors to ensure that it is not compromised.
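One way the neighbor check could work is sketched below, under the assumption that each agent reports a SHA-256 fingerprint of its code and that each neighbor holds its own copy of the known-good fingerprint. The majority-vote rule and all names here are illustrative assumptions, not the framework's actual protocol.

```python
import hashlib
import hmac


def fingerprint(agent_code: bytes) -> str:
    """SHA-256 digest an agent reports about its own code or configuration."""
    return hashlib.sha256(agent_code).hexdigest()


def is_trusted(agent_id, reported, neighbors_of, expected_by):
    """An agent is trusted if a strict majority of its neighbors vouch for it.

    reported[a]    -> digest agent `a` reports about itself
    neighbors_of[a]-> ids of the agents that check `a`
    expected_by[n] -> known-good digest held by neighbor `n`
    """
    votes = [
        hmac.compare_digest(reported[agent_id], expected_by[n])  # constant-time compare
        for n in neighbors_of[agent_id]
    ]
    return sum(votes) * 2 > len(votes)


def compromised_agents(reported, neighbors_of, expected_by):
    """List every agent that fails the neighbor check."""
    return sorted(
        a for a in reported if not is_trusted(a, reported, neighbors_of, expected_by)
    )
```

Requiring a majority rather than a single vouching neighbor means one compromised neighbor cannot clear a tampered agent on its own.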

The control plane receives secure messages from the deployment, and the ISV can monitor the end-to-end system across all customers. The figure below showcases the architecture and the deployment topology.

Fig. 1. Illustration of a secured architecture within the enterprise

6 Conclusion

On the one hand, quantum computers will disrupt many of the systems we rely upon today, forcing a wholesale move to new solutions and algorithms. On the other hand, quantum technology will allow us to achieve unprecedented levels of cyber security in many aspects of cryptography, eliminating many threats at an early stage through accurate detection. Novel technology and methods like CyberOps AI will gain traction as we progress with newer use cases in Generative AI for CyberSecurity. As more of our lives move online for longer periods of the day, cyber attacks will increase exponentially, and bad actors will try to influence election results, disrupt major supply chain systems as in the Colonial Pipeline attack, steal intellectual property and generally disrupt a target nation. The victims of attacks are not always the intended targets; collateral damage is a common issue, especially when malware is used to spread exploits indiscriminately. It is of paramount importance to combine the efforts of private, public and research institutions and the government to counter the next big cyber pandemic. The authors are working on prototypes of CyberOps AI for asset management, phishing, and automatic vulnerability tests for all known cyber event use cases to date. We look forward to closer collaboration with institutions like CERN, CDAC, IEEE, ACM, CMU, University of Wisconsin-Madison and interested subject matter experts in this emerging space.

Acknowledgements

The authors would like to express their sincere gratitude to the following individuals for their invaluable contributions as reviewers and continued supporters of this research.

Michael Lisanti, Director of Partnerships at Carnegie Mellon University, CyLab

Wolfgang Pauli, Principal AI Research Engineer at Microsoft Corporation

Sanjay Mishra, Head of Product, Azure SQL and SQL Server at Microsoft Corporation

References

- Turing, A. M. (1950). Computing Machinery and Intelligence. Mind, 59(236), 433-460.

- McCarthy, J., Minsky, M., Rochester, N., & Shannon, C. E. (1955). A Proposal for the Dartmouth Summer Research Project on Artificial Intelligence. Retrieved from http://www-formal.stanford.edu/jmc/history/dartmouth/dartmouth.html

- Weizenbaum, J. (1966). ELIZA—a computer program for the study of natural language communication between man and machine. Communications of the ACM, 9(1), 36-45.

- Goodfellow, I., et al. (2014). Generative Adversarial Networks. In Proceedings of the 27th International Conference on Neural Information Processing Systems (NIPS’14), 2672-2680.

- Kotenko, I., & Stepashkin, D. (2006). Risk Assessment with Bayesian Networks: An Introduction to the BayesiaLab Software Package. In Proceedings of the 1st International Conference on Availability, Reliability and Security (ARES’06), 453-460.

- Carnegie Mellon University. CyLab Security and Privacy Institute. Retrieved from https://www.cylab.cmu.edu/

- University of Wisconsin-Madison. The Wisconsin Security Research Consortium. Retrieved from https://wisr.io/

- Microsoft AI Applied AI Blog: “Revolutionize Your Enterprise Data with ChatGPT: Next-Gen Apps” [Online]. Available: https://techcommunity.microsoft.com/t5/ai-applied-ai-blog/revolutionize-your-enterprise-data-with-chatgpt-next-gen-apps-w/ba-p/3762087

- “Netflix Chaos Monkey.” Netflix. [Online]. Available: https://netflix.github.io/chaosmonkey/

- Databricks Blog. “Dolly: First Open Commercially Viable Instruction-Tuned LLM.” Databricks, 12 Apr. 2023. [Online]. Available: https://www.databricks.com/blog/2023/04/12/dolly-first-open-commercially-viable-instruction-tuned-llm

- Active Learning Engine using GPT https://github.com/Azure/GPT_ALE

- Cost of a Data Breach Report 2022 https://www.ibm.com/downloads/cas/3R8N1DZJ

- Gartner Identifies the Top Cybersecurity Trends for 2023

- CyLab https://en.wikipedia.org/wiki/Carnegie_Mellon_CyLab

- Cybersecurity Moonshot http://web.mit.edu/ha22286/www/papers/IEEESP21_2.pdf

- CISA https://fedscoop.com/cisa-and-partners-issue-secure-by-design-principles-for-software-manufacturers/

- Crowdstrike CEO George Kurtz testifies https://www.intelligence.senate.gov/sites/default/files/documents/os-gkurtz-022321.pdf

- Cybersecurity Tools https://www.techrepublic.com/article/even-with-cybersecurity-tools-deployed/amp/

- The MITRE corporation https://www.mitre.org/

- The Defense Advanced Research Projects Agency (DARPA) https://www.darpa.mil/