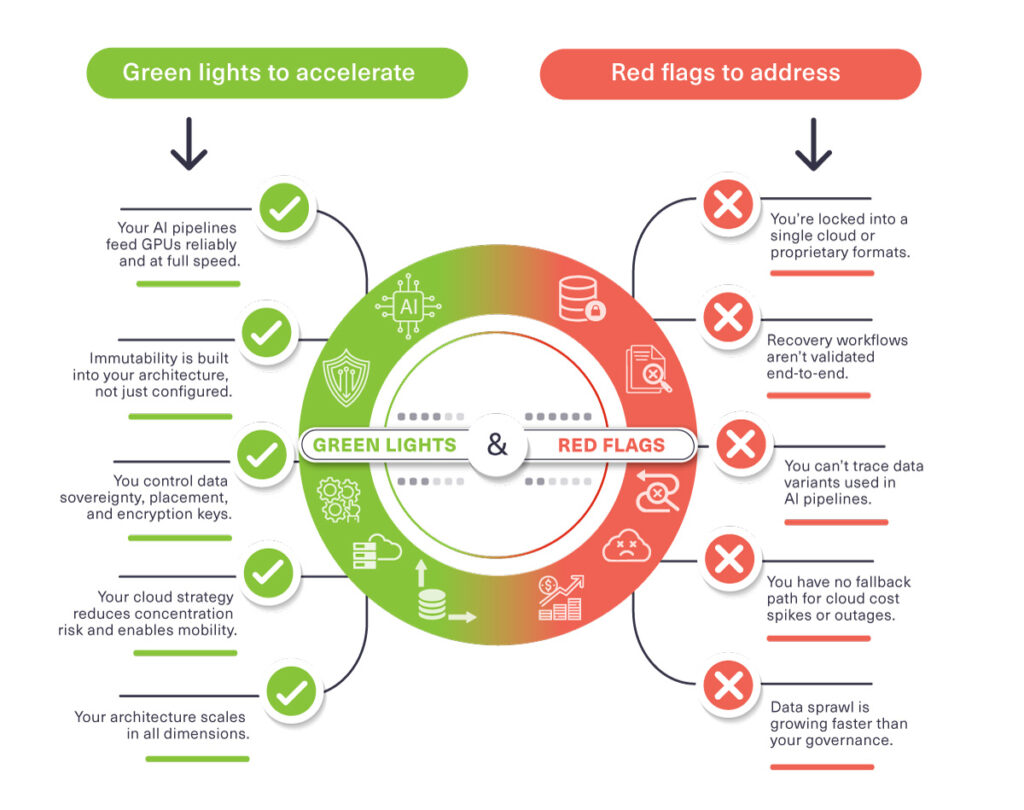

On Assessing Data Readiness Using the Key Signals 2025 Revealed. Q&A with Paul Speciale

Q1. The scorecard makes it clear that 2025 still exposed fundamental weaknesses in how organizations approach data resilience, from untested recovery plans to unverified AI data lineage. When you talk to IT leaders about these “red flags,” what’s the biggest psychological barrier you encounter? Is it the assumption that existing backup systems are “good enough,” or is there something deeper about how enterprises have traditionally approached infrastructure planning that needs to fundamentally change?

The primary challenge is less about complacency around backups and more about entrenched legacy infrastructure mindsets. Historically, enterprises have treated data resilience against cyber threats as a secondary consideration. The focus has been on storage capacity utilization and cost, shorter backup windows, and routine IT operations, rather than on treating resilience as a core, continuously validated capability. As a result, even well-resourced organizations often lack fully verified recovery pathways, reproducible AI data lineage, and end-to-end immutability, leaving them exposed to hidden risks despite the perception of “adequate” systems.

Q2. You identify vendor lock-in and cloud concentration as critical red flags, yet many organizations have invested millions in their current cloud architectures. When an organization realizes they’ve built on a foundation that restricts data mobility or creates single-point-of-failure dependencies, what does the realistic path forward look like? How do you help leadership teams navigate the tension between acknowledging sunk costs and the escalating risk of continuing down that path?

A pragmatic path forward starts with agreement to decouple key parts of the infrastructure stack so that each can be optimized independently: for example, decoupling compute resources from storage, adopting open protocol standards (such as S3-compatible storage), and establishing at least one independent fallback environment. Leadership must balance sunk costs against rising risk, positioning mobility and multi-cloud flexibility as a form of insurance: strategic investments today mitigate operational and regulatory exposure tomorrow. Implementation should be phased, beginning with critical workloads and progressively extending portability, without the need for immediate, full-scale replatforming.

Q3. The OVHcloud court ruling you cite represents a significant shift, proving that contractual data residency no longer satisfies jurisdictional requirements. For multinational organizations that have been relying on contractual assurances for years, this creates an immediate governance crisis. What are you seeing in terms of how enterprises are responding to this? Are there specific industries or regions where this shift is causing the most disruption, and what does “proof of control” actually look like in practice?

Enterprises are shifting from reliance on contractual assurances to demanding operational evidence: region-specific placement, customer-managed encryption keys, audit-ready data lineage, and independent mobility across cloud, on-prem, and edge environments. Regulated industries in Europe, the Middle East, and North America are under the greatest pressure, as courts and regulators increasingly require demonstrable control rather than trust in provider agreements. “Proof of control” entails verifiable tracking of data location, movement, and access permissions.

Q4. Your scorecard distinguishes between organizations that are genuinely AI-ready, with high-throughput, governed data pipelines and reproducible lineage, and those whose infrastructure can’t actually support reliable AI outcomes. Given the pressure on every enterprise to demonstrate AI progress, how do you cut through the hype when assessing whether an organization’s data foundation can truly support production AI? What specific technical debt or architectural gaps tend to surprise leadership teams who believed they were already AI-ready?

True AI readiness requires optimizing for fast data access, with low latency and high throughput, and building lineage-aware pipelines capable of delivering reproducible outcomes. Leaders are frequently surprised by gaps such as limited concurrency, small-object performance bottlenecks, untracked dataset versions, shadow copies, and manual preprocessing workflows. Effective evaluation moves beyond model size or GPU utilization and focuses instead on measurable throughput, completeness of data lineage, reproducibility, and governance controls.
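
To make "untracked dataset versions" concrete, here is a minimal sketch of one way a pipeline could fingerprint the exact data used by a training run. It is an illustration only, not a description of any product: the function names `dataset_fingerprint` and `lineage_record`, the record fields, and the in-memory `files` mapping are all hypothetical.

```python
import hashlib
import json


def dataset_fingerprint(files: dict[str, bytes]) -> str:
    """Return a deterministic SHA-256 fingerprint for a set of named objects.

    `files` maps an object name to its raw content (a hypothetical stand-in
    for objects read from storage).
    """
    # Hash each object's content individually.
    manifest = {name: hashlib.sha256(data).hexdigest() for name, data in files.items()}
    # Serialize with sorted keys so the result does not depend on insertion order.
    canonical = json.dumps(manifest, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


def lineage_record(run_id: str, files: dict[str, bytes]) -> dict:
    """Build a small record tying a run to the exact dataset version it used."""
    return {
        "run_id": run_id,
        "dataset_fingerprint": dataset_fingerprint(files),
        "object_count": len(files),
    }
```

If two runs record the same fingerprint, they read byte-identical inputs; if any object changes, the fingerprint changes, which is the property a reproducibility check would rely on.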

Q5. The scorecard emphasizes deep immutability, disaggregated architecture, and cloud-smart resilience, all of which require investment. In a macroeconomic environment where CFOs are scrutinizing every IT expenditure, how do you help organizations quantify the ROI of resilience? Is there a tipping point or specific event (whether it’s an outage, a cyber incident, or a failed audit) that finally makes these investments non-negotiable, or have you found ways to build the business case proactively before a crisis strikes?

Resilience ROI is best framed as risk mitigation: reducing downtime, avoiding compliance penalties, shortening recovery times, and ensuring AI reliability. While outages, cyber incidents, or audit failures create immediate urgency due to the operational impact and cost, proactive business cases rely on measurable metrics such as time-to-clean-restore, verifiable lineage coverage, and multi-site immutability to demonstrate that the cost of inaction outweighs the investment in prevention. Tipping points vary by context, but the most compelling cases align operational reliability with regulatory and reputational protection.
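
One common way to express the "cost of inaction" argument is as an expected annual loss compared before and after an investment. The sketch below assumes a deliberately simple model (incident probability times downtime times hourly cost); the function names and every input are hypothetical placeholders to be replaced with an organization's own estimates.

```python
def annualized_loss(incident_probability: float,
                    downtime_hours: float,
                    cost_per_hour: float) -> float:
    """Expected yearly loss: chance of an incident times its estimated cost."""
    return incident_probability * downtime_hours * cost_per_hour


def resilience_net_benefit(loss_before: float,
                           loss_after: float,
                           annual_investment: float) -> float:
    """Reduction in expected loss minus the yearly cost of the investment.

    A positive result means the avoided loss exceeds what was spent.
    """
    return (loss_before - loss_after) - annual_investment
```

Metrics such as time-to-clean-restore feed directly into the `downtime_hours` estimate, which is why measuring them before a crisis strengthens the proactive case.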

Resources

THE 2026 DATA READINESS SCORECARD Assess your data readiness using the key signals 2025 revealed.

………………………………………………..

Paul Speciale, CMO, Scality

Over 20 years of experience in technology marketing and product management. Key member of the team at four high-profile startup companies and two Fortune 500 companies.

Sponsored by Scality