“The AI Factory is a revolutionary concept aimed at streamlining the creation and deployment of AI models within organizations. It serves as a centralized hub where data scientists, engineers, and domain experts collaborate to develop, train, and deploy AI solutions at scale.”

Q1. You joined KX as Chief Executive Officer in August 2022 (*). How has the database and the AI market developed since then?

Ashok Reddy: Since taking on the role of Chief Executive Officer at KX in August 2022, I have observed significant transformations within the database and AI markets, underscored by burgeoning investments and innovations across several key segments:

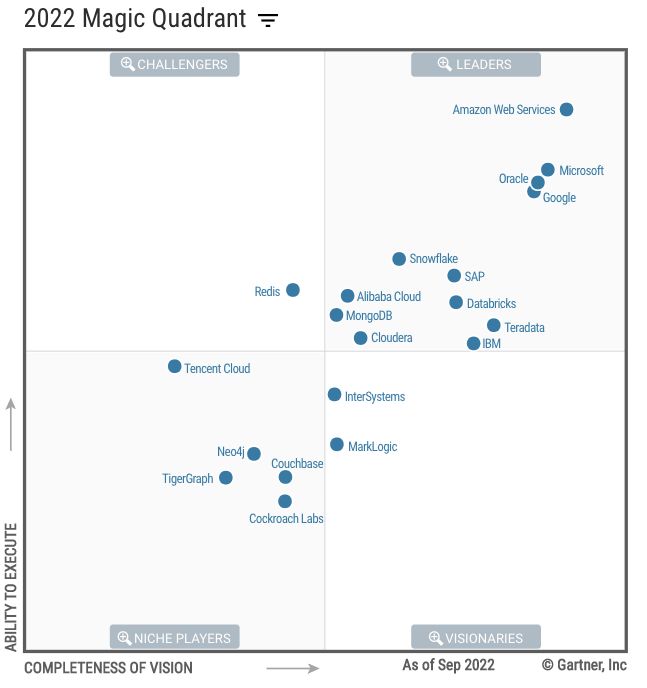

Non-relational Databases (NRDBMSs): These databases have experienced notable growth, driven by the demand for flexible, scalable data management systems that accommodate the complex needs of modern, data-intensive applications.

Analytics and Business Intelligence Platforms: This segment has continued to expand rapidly, fueled by the increasing need for sophisticated analytical tools that can provide deeper insights into vast datasets, enabling more informed decision-making processes.

Data Science and AI Platforms: The emergence and integration of advanced data science and Generative AI (GenAI) technologies have propelled this sector forward, with organizations seeking powerful platforms that can drive AI-driven innovation and operational efficiency.

These industry segments have showcased annual growth rates ranging from 20 to 25%, highlighting a substantial shift towards technologies that are not only agile and scalable but also capable of underpinning advanced analytics and AI-driven applications.

Q2. It seems that securing a tangible return on investment from artificial intelligence (AI) is still a challenge. Do you agree? How can you ensure your AI has an ROI?

Ashok Reddy: Yes, achieving a tangible return on investment from artificial intelligence (AI) poses a challenge, but it’s not unattainable. AI is fundamentally a prediction technology, as highlighted in “Power and Prediction” by Ajay Agrawal, Avi Goldfarb, and Joshua Gans, and in “Competing in the Age of AI” by Karim R. Lakhani and Marco Iansiti. It gives organizations the ability to make informed predictions based on extensive datasets, and this capability is key to gaining a competitive advantage.

To ensure AI initiatives yield a tangible ROI, a strategic focus on leveraging prediction technology is crucial. This involves minimizing prediction costs, which can be achieved by reducing the incidence and impact of prediction errors and continually refining AI models to enhance their accuracy. By strategically lowering these costs, companies can boost operational efficiency, foster innovation, and elevate revenues.

Moreover, viewing AI’s predictive prowess as a strategic asset allows for the alignment of AI endeavors with specific business goals. Whether the aim is to streamline operational processes, improve customer experiences, or explore new market opportunities, the predictive capacity of AI can be effectively utilized to achieve concrete business results.

Q3. What is the AI Factory concept, and what is its significance?

Ashok Reddy: The AI Factory is a revolutionary concept aimed at streamlining the creation and deployment of AI models within organizations. It serves as a centralized hub where data scientists, engineers, and domain experts collaborate to develop, train, and deploy AI solutions at scale. The AI Factory is essential for organizations looking to leverage AI effectively across various business functions, enabling them to drive innovation, enhance productivity, and gain a competitive edge.

Q4. How does an AI Factory facilitate the utilization of a single GenAI model across multiple functions and tasks?

Ashok Reddy: An AI Factory empowers organizations to leverage a single GenAI model for a multitude of functions and tasks through systematic processes and automation. By centralizing model development and deployment, the AI Factory ensures consistency, scalability, and reusability of AI solutions. This enables organizations to streamline workflows, optimize resource allocation, and extract maximum value from their AI investments.

Q5. What are your tips for scaling AI in practice?

Ashok Reddy: Adopting an AI Factory approach will enable businesses to scale AI. In practice, this means changing how we think about the typical tasks our workforce is asked to complete and how we apply [AI] technology to support not only the completion of each task but the entire job motion itself. For example, if we can enable paralegals to handle the document review and preparation that a lawyer typically does, we free that lawyer to dedicate more time to complex cases, thereby revolutionizing the job processes for both roles.

By rethinking how we scale AI within the human workforce, we can empower professionals to pursue roles of greater strategic value, reduce time to market for AI solutions, and ensure consistency and quality across AI initiatives.

Q6. Let’s now look at GenAI. Several specialized companies offer so-called GenAI “foundation models”: deep learning algorithms pre-trained on massive amounts of public data. How can enterprises take advantage of such foundation models?

Ashok Reddy: Foundation models provide a massive head start for enterprises, but their value lies in adaptation and thoughtful integration. Some examples of adoption of these models for organizations to consider are:

- Reducing Development Time and Costs: Instead of building complex AI models from scratch, enterprises can fine-tune foundation models for specific tasks, saving significant resources.

- Unlocking New Applications: The flexibility of foundation models (for text, image generation, etc.) enables creative applications, potentially disrupting existing workflows.

- Democratizing AI Access: Smaller businesses and those without in-house AI expertise can leverage pre-trained models via APIs or user-friendly platforms.

- The RAG Connection for Leveraging Private Data: Foundation models are the natural ‘generation’ component of a retrieval-augmented generation (RAG) pipeline, with retrieval over your private data supplying the context they lack. Accessing knowledge at scale becomes easier.
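In a RAG pipeline, a retriever first selects the most relevant passages from private data, and the foundation model then generates an answer conditioned on them. The minimal sketch below illustrates only the retrieve-and-augment half, assuming a toy bag-of-words retriever over an in-memory list of documents; `retrieve` and `build_prompt` are hypothetical names, the documents are invented, and a production system would use learned embeddings and a vector database instead of word counts. The actual foundation model call is left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a stand-in for a real embedding model."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k private documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Augment the user question with the retrieved private context."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only the context below.\nContext:\n{joined}\nQuestion: {query}"


# Hypothetical private documents.
docs = [
    "Q3 revenue for the EMEA region grew 12 percent.",
    "The cafeteria menu changes every Monday.",
    "EMEA headcount was flat in Q3.",
]
question = "How did EMEA revenue change in Q3?"
context = retrieve(question, docs, k=2)
prompt = build_prompt(question, context)  # this string would be sent to the model
```

The irrelevant cafeteria note is filtered out before generation, which is the point of grounding the model in retrieved private data rather than relying on its pre-training alone.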

However, to effectively deploy these strategies, enterprise organizations should consider putting a few guardrails in place, such as:

- Domain-Specific Fine-Tuning and Data: Introduce your own high-quality, domain-specific datasets to combat potential irrelevance or bias in the pre-trained model.

- Constraints and Sanity Checks: Embed rules derived from industry knowledge and common sense into your fine-tuned model to avoid unrealistic or undesirable outputs.

- Continuous Evaluation: Don’t treat a foundation model as static. Test regularly against real-world scenarios and adjust as needed.

- Human-AI Collaboration: Emphasize explainability, critical evaluation of outputs, and maintaining human oversight. This is vital for trust and responsible deployment.
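The “Constraints and Sanity Checks” and “Continuous Evaluation” guardrails can be combined into a small regression harness that is rerun whenever the model or its data changes. The sketch below is hypothetical: `stub_model` stands in for a call to a fine-tuned foundation model, the test cases are invented, and the single rule in `sanity_checks` is an illustrative domain constraint, not a recommended policy.

```python
from typing import Callable


def sanity_checks(output: str) -> list[str]:
    """Hypothetical domain rules; returns a list of violated rules."""
    problems = []
    if not output.strip():
        problems.append("empty output")
    if "guaranteed return" in output.lower():
        problems.append("makes a prohibited financial promise")
    return problems


def evaluate(model: Callable[[str], str], cases: list[tuple[str, str]]) -> float:
    """Fraction of cases whose output contains the expected text and passes all rules."""
    passed = 0
    for prompt, expected in cases:
        out = model(prompt)
        if expected.lower() in out.lower() and not sanity_checks(out):
            passed += 1
    return passed / len(cases) if cases else 0.0


def stub_model(prompt: str) -> str:
    # Stand-in for a fine-tuned foundation model call.
    if "capital" in prompt:
        return "Paris is the capital of France."
    return "This fund offers a guaranteed return of 20%."


cases = [
    ("What is the capital of France?", "Paris"),
    ("Describe the fund.", "fund"),
]
score = evaluate(stub_model, cases)  # second case fails the sanity check
```

Tracking this score over time, rather than checking it once, is what turns a one-off test into continuous evaluation.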

Q7. Three issues are very sensitive when talking about GenAI: quality and relevance of public data, ethics, and accountability. What is your take on this?

Ashok Reddy: I think these issues are important to consider as we, as a collective industry, work to create expanded capabilities in the area of GenAI.

When it comes to the quality and relevance of public data, the two most important considerations are bias amplification and domain mismatch. First, public datasets often contain biases. Enterprises need rigorous pre-processing and bias mitigation techniques to avoid harmful outputs. This should include work to identify and mitigate biases within the models during development and testing to make sure we’re approaching GenAI creation with a sense of fairness and inclusivity.

With regard to domain mismatch, it’s important to know that data used to train the foundation model may not align with your specific enterprise needs. Fine-tuning and supplementing with your own data is essential.

On the topic of ethics, we’re seeing instances of misinformation and deepfakes in our everyday consumption of news and social media. Organizations should ensure foundation models are not misused for malicious purposes by implementing safeguards and internal policies to guide responsible use. As a society, we need to consume with a discerning eye and make sure we hold creators accountable.

In the area of accountability, I believe transparency, governance, and continuous monitoring of models are all critical issues to be addressed.

Where possible, aim for some level of transparency in your foundation model’s decisions. This builds trust and helps debug potential issues. Establish clear ownership and protocols for using GenAI within your enterprise, defining acceptable use cases and limitations.

And, as foundation models evolve, so too must your risk assessment and ethical considerations. Therefore, establishing a method for continuous monitoring is key.

To help accomplish oversight in these areas, it’s important that we adopt grounding strategies.

The first way to do this is to use your knowledge base as an anchor, supplementing public data with your curated knowledge base of reliable sources, financial reports, or industry-specific data. This helps the model gain a better understanding of your domain’s facts and principles.

Secondly, you should use RAG for verification. If your foundation model generates text, use a RAG model to cross-check its outputs against trusted knowledge sources, reducing the chance of spreading misinformation.

And finally, make sure to include explainability as a requirement. Prioritize techniques that give insight into why the model generates certain outputs. This helps spot issues and maintain a strong link to reality.
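The RAG-based verification step can be sketched as a post-generation check that flags sentences with little support in trusted sources. This is a deliberately crude, hypothetical illustration: it uses word overlap as a proxy for “supported”, the 0.6 threshold is arbitrary, and the trusted passages are invented. Real systems more often pair retrieval with an entailment or fact-checking model.

```python
import re


def words(text: str) -> set[str]:
    """Lowercase word set used as a crude lexical fingerprint."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def support_score(sentence: str, source: str) -> float:
    """Fraction of the sentence's words that also appear in the source."""
    s = words(sentence)
    return len(s & words(source)) / len(s) if s else 1.0


def flag_unsupported(generated: str, sources: list[str], threshold: float = 0.6) -> list[str]:
    """Return generated sentences whose best support falls below the threshold."""
    sentences = [x.strip() for x in re.split(r"(?<=[.!?])\s+", generated) if x.strip()]
    flagged = []
    for sent in sentences:
        best = max((support_score(sent, src) for src in sources), default=0.0)
        if best < threshold:
            flagged.append(sent)
    return flagged


# Hypothetical trusted knowledge base and model output.
sources = [
    "Product A ships with a two-year warranty.",
    "Product A is available in blue and black.",
]
generated = "Product A ships with a two-year warranty. Product A is waterproof to 50 meters."
flagged = flag_unsupported(generated, sources)
```

Flagged sentences would be routed to a human reviewer or regenerated, which keeps unsupported claims from reaching users.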

Q8. What staffing approach is required for operating an AI Factory? Is it about hiring new specialists or upskilling existing business and tech roles, or both?

Ashok Reddy: Operating an AI Factory requires a strategic approach to staffing that combines both hiring new specialists and upskilling existing talent. While recruiting individuals with expertise in AI, machine learning, and data science is crucial for driving innovation, it’s equally important to invest in upskilling existing business and tech roles. By fostering a culture of continuous learning and development, organizations can ensure that their workforce remains adaptable and proficient in leveraging advanced AI technologies effectively.

Q9. Harvard Business Review (**) reported that “Gartner research has identified five forces that will keep the pressure on executives to keep learning, testing, and investing in the GenAI technology: 1) Board and CEO expectations; 2) Customer expectations; 3) Employee expectations; 4) Regulatory expectations; and 5) Investor expectations.” What does it mean in practice?

Ashok Reddy: Boards and CEOs are looking to implement solutions that have clear data pipelines, experimentation processes and a focus on translating AI insights into actionable decisions, all attributes of a well-structured AI Factory for continuous innovation.

Customers want AI-enhanced experiences. Organizations can work to deploy AI from the Factory for personalized recommendations, intelligent chatbots, and streamlined processes. Constant evaluation and refinement are the keys to success.

When it comes to employees, using AI as a Co-pilot or Assistant can help upskill employees to collaborate with the AI tools developed in their AI Factory. This reduces mundane tasks and fosters a sense of empowerment.

When it comes to regulatory expectations, you can look to explainability and bias mitigation and work to design an AI Factory with transparency in mind. Incorporate tools and processes to explain AI outputs and proactively address potential biases in datasets and models.

Investors are going to look for an AI Factory to produce ROI. For this, organizations can demonstrate a clear link between their AI Factory investments and tangible business outcomes (cost savings, revenue growth, risk reduction), while also working to underscore their commitment to ethical and responsible AI use.

An AI Factory approach isn’t a magic solution but a strategic framework. Executives who develop a structured plan for building their AI Factory, one that is responsive to these five forces, will gain trust and secure sustained investment for AI initiatives.

Q10. How has KX developed since August 2022?

Ashok Reddy: Since August 2022, KX has been at the forefront of driving accelerated computing for data and AI-driven analytics, catering specifically to AI-first enterprises. Our focus on strategic partnerships has allowed us to deliver cutting-edge solutions tailored to meet the evolving needs of our customers.

Furthermore, our commitment to innovation and customer-centricity has solidified our position as a trusted partner in the AI-driven analytics space. By aligning our efforts with market demands, we continue to lead the way in delivering transformative solutions that drive success for our customers.

……………………………………….

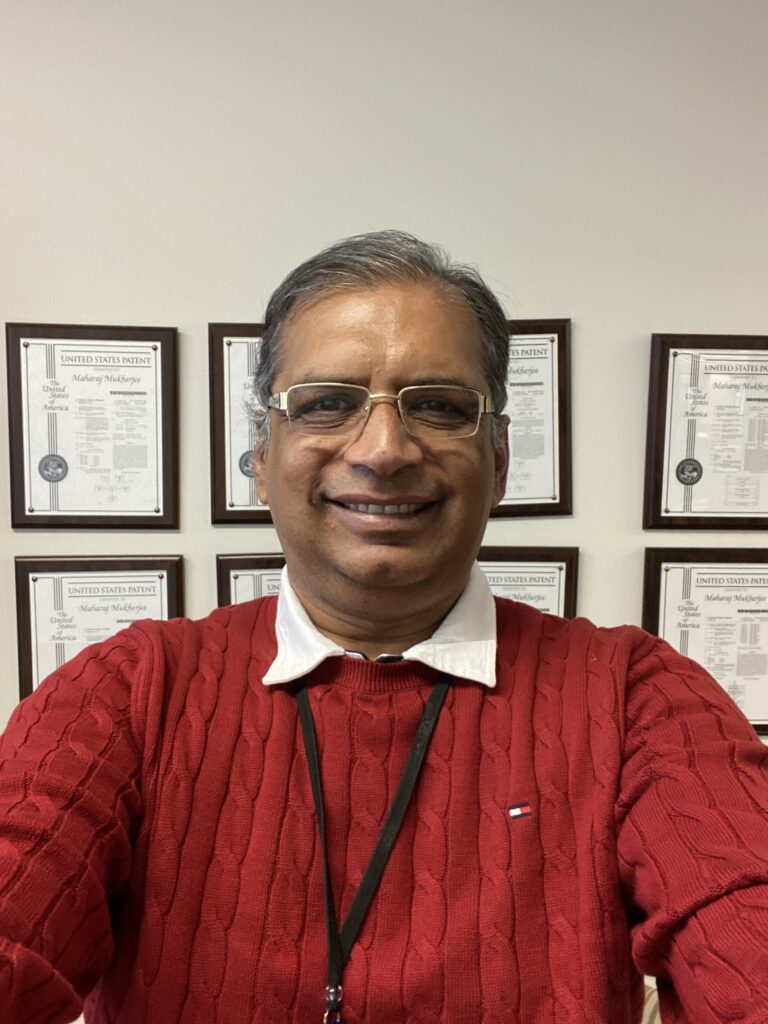

Ashok Reddy, CEO, KX

One of the leading voices in vector databases, search, and temporal LLMs, Ashok joined KX as Chief Executive Officer in August 2022. He has more than 20 years of experience leading teams and driving revenue for Fortune 500 and private equity-backed technology companies. He spent ten years at IBM as Group General Manager, where he led the end-to-end delivery of enterprise products and platforms for a diverse portfolio of global customers. In addition, he has held leadership roles at CA Technologies and Broadcom, and worked as a special adviser to digital transformation company Digital.AI, where he helped the senior leadership team devise the product and platform vision and strategy.

Resources:

(**) 5 Forces That Will Drive the Adoption of GenAI. Harvard Business Review, Dec 14, 2023.

Related Posts:

On Generative AI. Interview with Maharaj Mukherjee. ODBMS Industry Watch, December 10, 2023.

On Generative AI. Interview with Philippe Kahn. ODBMS Industry Watch, June 19, 2023.

…………………

“Digital ethics must be context specific. To bridge the operationalization gap, we must consider that digital ethics cannot be a one-size-fits-all approach.”

Q1. What are your responsibilities and current projects as Director Bioethics & Digital Ethics at Merck KGaA?

Jean Enno Charton: As the Director of Bioethics and Digital Ethics at Merck KGaA, I’m responsible for addressing ethical considerations and questions that arise from rapid advancements in science and technology, especially in areas of legal ambiguity and moral complexity. Merck, as a leading science and technology company, is perpetually at the forefront of pioneering new projects that redefine our offerings and customer interactions. Consequently, my responsibilities and projects are dynamic, evolving in line with Merck’s growth and the ever-changing scientific landscape. Over recent years, I have built up a team of specialists that works as a sort of ‘in-house consultancy’ for ethics.

For example, we have to carefully evaluate which organizations we supply with our life science products, such as genome editing tools, to prevent potential misuse or abuse for unethical purposes. Other recent projects that I am involved in are related to stem cell research, human embryo models, fertility technologies, clinical research, donation programs, and others. My work also includes ensuring data and algorithms at Merck are used in ethically favourable ways that align with our values and principles. Here, we are frequently delving into ethical considerations surrounding AI deployment and data utilization in research and development, data analytics, human resource management and other areas.

In addition, we coordinate with two advisory panels that provide independent external guidance on how to use the scientific and digital innovations developed by Merck KGaA in a responsible manner. These are the Merck Ethics Advisory Panel for Science and Technology (MEAP) and the Digital Ethics Advisory Panel (DEAP). My team and I select the topics and experts for these panels and disseminate their recommendations.

Q2. Recent attempts to develop and apply digital ethics principles to address the challenges of the digital transformation leave organizations with an operationalisation gap. Why?

Jean Enno Charton: The operationalisation gap in digital ethics arises mainly due to the necessarily high level of abstraction of ethical principle frameworks vis-à-vis the granularity needed to answer day-to-day challenges that arise from data and AI use.

Ethical principles are typically formulated at a high level of abstraction. While these principles serve as essential compass points, they lack the specificity needed for practical application. Imagine a map with coordinates indicating the general direction but failing to provide street-level details. Similarly, high-level ethical principles guide organizations but fall short when it comes to navigating the intricacies of real-world scenarios.

Moreover, digital ethics must be context specific. To bridge the operationalization gap, we must consider that digital ethics cannot be a one-size-fits-all approach. Each organizational context, whether it’s healthcare, finance, or human resources, presents unique challenges. In some instances, you may need geopolitical maps, and in others you may want geographical maps.

Q3. What are the main challenges in translating high-level ethics frameworks into practical methods and tools that match organization-specific workflows and needs?

Jean Enno Charton: The primary challenge lies in the intellectual capacity required for such translation. In the realm of bioethics and digital health, ethical considerations are intricate and multifaceted, demanding a nuanced approach to each unique scenario.

Organizations must have the capability to create tools and methodologies that fit their specific operational needs. Careful planning is essential, including a strategy for integrating these tools into current systems with minimal disruption and optimal productivity. Furthermore, fostering interdepartmental cooperation is crucial to overcome the common challenge of compartmentalization within organizations. Often, the resources necessary for such endeavors are scarce or inadequately allocated.

Additionally, digital ethics presents unique challenges that necessitate a fundamental shift from the traditional model of one-time advisory consultations. The highly automated and dynamic nature of project oversight, coupled with the scale and velocity of data analytics projects, calls for ongoing ethical engagement and innovative approaches to responsibility assignment. In dispersed data analytics teams, attributing ethical accountability is particularly difficult because individual responsibilities can become unclear. Consequently, there is an imperative for developing new methodologies for ethical assessment. These methodologies must be adaptable to the changing landscape and sufficiently nuanced to reflect the complexities of data and AI utilization.

Q4. You helped develop a risk assessment tool called Principle-at-Risk Analysis (PaRA). What is it? And how does it work in practice at Merck KGaA?

Jean Enno Charton: The Principle-at-Risk Analysis (PaRA) is a standardized risk assessment tool developed to bridge high-level ethics frameworks with practical methods and tools that align with specific workflows and needs. At Merck KGaA, PaRA guides and harmonizes the work of the DEAP, ensuring alignment with the company’s Code of Digital Ethics (CoDE).

How does PaRA work?

- Identification: The first step is to identify potential scenarios or decisions in the project seeking guidance from the DEAP where ethical principles could be compromised. These principles include privacy, transparency, fairness, and accountability. At the end of the process, the panel receives a list of potential conflicts between Merck’s CoDE and the project being investigated, enabling a comprehensive review of all relevant ethical concerns.

- Assessment: Once potential risks are identified, they are assessed based on their potential impact on ethical principles. The assessment considers factors such as the severity of the potential harm, the likelihood of occurrence, and the company’s ability to mitigate the risk.

- Mitigation: After assessing the risks, measures are implemented to mitigate or manage them effectively. This may involve adjusting processes, implementing safeguards, or providing additional training and guidance to employees involved in decision-making.

- Monitoring and Review: The PaRA framework emphasizes ongoing monitoring and review of ethical risks to ensure that mitigation measures remain effective. This includes regular audits, feedback mechanisms, and adapting strategies as new risks emerge or circumstances change.

- Integration with Decision-Making: Importantly, the PaRA framework is integrated into the company’s decision-making processes. This ensures that ethical considerations are taken into account when making business decisions, from strategic planning to day-to-day operations.

In practice, we have applied PaRA in various contexts, such as ensuring the comprehensibility of consent forms in data-sharing scenarios at Syntropy, a collaborative technology platform for clinical research. The tool can also be applied across various departments and functions, such as research and development or marketing.
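The interview does not describe how PaRA scores risks internally, so the following is only a hypothetical sketch of the identification and assessment steps. It records each potential conflict against a principle and ranks conflicts with a common risk-matrix heuristic (severity times likelihood), extended by a factor for how hard the risk is to mitigate. The 1 to 5 scales, the weighting, the `Risk` class, and the example entries are all assumptions for illustration, not Merck’s method.

```python
from dataclasses import dataclass

# Principles named in the interview; a real code of ethics may list more.
PRINCIPLES = {"privacy", "transparency", "fairness", "accountability"}


@dataclass
class Risk:
    principle: str     # principle potentially at risk
    description: str
    severity: int      # 1 (minor) .. 5 (severe harm)
    likelihood: int    # 1 (rare) .. 5 (almost certain)
    mitigability: int  # 1 (hard to mitigate) .. 5 (easily mitigated)

    def __post_init__(self) -> None:
        if self.principle not in PRINCIPLES:
            raise ValueError(f"unknown principle: {self.principle}")
        for name in ("severity", "likelihood", "mitigability"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be in 1..5, got {value}")

    @property
    def score(self) -> int:
        # Hypothetical weighting: harder-to-mitigate risks score higher.
        return self.severity * self.likelihood * (6 - self.mitigability)


def prioritize(risks: list[Risk]) -> list[Risk]:
    """Order identified risks so the panel reviews the most pressing first."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Invented example entries for a data-sharing project.
risks = [
    Risk("privacy", "Re-identification of shared patient data", 5, 2, 3),
    Risk("transparency", "Consent form hard to understand", 3, 4, 4),
    Risk("fairness", "Model underperforms for a minority group", 4, 3, 2),
]
ranked = prioritize(risks)
```

A numeric ranking like this only orders the discussion; the mitigation, monitoring, and decision steps still depend on human judgment.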

Q5. How can ethics panels make an effective contribution to implementing digital ethics in a commercial organization?

Jean Enno Charton: Ethics panels can play a crucial role in implementing digital ethics in a commercial organization by providing expertise, guidance, and oversight in navigating complex ethical quandaries associated with digital technologies.

In the corporate context of companies like Merck, internal ethics teams may be limited in size, hindering their ability to handle diverse ethical issues effectively. This is where external advisory panels come in, contributing diverse knowledge from technical fields, ethics, sociology, anthropology, and law. This diverse expertise is essential for addressing complex ethical conundrums in areas such as stem cell research, patient consent, and AI in HR.

Ethics panels also act impartially in balancing commercial interests and ethical considerations, ensuring fair outcomes.

They contribute to developing robust ethical policies and frameworks, drawing on their experience in policy formation, public consultation, or regulatory roles. DEAP, for instance, assisted in developing CoDE and PaRA, among other recent methodological advancements at Merck.

Panels are also well equipped to conduct risk and opportunity assessments to identify potential ethical concerns and prioritize appropriate countermeasures. This approach promotes a more humane technological landscape that aligns opportunities with ethics for a conscientious contribution.

Q6. Merck created a digital ethics panel composed of external experts in digital ethics, law, big data, digital health, data governance, and patient advocacy. How do you handle conflicts of interest and ensure a neutral approach?

Jean Enno Charton: To address potential conflicts of interest and maintain neutrality, panel members are required to disclose potential conflicts of interest, affiliations with other organizations, or any other factors that might affect their impartiality. This disclosure is part of an agreement that panel members make with Merck during the recruitment process, ensuring a commitment to good faith actions.

Additionally, Merck employs a transparent selection process for its panel members. Candidates are meticulously chosen through a comprehensive process that prioritizes independence, breadth of expertise that is pertinent to Merck’s diverse portfolio, academic merit, diversity of viewpoints, and the capacity to accurately reflect the views pertinent to specific geographic regions as well as the interests of minority groups that are often underrepresented in ethical assessments.

Furthermore, our Code of Ethics and PaRA standardize the panel’s activities, providing structured support in the decision-making processes and thereby ensuring the utmost neutrality and integrity in the panel’s operations.

Q7. How do you avoid the risk that an ethics panel will form an isolated entity within the company?

Jean Enno Charton: Avoiding the risk of an ethics panel becoming an isolated entity within a company involves a delicate balance of independence and integration. It is crucial for such a panel to maintain a certain level of detachment to offer unbiased independent opinions. Yet, it’s equally important to prevent the panel from becoming isolated. At Merck, we achieve this balance by fostering a sense of inclusion within both the Merck and scientific communities.

Our panel members, while not Merck employees, often have a long-standing relationship with the company. We ensure they are well informed about Merck’s activities and specific use cases, enabling them to offer valuable insights without internal biases.

To promote transparency, we communicate the panel’s activities, decisions, and recommendations within the company through minutes or other appropriate communication methods. This open communication helps other stakeholders understand and value the panel’s role, mitigating the risk of isolation. It also nurtures a sense of belonging and contribution to the broader scientific endeavor at Merck.

Q8. Can you share some details of a best practice you have developed using the PaRA tool?

Jean Enno Charton: One of the best practices we have developed using the PaRA tool involves integrating it with other ethical frameworks, such as CoDE, and collaborating with experts, including DEAP and my own team, to ensure a comprehensive approach to ethical considerations.

It’s important to note that the PaRA tool doesn’t encompass all ethical aspects. Therefore, revisiting and reassessing the tool’s output is sometimes necessary to identify any overlooked elements. This practice has significantly enhanced how effectively we use the tool.

Q9. What are the main lessons you have learned?

Jean Enno Charton: One of the main lessons I have learned is the importance of scientific rigor in developing and implementing a risk analysis tool like PaRA. While a principled framework provides a foundation, it must be supported by rigorous scientific and ethical analysis to ensure its effectiveness and meaningfulness. This involves conducting thorough research, gathering relevant data, and engaging with experts in various fields to inform the development of the tool.

Another key lesson is the necessity of garnering support from senior leadership for the success of the tool. Without the backing of senior leadership, it would have been challenging – if not impossible – to develop and implement PaRA effectively.

Further, I have learned the need for flexibility and sensitivity to the specific needs of individual departments within the organization. While overarching ethical principles guide the development of the tool, it’s essential to recognize that different departments may face unique challenges and priorities. As such, the tool must be adaptable enough to accommodate these varying needs while upholding ethical standards consistently across the organization. This flexibility ensures that the tool remains relevant and applicable across diverse departments and scenarios.

Q10. How do you make sure that the recommendations developed on the basis of the Principle-at-Risk Analysis are really enforced and not ignored in practice?

Jean Enno Charton: From a governance perspective, our role is advisory rather than decision-making or enforcement. We aim to convince our collaborators to adopt the recommendations from the panels and implement the principles laid out in Merck’s Code of Digital Ethics.

Departments and individuals bear the primary responsibility for adhering to these principles. However, we support department leaders in creating mechanisms for effective enforcement.

For instance, we have created a handbook-style self-assessment tool for project managers working with generative AI to identify and mitigate ethical risks during project development. Additionally, we have embedded semi-automated ethics assessment processes into existing project management structures within data analytics.

We also actively engage with department leaders to encourage follow-up and implementation of recommendations to ensure accountability and transparency. When recommendations are not feasible due to various constraints, we communicate the constraints to the ethics panel, valuing their input while outlining implementation barriers.

Q11. What are the challenges and limitations of such an approach?

Jean Enno Charton: A significant challenge of this approach is the voluntary nature of compliance. Unlike traditional compliance departments that enforce rules and regulations, we rely on voluntary adherence to ethical principles. While the voluntary approach elevates ethics to a higher moral standard, it also means that there’s no direct mechanism for enforcement. This voluntary aspect can pose challenges in ensuring consistent adherence across all departments and levels of the organization. This also has to do with the culture and code of conduct we live by at Merck, a still largely family-owned company with a long-term, generational approach to doing responsible business.


…………………………………..

Dr. Jean Enno Charton, Director Bioethics & Digital Ethics, Merck KGaA

Dr. Jean Enno Charton is Director Digital Ethics & Bioethics at Merck. After a brief stint in the biotech industry, he has been with Merck since 2014 – first in the Research & Development department of the Healthcare division (Medical Affairs), later as Chief of Staff of the Chief Medical Officer Healthcare. Since 2019, he has built up the independent Digital Ethics & Bioethics department and is responsible for the topic across all divisions.

Jean Enno Charton studied biochemistry at the University of Tübingen and obtained his doctorate in cancer research at the University of Lausanne; his research experience includes stays at the Canadian Science Center for Human and Animal Health and Harvard Medical School.

Related Posts

On Generative AI. Interview with Philippe Kahn. ODBMS Industry Watch, June 19, 2023

………………………………………..

“Open source is reshaping the technological landscape, and this holds particularly true for AI applications. As we progress into AI, we will witness the proliferation of open-source systems, from large language models to advanced AI algorithms and improved database systems.”

Q1. What is your definition of a Vector Database?

Charles Xie: A vector database is a cutting-edge data infrastructure designed to manage unstructured data. When we refer to unstructured data, we specifically mean content like images, videos, and natural language. Using deep learning algorithms, this data can be transformed into a novel form that encapsulates its semantic representation. These representations, commonly known as vector embeddings or vectors, signify the semantic essence of the data. Once these vector embeddings are generated, we store them within a vector database, empowering us to perform semantic queries on the data. This capability is potent because, unlike traditional keyword-based searches, it allows us to delve into the semantics of unstructured data, such as images, videos, and textual content, offering a more nuanced and contextually rich search experience.
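The store-then-query flow described above can be illustrated with a toy in-memory store. This is a minimal sketch under stated assumptions: the three-dimensional vectors are hand-made stand-ins for embeddings a deep learning model would produce, `InMemoryVectorStore` is a hypothetical class rather than Milvus’s API, and the search is exact brute-force cosine similarity, whereas production vector databases such as Milvus typically rely on approximate nearest-neighbor indexes to stay fast at scale.

```python
import heapq
import math


class InMemoryVectorStore:
    """Toy vector store: exact (brute-force) cosine search over stored embeddings."""

    def __init__(self, dim: int):
        self.dim = dim
        self.ids: list[str] = []
        self.vectors: list[list[float]] = []

    @staticmethod
    def _normalize(v: list[float]) -> list[float]:
        n = math.sqrt(sum(x * x for x in v))
        if n == 0:
            raise ValueError("zero vector cannot be normalized")
        return [x / n for x in v]

    def insert(self, item_id: str, vector: list[float]) -> None:
        if len(vector) != self.dim:
            raise ValueError(f"expected dim {self.dim}, got {len(vector)}")
        self.ids.append(item_id)
        self.vectors.append(self._normalize(vector))  # store unit vectors

    def search(self, query: list[float], k: int = 1) -> list[tuple[str, float]]:
        """Return the k most semantically similar items as (id, cosine score)."""
        q = self._normalize(query)
        scored = (
            (sum(a * b for a, b in zip(q, v)), item_id)
            for item_id, v in zip(self.ids, self.vectors)
        )
        return [(item_id, s) for s, item_id in heapq.nlargest(k, scored)]


# Hand-made "embeddings" standing in for model output.
store = InMemoryVectorStore(dim=3)
store.insert("photo_of_cat", [0.9, 0.1, 0.0])
store.insert("photo_of_dog", [0.7, 0.3, 0.1])
store.insert("invoice_scan", [0.0, 0.2, 0.95])
results = store.search([1.0, 0.0, 0.0], k=2)  # a query embedding near "cat"
```

The query matches by direction in embedding space rather than by shared keywords, which is what makes semantic search over images or text possible.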

Q2. Currently, there are a multitude of vector databases on the market. Why do they come in so many versions?

Charles Xie: When you examine vector database systems, clear differences emerge. Some, like Chroma, adopt an embedded approach akin to SQLite, which offers simplicity but lacks essential capabilities such as scalability. Others, like pgvector and Pinecone, pursue a scale-up approach: they excel on a single node but are limited in how far they can scale.

As a seasoned database engineer with over two decades of experience, I want to stress the complexity inherent in database systems. Assessing them calls for a systematic approach that covers components such as storage layers, storage formats, data orchestration layers, query optimizers, and execution engines. With the rise of heterogeneous architectures, execution engines in particular must adapt to diverse hardware, from modern CPUs to GPUs.

From its inception, Milvus has embraced heterogeneous computing, running efficiently on a range of modern processors, including Intel and AMD CPUs, ARM CPUs, and Nvidia GPUs, and this support extends to vector processing for AI workloads. The challenge lies in tailoring algorithms and execution engines to each processor’s characteristics to ensure optimal performance. Scalability, which becomes unavoidable as data grows, is another crucial consideration; Milvus addresses it by supporting both scale-up and scale-out scenarios.

As vector databases gain prominence, vendors are drawn to them by their potential to reshape data management. For users, though, transitioning to a vector database calls for evaluating how critical it is to business functions and anticipating how data volumes will grow. Milvus stands out in both scenarios, offering consistent, optimal performance for mission-critical services and remarkable cost-effectiveness as data scales.

Q3. In your opinion when does it make sense to transition to a pure vector database? And when not?

Charles Xie: Now, let’s delve into the considerations for transitioning to a pure vector database. It’s crucial to clarify that a pure vector database isn’t merely a traditional database with a vector plugin; it’s a purposefully designed solution for handling vector embeddings.

There are two key factors to weigh. First, assess whether vector computing and similarity search are critical to your business. For instance, if you’re building a RAG (retrieval-augmented generation) solution that millions of users rely on daily and that forms the core of your business, the performance of vector computing becomes paramount. In such a situation, opting for a pure vector database system is advisable. It ensures consistent, optimal performance that meets your SLA requirements, which matters most for mission-critical services where performance is non-negotiable. Choosing a vector database system gives you a robust foundation and shields your regular database services from unforeseen surprises.

The second crucial consideration is the inevitable increase in data volume over time. As your service runs for an extended period, the likelihood of accumulating larger datasets grows. With the continuous expansion of data, cost optimization becomes an inevitable concern. Most pure vector database systems on the market, including Milvus, deliver superior performance while requiring fewer resources, making them highly cost-effective.

As your data volume escalates, optimizing costs becomes a priority. It’s common to see the bills for vector database services grow substantially with the dataset. In this context, Milvus stands out, being over 100 times more cost-effective than alternatives such as pgvector, OpenSearch, and other non-native vector database solutions. This advantage grows as your data scales, making Milvus a strategic choice for sustainable and efficient operations.

Q4. What is the initial feedback from users of Vector Databases?

Charles Xie: When we started six years ago, we focused primarily on enterprise users. At the time, we engaged with numerous users working on recommendation systems, e-commerce, and image recognition. Collaborations with traditional AI companies working on natural language processing, especially on substantial datasets, provided valuable insights.

The predominant feedback we received reflected the enterprise sector’s specific needs. These users had extensive datasets and teams of proficient developers. They stressed the need to deploy a highly available, performant vector database system in production, a requirement common in large enterprises where AI was gaining traction.

It’s important to note that independent AI developers were not as prevalent during that period. AI was predominantly in the hands of hyper-scalers and large enterprises, because developing AI algorithms and applications was very expensive. Around six years ago, they were the primary users of vector database systems, since they could afford dedicated teams of AI developers and engineers. This context shaped our initial focus and direction.

In the last two years, we’ve witnessed a remarkable shift in the landscape of AI, marked by the breakthrough of modern AI, particularly the prominence of large language models. Notably, there has been a significant surge in independent AI developers, with the majority comprising teams of fewer than five individuals. This starkly contrasts the scenario six years ago when the AI development scene was dominated by large enterprises capable of assembling teams of tens of engineers, often including a cadre of computer science PhDs, to drive AI application development.

The transformation is striking—what was once the exclusive realm of well-funded enterprises can now be undertaken by small teams or even individual developers. This democratization of AI applications marks a fundamental shift in accessibility and opportunities within the AI space.

Q5. Will semantic search be performed in the future by ChatGPT instead of using vectors and a K-nearest neighbor search?

Charles Xie: Indeed, foundation models such as ChatGPT and vector databases share a common theoretical underpinning: the embedding vector abstraction. Both ChatGPT and vector database systems use embedding vectors to capture the semantic essence of the underlying unstructured data. This shared data abstraction allows them to make sense of the information and perform queries effectively. Large language models, other AI models, and vector database systems are deeply connected through this same data abstraction: embedding vectors.

This connection extends further because they use the same metrics, primarily distance metrics such as Euclidean or cosine distance. Whether in ChatGPT, in other large language models, or in a vector database, these consistent metrics are how similarity between vector embeddings is measured.
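The two metrics are closely related. A small sketch, assuming vectors are normalized to unit length (a common but not universal practice), shows that squared Euclidean distance then equals exactly twice the cosine distance:

```python
import math
from typing import List


def normalize(v: List[float]) -> List[float]:
    """Scale a vector to unit length."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def euclidean_distance(a: List[float], b: List[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_distance(a: List[float], b: List[float]) -> float:
    """1 - cosine similarity: 0 for identical directions, up to 2 for opposite ones."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norms


a = normalize([3.0, 4.0])  # roughly [0.6, 0.8]
b = normalize([4.0, 3.0])  # roughly [0.8, 0.6]
d_euc = euclidean_distance(a, b)
d_cos = cosine_distance(a, b)

# For unit vectors, ||a - b||^2 = 2 * (1 - cos(a, b)).
print(round(d_euc ** 2, 6), round(2 * d_cos, 6))  # 0.08 0.08
```

Because the relationship is monotonic, both metrics rank neighbors identically on normalized data; on unnormalized vectors they can disagree, which is why the choice of metric has to match how the embeddings were produced.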

Theoretically, then, large language models like ChatGPT and vector databases are closely connected through their shared use of the embedding vector abstraction, and their workloads overlap: both can perform semantic and k-nearest neighbor searches. The noteworthy distinction lies in the cost efficiency of these operations.

While large language models and vector databases tackle the same tasks, the cost disparity is significant. Executing semantic search and k-nearest neighbor search in a vector database system proves to be approximately 100 times more cost-effective than carrying out these operations within a large language model. This substantial cost difference prompts many leading AI companies, including OpenAI, to advocate for using vector databases in AI applications for semantic search and k-nearest neighbor search due to their superior cost-effectiveness.

Q6. There seems to be a need among enterprises for a unified data management system that can support different workloads and applications. Is this doable in practice? If not, is there a risk of fragmentation across database offerings?

Charles Xie: No, I don’t think so. To illustrate my point, let’s consider the automobile industry. Can you envision a world where a single vehicle serves as an SUV, sedan, truck, and school bus all at once? This has not happened in the last 100 years of the automobile industry, and if anything, the industry will become even more diversified over the next 100 years.

It all started with the Model T; from this, we witnessed the birth of a great variety of automobiles commercialized for different purposes. On the road, we see lots of differences between SUVs, trucks, sports cars, and sedans, to name a few. A closer look at all these automobiles reveals that they are specialized and designed for specific situations.

For instance, SUVs and sedans are designed for family use, but their chassis and suspension systems are entirely different. SUVs typically have a higher chassis and a more advanced suspension system, allowing them to navigate obstacles more easily. On the other hand, sedans, designed for urban areas and high-speed driving on highways, have a lower chassis for a more comfortable driving experience. Each design serves a specific goal.

Database systems are no different: many of their design goals contradict each other. It’s challenging, if not impossible, to optimize a single design for all these diverse requirements. Therefore, the future of database systems lies in more purpose-built, specialized systems.

This trend has been evident over the past 20 years. Initially, we had traditional relational database systems; over time, we witnessed the emergence of big data solutions, the rise of NoSQL databases, the development of time-series, graph, and document database systems, and now the ascent of vector database systems.

On the other hand, some vendors may have an opportunity to provide a unified interface or SDK for accessing various underlying database systems, from vector databases to traditional relational databases.

At Milvus, we are actively working on this concept. In the next stage, we aim to develop an SQL-like interface tailored for vector similarity search in vector databases. We aim to incorporate vector database functionality under the same interface as traditional SQL, providing a unified experience.
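The interview does not describe Milvus’s planned interface, so the following is only an illustration of the general idea of expressing similarity search in SQL. It uses Python’s built-in `sqlite3` module and registers a hypothetical `cosine_sim` function so that an ordinary `ORDER BY ... LIMIT` query returns the most similar rows; storing vectors as JSON text is a simplification for the sketch.

```python
import json
import math
import sqlite3


def cosine_sim(a_json: str, b_json: str) -> float:
    """Cosine similarity between two vectors stored as JSON arrays of numbers."""
    a, b = json.loads(a_json), json.loads(b_json)
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms


conn = sqlite3.connect(":memory:")
# Expose the Python function to SQL under the (hypothetical) name cosine_sim.
conn.create_function("cosine_sim", 2, cosine_sim)

conn.execute("CREATE TABLE docs (id INTEGER PRIMARY KEY, title TEXT, embedding TEXT)")
conn.executemany(
    "INSERT INTO docs VALUES (?, ?, ?)",
    [
        (1, "vector search intro", json.dumps([0.9, 0.1, 0.0])),
        (2, "quarterly sales report", json.dumps([0.1, 0.9, 0.2])),
        (3, "index tuning guide", json.dumps([0.8, 0.2, 0.1])),
    ],
)

# Similarity search expressed as an ordinary SQL query.
query_vec = json.dumps([0.85, 0.15, 0.05])
top = conn.execute(
    "SELECT title, cosine_sim(embedding, ?) AS score "
    "FROM docs ORDER BY score DESC LIMIT 2",
    (query_vec,),
).fetchall()
for title, score in top:
    print(f"{title}: {score:.3f}")
```

This sketch evaluates the function on every row; a real vector database would back such a query with a vector index instead.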

Q7. What does the future hold for Vector databases?

Charles Xie: Indeed, we are poised to witness an expansion in the functionalities offered by vector database systems. In the past few years, these systems primarily focused on providing a single functionality: approximate nearest neighbor search (ANN search). However, the landscape is evolving, and in the next two years, we will see a broader array of functionalities.

Traditionally, vector databases supported similarity-based search. Now, they are extending their capabilities to include exact search or matching. You can analyze your data through two lenses: a similarity search for a broader understanding and an exact search for detailed insights. By combining these two approaches, users can fine-tune the balance between obtaining a high-level overview and delving into specific details.

In some situations, a rough sketch of the data is sufficient, and a semantic search works well. In others, where minute differences matter, users can zoom in on the data and scrutinize each entry for subtle features.
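One way to see the difference between the two lenses, reading “exact search” as an exhaustive nearest-neighbor scan, is to compare it with a simple approximate index. The sketch below assumes an IVF-style (inverted file) index, one common ANN technique, with two hand-picked centroids and a single probed bucket; the points and centroids are hypothetical. The approximate search inspects fewer vectors but misses the true nearest neighbor, which the exact scan finds.

```python
import math
from typing import Dict, List, Tuple

Vec = Tuple[float, float]

points: Dict[str, Vec] = {
    "p1": (4.9, 4.9),
    "p2": (9.0, 9.0),
    "p3": (1.0, 1.0),
    "p4": (8.0, 8.0),
}
# Hypothetical coarse centroids; a real IVF index would learn them (e.g. via k-means).
centroids: List[Vec] = [(0.0, 0.0), (10.0, 10.0)]


def nearest_centroid(v: Vec) -> int:
    return min(range(len(centroids)), key=lambda i: math.dist(v, centroids[i]))


# Inverted lists: centroid index -> ids of the points assigned to it.
buckets: Dict[int, List[str]] = {i: [] for i in range(len(centroids))}
for pid, vec in points.items():
    buckets[nearest_centroid(vec)].append(pid)


def exact_search(q: Vec) -> str:
    """Scan every stored vector: always correct, but cost grows with the data."""
    return min(points, key=lambda pid: math.dist(q, points[pid]))


def approximate_search(q: Vec) -> str:
    """Scan only the bucket of the query's nearest centroid: cheaper, may miss."""
    candidates = buckets[nearest_centroid(q)]
    if not candidates:  # fall back to a full scan if the probed bucket is empty
        return exact_search(q)
    return min(candidates, key=lambda pid: math.dist(q, points[pid]))


query = (5.2, 5.2)
print("exact:", exact_search(query))              # exact: p1
print("approximate:", approximate_search(query))  # approximate: p4
```

The query sits near the boundary between the two buckets, so its true nearest neighbor (`p1`) lives in the bucket the approximate search never probes. Probing more buckets trades speed back for accuracy.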

Vector databases will likely support additional vector computing workloads, such as vector clustering and classification. These functionalities are particularly relevant in applications like fraud detection and anomaly detection, where unsupervised learning techniques can be applied to cluster or classify vector embeddings, identifying common patterns.
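As a rough illustration of clustering for anomaly detection, the sketch below runs plain k-means (Lloyd’s algorithm) on toy two-dimensional “embeddings” and flags any point that lies far from every cluster centre. The data, the initial centroids and the `THRESHOLD` value are hypothetical; a production system would work with high-dimensional embeddings and tune the cutoff on real data.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float]


def kmeans(points: List[Vec], init: List[Vec], iterations: int = 10) -> List[Vec]:
    """Plain Lloyd's k-means: assign each point to its nearest centroid, then recompute means."""
    centroids = list(init)
    for _ in range(iterations):
        clusters: List[List[Vec]] = [[] for _ in centroids]
        for p in points:
            idx = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[idx].append(p)
        # Keep the old centroid if a cluster ends up empty.
        centroids = [
            (sum(x for x, _ in c) / len(c), sum(y for _, y in c) / len(c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids


# Toy 2-D "transaction embeddings": two normal behaviour patterns plus one outlier.
embeddings: List[Vec] = [(1.0, 1.1), (0.9, 1.0), (1.1, 0.9),
                         (5.0, 5.1), (5.2, 4.9), (4.9, 5.0),
                         (9.0, 0.5)]
centroids = kmeans(embeddings, init=[embeddings[0], embeddings[3]])


def distance_to_nearest_centroid(p: Vec) -> float:
    return min(math.dist(p, c) for c in centroids)


THRESHOLD = 2.0  # hypothetical cutoff; in practice tuned on historical data
anomalies = [p for p in embeddings if distance_to_nearest_centroid(p) > THRESHOLD]
print(anomalies)  # [(9.0, 0.5)]
```

Note that the outlier still gets assigned to a cluster and pulls its centroid slightly; the distance check afterwards is what separates “unusual” from “normal” members.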

Q8. And how do you believe the market for open source Vector databases will evolve?

Charles Xie: Open source is reshaping the technological landscape, and this holds particularly true for AI applications. As we progress into AI, we will witness the proliferation of open-source systems, from large language models to advanced AI algorithms and improved database systems. The significance of open source extends beyond mere technological innovation; it exerts a profound impact on our world’s social and economic fabric. In the era of modern AI, with the dominance of large language models, open-source models and open-source vector databases are positioned to emerge victorious, shaping the future of technology and its societal implications.

Q9. In conclusion, are Vector databases transforming the general landscape, not just AI?

Charles Xie: Indeed, vector databases represent a revolutionary technology poised to redefine how humanity perceives and processes data. They are the key to unlocking the vast troves of unstructured data that constitute over 80% of the world’s data. The promise of vector database technology lies in its ability to unleash the hidden value within unstructured data, paving the way for transformative advancements in our understanding and utilization of information.

………………………………………………..

Charles Xie is the founder and CEO of Zilliz, focusing on building next-generation databases and search technologies for AI and LLM applications. At Zilliz, he also invented Milvus, the world’s most popular open-source vector database for production-ready AI. He is currently a board member of the LF AI & Data Foundation and served as the board’s chairperson in 2020 and 2021. Charles previously worked at Oracle as a founding engineer of the Oracle 12c cloud database project. Charles holds a master’s degree in computer science from the University of Wisconsin-Madison.

Related Posts

On Zilliz Cloud, a Fully Managed AI-Native Vector Database. Q&A with James Luan. ODBMS.org, June 15, 2023

On Vector Databases and Gen AI. Q&A with Frank Liu. ODBMS.org, December 8, 2023

“ Five years from now, today’s AI systems will look archaic to us. In the same way that computers of the 60s look archaic to us today. What will happen with AI is that it will scale and therefore become simpler, and more intuitive. And if you think about it, scaling AI is the best way to make it more democratic, more accessible.“

Q1. What are the innovations that most surprised you in 2023?

Raj Verma: Generative AI is definitely the talk of the town right now. 2023 marked its breakthrough, and I think the hype around it is well founded. Few people knew what generative AI was before 2023; now everyone’s talking about it and using it. So I was quite impressed by the uptake of this new technology.

But if we go deeper, we have to acknowledge that the rise of AI would not have been possible without significant advancements in how large amounts of data are stored and handled. Data is the core of AI and what is used to train LLMs. Without data, AI is useless. To have powerful generative AI that gives you answers, predictions and content right at the moment you need it, you need real-time data, or data that is fresh, in motion and delivered in a matter of milliseconds. The interpretation and categorization of data are therefore crucial in powering LLMs and AI systems.

In that sense, you will notice a lot of hype around Specialized Vector Databases (SVDB), which are independent systems that you plug into your data architecture designed to store, index and retrieve vectors, or multidimensional data points. These are popular because LLMs are increasingly relying on vector data. Think of vectors as an image or a text converted into a stored data point. When you prompt an AI system, it will look for similarities in those stored data points, or vectors, to give you an answer. So vectors are really important for AI systems and businesses often believe that a database focused on just storing and processing vector data is essential for AI systems.

However, you don’t really need SVDBs to power your AI applications. In fact, many companies have come to regret using them because, as independent systems, they result in redundant data, excessive data movement, rising labor and licensing costs, and limited query power.

The solution is to store all your data (structured data, semi-structured JSON data, time-series, full-text, spatial, key-value, and vector data) in one database, and to use that system’s built-in vector functionality to run vector similarity search.

All this to say that I’ve been impressed by the speed at which we are developing ways to power generative AI. We’re experimenting based on its needs and quickly figuring out what works and what doesn’t.

Q2. What is real-time data and why is it essential for AI?

Raj Verma: Real time is about what we experience in the now. It is access to the information you need, at the moment you need it, delivered together with the exact context you need to make the best decision. To experience the now, you need real-time data: data that is fresh and in motion. And with AI, the need for real-time data (fast, updated and accurate data) is becoming more apparent, because without data, AI is useless. When AI models are trained on outdated or stale data, you get problems such as AI bias or hallucinations. So, to have AI that is powerful and can really help us make better choices, we need real-time data.

With the use of generative AI expanding beyond the tech industry, the need for real-time data is more urgent than ever. This is why it is important to have databases that can handle storage, access and contextualization of information. At SingleStore, our vision is that databases should support both transactional (OLTP) and analytical (OLAP) workloads, so that you can transact without moving data and put it in the right context — all of which can be delivered in millisecond response times.

Q3. One of the biggest concerns around AI is bias, the idea that existing prejudices in the data used to train AI might creep into its decisions, content and predictions. What can we do to mitigate this risk?

Raj Verma: I believe humans should always be involved in the training process. With AI, we must be both student and teacher, allowing it to learn from us, and in that way continuously give it input so that it can give us the insight we need. There are many laudable efforts to develop Hybrid Human AI models, which basically incorporate human insight with machine learning. Examples of hybrid AI include systems in which humans monitor AI processes through auditing or verification. Hybrid models can help businesses in several ways. For example, while AI can analyze consumer data and preferences, humans can jump in to guide how it uses that insight to create relevant and engaging content.

As developers, we must also be very cognizant of where the data used to train LLMs comes from. Being transparent about its origin helps, because the systems can then be held accountable and challenged if biased data does creep into the training process. The important thing here is also to know that an AI system is only as good as the data it is trained on.

Q4. The popularity and accessibility of generative artificial intelligence (gen AI) has made it feel like the future we see in science fiction movies is finally at our doorstep. And those science fiction movies have sowed much worry about AI being dangerous. Is this science fiction vision of AI coming true?

Raj Verma: Don’t expect machines to take over the world, at least not any time soon. AI systems can process and analyze large amounts of data and generate content based on it at a much faster pace than we humans can, but they are still very dependent on human input. The idea that human-like robots will come to rule the world makes for great science fiction movies, but it’s far from becoming a reality.

That doesn’t mean that AI isn’t dangerous, and we have a responsibility to discern which threats are real.

AI poses an unprecedented risk of fueling the spread of disinformation because it can create authentic-looking content. Distinguishing between content generated by AI and content created by humans will become increasingly challenging. AI can also pose cybersecurity threats: you can trick ChatGPT into writing malicious code, or use other generative AI systems to enhance ransomware. And AI can worsen malicious trends that have surfaced with social media. I personally worry that AI systems will exploit the attention economy and spur higher levels of social media addiction. This can have terrible consequences for teenagers’ mental health. As a father of two, I am deeply concerned about this.

These are the threats that we should worry about. And we humans are capable of mitigating these risks. We should always be involved in AI’s development, audit it and pay special attention to the data that we use to train it.

Q5. You are quoted as saying that “without data, AI wouldn’t exist, but with bad or incorrect data, it can be dangerous.” How dangerous can AI be?

Raj Verma: Generative AI is like a superhuman who reads an entire library of thousands of books to answer your question, all in a matter of seconds. If it doesn’t have access to that library, and if that library doesn’t have the latest books, magazines and newspapers, then it cannot give you the most relevant information you need to make the best possible decision. This is a very simple explanation of why, without data, AI is useless. Now imagine that library is full of outdated books written by white supremacists during the Civil War. The information you get from this AI system will guide your decisions, and you will make some very bad ones. You will make biased decisions and perpetuate biases that already exist in society. That’s how AI can be dangerous, and that is why we need AI systems to have access to the most up-to-date, accurate data available.

Q6. Should AI be regulated? And if so, what kind of regulation?

Raj Verma: The issue is that it’s hard to regulate something that is still developing. We just don’t know what AI will look like, in its entirety, in the future, so we want to avoid regulation that hampers the development of this technology. That doesn’t mean there aren’t standards that can be applied globally. Data regulation is key, since data is the backbone of AI. It can be based on the principle of transparency, which is key to generating trust in AI and to our ability to hold this technology and its developers accountable should something go wrong. To achieve transparency, you need to know where the data in the AI system comes from, so proper documentation of the data used to train LLMs is something we can regulate. You also must be able to explain the reasoning behind an AI system’s solutions or decisions, and these explanations must be understandable by humans. There is also transparency in how you present the AI system to users: do users know that they are talking to an AI robot and not a human? We can regulate data transparency without imposing excessive measures that could hamper AI’s development.

Q7. There is no global approach to AI regulation. Countries around the world are at various stages of developing their approach to regulating AI. What are the practical consequences of this?

Raj Verma: Regulating AI on a global scale is incredibly challenging. Each country’s social values will be reflected in the way it approaches regulating this new technology. The EU has a very strong approach to consumer protection and privacy, which is probably why it authored the world’s first significant, wide-ranging attempt to regulate AI. I don’t believe we will see such sweeping legislation in the US, a country that values innovation and market dynamics. In the US, we will likely see a decentralized approach to regulation, perhaps with some specific decrees that regulate AI’s use in particular industries, like healthcare or finance.

Many worry that the EU’s new AI Act will become another poster child of the Brussels effect, where firms end up adopting the EU’s regulation, in the absence of any other, because it saves costs. Yet the Brussels effect might not quite happen with the AI Act, particularly because firms may want to use different algorithms in different regions in the first place. For example, marketing companies will want to use different algorithms for different geographic areas because consumers behave differently depending on where they live. It won’t be hard, then, for firms to make their different algorithms comply with different rules in different regions.

All this to say that we should expect different AI regimes around the world. Companies should prepare for that. AI trade friction with Europe is likely to emerge, and private companies will advance their own “responsible AI” initiatives as they face a fragmented global AI regulatory landscape.

Q8. How can we improve the way we gather data to feed LLMs?

Raj Verma: We need to make sure LLMs are up to date. Open-source LLMs trained on large, publicly available datasets are prone to hallucinate because at least part of their data is outdated and probably biased. There are ways to fix this problem, including Retrieval Augmented Generation (RAG), a technique that uses a program to retrieve contextual information from outside the model and feed it to the AI system at query time. Think of it as an open-book test in which the AI model, with the help of a retrieval program, can look up information in the book (the external data) that is specific to the question it is being asked. This is a very cost-effective way of keeping LLMs current, because you don’t need to retrain them all the time and can supply case-specific context in the prompt.
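The retrieve-then-prompt flow of RAG can be sketched in a few lines of Python. This is an illustration under loud assumptions: a bag-of-words count vector stands in for a learned embedding model, the three documents are hypothetical, and the sketch stops at building the augmented prompt rather than calling any particular LLM API.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Stand-in 'embedding': bag-of-words counts (a real system would use a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Hypothetical external knowledge that the model was never trained on.
documents = [
    "The 2024 price list sets the premium plan at 40 dollars per month.",
    "Our office is closed on public holidays.",
    "Support tickets are answered within one business day.",
]


def retrieve(question: str, k: int = 1) -> List[str]:
    """Return the k documents most similar to the question."""
    q = embed(question)
    return sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(question: str) -> str:
    """Augment the question with retrieved context before it is sent to an LLM."""
    context = "\n".join(retrieve(question))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {question}"


prompt = build_prompt("How much does the premium plan cost per month?")
print(prompt)
```

Updating the model’s knowledge here means editing `documents`, not retraining anything, which is the cost advantage described above.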

RAG is central to how we at SingleStore are bringing LLMs up to date. To curate data in real time, it needs to be stored as vectors, which SingleStore allows users to do. That way you can join all kinds of data and deliver the specific data you need in a matter of milliseconds.

Q9. What is the evolutionary path you think AI will go through? When we look back 5-10 years from now, how will we look at genAI systems like ChatGPT?

Raj Verma: Five years from now, today’s AI systems will look archaic to us. In the same way that computers of the 60s look archaic to us today. What will happen with AI is that it will scale and therefore become simpler, and more intuitive. And if you think about it, scaling AI is the best way to make it more democratic, more accessible. That is the challenge we have in front of us, scaling AI, so that it works seamlessly in giving us the exact insight we need to improve our choices. I believe this scaling process should revolve around information, context and choice, what I call the trinity of intelligence. These are the three tenets that differentiate AI from previous groundbreaking technologies. They are also what help us experience the now in a way that we are empowered to make the best choices. Because this is our vision at SingleStore, we focus on developing a multi-generational platform which you can use to transact and reason with data in millisecond response times. We believe this is the way to make AI more powerful because with more precise databases that can deliver information in real time, we can power the AI systems that will really help us make the best choices as humans.

………………………………………..

Raj Verma is the CEO of SingleStore.

He brings more than 25 years of global experience in enterprise software and operating at scale. Raj was instrumental in the growth of TIBCO software to over $1 billion in revenue, serving as CMO, EVP Global Sales, and COO. He was also formerly COO at Apttus Software and Hortonworks. Raj earned his bachelor’s degree in Computer Science from BMS College of Engineering in Bangalore, India.

Related Posts

Achieving Scale Through Simplicity + the Future of AI. Raj Verma. October 31, 2023

On Generative AI. Interview with Maharaj Mukherjee. ODBMS Industry Watch, December 10, 2023

On Generative AI and Databases. Interview with Adam Prout. ODBMS Industry Watch, October 9, 2023

__________________

“Managing change is one of the dimensions an organization needs to address to reap the full benefits of Generative AI. It needs to make change, adaptation and redeployment easy on its workforce so that people do not feel threatened by this new technology.”

Q1. Generative AI applications like ChatGPT, DALL-E, Stable Diffusion and others are said to be rapidly democratizing the technology in business and society. Is this really happening?

Mukherjee: Democratization in the AI/ML area has been happening for some time. It is a slow, evolutionary process. It started with the advent of AutoML tools, whereby model building moved very quickly from the expertise of data scientists to the purview of anyone, at the touch of a button. But except for some areas such as face recognition, it has been out of reach for most people. Now, with the arrival of generative AI built on large foundation models and large language models, the doors have been opened for the public to experiment with AI in all kinds of ways. But I do not think it will stop here; we have yet to see all the ways people can use these new tools that have suddenly become available to them. These use cases will now drive newer types of research and innovation in the AI field.

Q2. What industries do you believe will be most impacted by LLMs and Generative AI? Why?

Mukherjee: In my humble opinion, the arts and entertainment industry and the advertising industry will be the first adopters of this technology, and that is already happening. Adoption will happen more slowly in areas that require more specialized knowledge, such as science, technology, and engineering, and in more heavily regulated industries such as healthcare and pharmaceuticals.

Q3. What kind of infrastructure will be essential for deploying generative AI?

Mukherjee: The current barriers to early adoption for any small organization are twofold. One is the availability of specialized hardware such as GPUs; the other is the availability of skilled data scientists and programmers who can apply the best-known algorithms on the best available hardware to make it work. These two limitations keep these technologies out of reach for most companies except a few very large organizations. But I think it is a matter of time, and as the technology scales, it will come within reach of almost every organization.

Q4. How will companies prevent the breach of third-party copyright in using pre-trained foundation models?

Mukherjee: That is a major concern for existing LLM and Gen-AI models. However, many organizations are already building models based on Retrieval Augmented Generation (RAG), which can address copyright violations and other ethical and legal issues. Another way to handle such violations is to tag generation and retrieval to the original source by keeping a record of all intermediate steps, using methods such as blockchain.
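Keeping a tamper-evident record of intermediate steps does not require a full blockchain; its core data structure is a hash chain. The sketch below assumes each step (retrieval, generation) is logged with a source identifier and a SHA-256 digest of its content, and that each entry commits to the previous entry’s hash, so altering an earlier record becomes detectable. The source identifiers and the `ProvenanceLog` class are hypothetical, and there is no distribution or consensus mechanism here.

```python
import hashlib
import json
from typing import Dict, List


def record_hash(record: Dict[str, str]) -> str:
    """Hash a canonical JSON encoding so field order does not change the digest."""
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode("utf-8")).hexdigest()


class ProvenanceLog:
    """Append-only log in which each entry commits to the previous entry's hash."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: List[Dict[str, str]] = []

    def append(self, step: str, source: str, content: str) -> None:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {
            "step": step,
            "source": source,
            "content_sha256": hashlib.sha256(content.encode("utf-8")).hexdigest(),
            "prev_hash": prev,
        }
        self.entries.append({**body, "hash": record_hash(body)})

    def verify(self) -> bool:
        """Recompute every hash and check each link back to the previous entry."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev or record_hash(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = ProvenanceLog()
log.append("retrieve", "doc://licensed-article-17", "retrieved passage text")
log.append("generate", "model://example-llm", "generated answer text")
print(log.verify())  # True

# Tampering with an earlier entry breaks the chain.
log.entries[0]["source"] = "doc://unknown"
print(log.verify())  # False
```

Each answer can then be traced back through the log to the sources it drew on, and any later edit to that trail is detectable.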

Q5. What about trust in generative AI? How can you ensure the accuracy of generative AI outputs and maintain user confidence?

Mukherjee: A model is only as good as the results it generates. The problem of errors is not new to AI and ML, and calling them by an anthropomorphic term such as “hallucinations” does not make them any different. Sometimes errors are introduced deliberately, as a safety measure, in the form of a bias in the model. As more and more people get used to these fundamental limitations of AI, they will adjust their expectations and find the best way to use these models.

Q6. LLMs may generate algorithmic bias due to imperfect training data or decisions implicitly or explicitly made by the engineers developing the models. What is your take on this?

Mukherjee: Quality of data has always been an issue with AI/ML models, and it is not very different in the age of generative AI and LLMs. It is always a case of “Garbage In – Garbage Out”. In traditional AI/ML model development, engineers have been culling and engineering data to suit their goals and needs. But engineering bias often seeps in through how the data is selected, and consequently some human biases are introduced into the model. In the realm of Generative AI, the philosophy is slightly different from traditional AI. Since it is built on any and all kinds of data, the initial data bias may not be an issue here. However, we still need to make sure that the output follows our societal norms and principles and is not harmful in general.

Q7. Will change management be critical to implementing generative AI?

Mukherjee: Managing change is one of the dimensions an organization may need to address to reap the full benefits of Generative AI. It needs to make change, adaptation, and redeployment easy on its workforce so that people do not feel threatened by this new technology.

Q8. How is AI regulation going to have an impact when it comes to harnessing the opportunities of generative AI?

Mukherjee: As with any human technology, Generative AI needs to conform to our accepted societal principles, norms, and moralities. If technologists and the industry cannot regulate themselves, there is a risk that regulations may be imposed on them by outsiders who may not have as much knowledge and understanding of the technology. It is better, therefore, that developers of GenAI step back, spend some time figuring out how to do that, and impose certain standard checks and balances on themselves.

Q10. If it were up to you, would you use generative AI in mission-critical applications?

Mukherjee: I would repeat what I said before: GenAI is not fundamentally different from traditional AI. Any area where people have used traditional AI in the past may consider adopting Generative AI, or at least explore it as a possibility.

……………………………………….

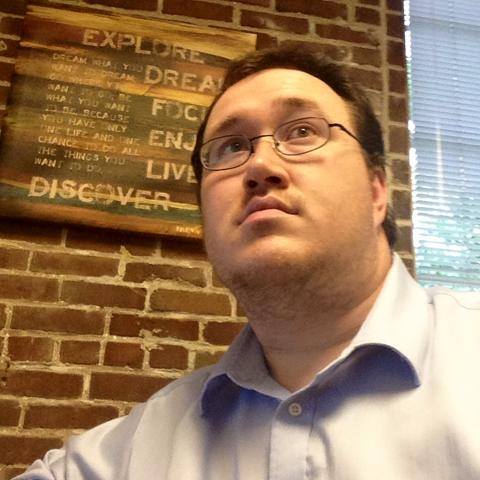

Maharaj Mukherjee, Senior Vice President and Senior Architect Lead, Bank of America

A well-recognized expert in cutting-edge technologies including Edge and Massively Distributed Computing, Artificial Intelligence and Machine Learning, Cognitive Deep Learning, Blockchain, and Internet-of-Things. He currently works at Bank of America as Senior Vice President and Senior Architect Lead in the Technology Infrastructure Organization, and previously worked as Senior AI/ML Architect and SVP in Employee Experience Technology within Bank of America. Before Bank of America, Maharaj Mukherjee worked for twenty years at IBM Research on various leading-edge technologies including Shape Processing Engine, Computational Lithography, Design for Manufacturing, Deep and Cognitive Machine Learning, and Watson Internet of Things. Maharaj Mukherjee is an IBM Master Inventor Emeritus and has 162 US patents and 160 international patents to his credit. He was also recognized as a top inventor at Bank of America in 2020 and 2021, and received the Platinum Award from Bank of America for being one of the top three inventors in 2021. He was recognized by IBM for “Twenty Patents from the Past Twenty Years” in 2015 and was inducted into IBM’s Inventor Wall of Fame in 2011.

He holds a PhD from Rensselaer Polytechnic Institute, an MS from SUNY Stony Brook, and a B-Tech (Hons.) from the Indian Institute of Technology, Kharagpur.

He currently serves as a member of the Institute of Electrical and Electronics Engineers (IEEE) USA Awards Committee and of the IEEE Region 1 Awards Committee. He is also the current chair of the Central Area of IEEE USA.

Related Posts

On Generative AI and Databases. Interview with Adam Prout, ODBMS Industry Watch, October 9, 2023

On Generative AI. Interview with Philippe Kahn, ODBMS Industry Watch, June 19, 2023


“With GenAI also requiring massive amounts of training data, the need for greater storage capacity is crucial. Databases are designed to scale as data volumes grow, ensuring generative AI projects can handle larger datasets as they become available. This means databases can help support the growing demand for AI capabilities across the business world.”

Q1. How is Generative AI transforming the way we store, structure, and query data?

Adam Prout: The focus of generative AI is to create new data, such as text and images. At its core, GenAI is built on neural networks, a subset of machine learning that handles unstructured data like text, audio, images, and videos. These networks consist of connected layers that learn from training data and identify patterns in order to produce new instances. They do not create copies of existing instances in the dataset; instead, they generate unique data points based on what they learned from the training data. Increased computational power and the massive amounts of data produced in recent years have paved the way for generative AI.

Due to advancements in GenAI, many organizations are exploring ways the technology can increase efficiency in their operations. For example, generative AI can help data analysts find hidden patterns in datasets, deriving actionable insights faster than a human could. In other cases, data augmentation helps organizations generate more data to train neural networks. Models like generative adversarial networks (GANs) can learn the distribution of the original data and create synthetic data that augments and diversifies training datasets for machine learning models. Likewise, content creation is a significant use case for generative AI, as organizations can rapidly create reports, summaries, and other deliverables from proprietary data.
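Training a GAN requires a deep learning framework, so the sketch below swaps in a much simpler generative model to show the same augmentation idea: learn the distribution of the original data, then sample new synthetic rows from it. It fits an independent Gaussian to each numeric column, an assumption made for brevity that ignores correlations between columns. The function names and sample rows are hypothetical.

```python
import random
import statistics


def fit_columns(rows):
    """Estimate a (mean, standard deviation) pair for each numeric column.

    statistics.stdev needs at least two rows.
    """
    columns = list(zip(*rows))
    return [(statistics.fmean(col), statistics.stdev(col)) for col in columns]


def synthesize(rows, n, seed=0):
    """Sample n synthetic rows from per-column Gaussians fitted to rows.

    The samples follow the learned distribution but are not copies of the
    original rows. Columns are treated as independent for simplicity.
    """
    params = fit_columns(rows)
    rng = random.Random(seed)  # seeded for reproducible augmentation
    return [tuple(rng.gauss(mu, sigma) for mu, sigma in params) for _ in range(n)]


if __name__ == "__main__":
    original = [(5.1, 3.5), (4.9, 3.0), (6.2, 2.9), (5.9, 3.2), (5.5, 2.8)]
    augmented = original + synthesize(original, n=5)
    print(len(augmented))  # 10
```

A GAN replaces the per-column Gaussians with a learned generator network, which can capture far richer structure, but the workflow of "fit a distribution, then sample from it" is the same.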

As for querying data, we can now ask questions of our data in natural language, which is more efficient than writing a SQL query or running a full-text search. As a result of GenAI, more data is being stored as vector embeddings in databases and looked up via Approximate Nearest Neighbor (ANN) vector search.
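ANN lookup can be illustrated with random-hyperplane locality-sensitive hashing (LSH), one classic ANN technique. This is a minimal sketch, not how any particular database implements it; production systems commonly use other index structures such as HNSW graphs or IVF clustering. The class name and its parameters (`n_planes`, `n_tables`) are hypothetical. Vectors that fall on the same side of every random hyperplane share a bucket, so a query scores only the candidates in its buckets instead of the whole collection, trading a little recall for speed.

```python
import math
import random
from collections import defaultdict


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class HyperplaneLSH:
    """Approximate nearest-neighbour index using random-hyperplane hashing."""

    def __init__(self, dim, n_planes=8, n_tables=4, seed=0):
        rng = random.Random(seed)
        self.vectors = {}
        # Each table has its own random hyperplanes and its own buckets;
        # more tables raise recall at the cost of memory and query time.
        self.tables = []
        for _ in range(n_tables):
            planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_planes)]
            self.tables.append((planes, defaultdict(list)))

    @staticmethod
    def _signature(planes, v):
        # One bit per hyperplane: which side of the plane the vector lies on.
        return tuple(sum(p * x for p, x in zip(plane, v)) >= 0 for plane in planes)

    def add(self, key, v):
        self.vectors[key] = v
        for planes, buckets in self.tables:
            buckets[self._signature(planes, v)].append(key)

    def query(self, q, k=3):
        """Return up to k keys, ranked by exact cosine over the candidates only."""
        candidates = set()
        for planes, buckets in self.tables:
            candidates.update(buckets.get(self._signature(planes, q), []))
        ranked = sorted(candidates, key=lambda key: cosine(self.vectors[key], q), reverse=True)
        return ranked[:k]


if __name__ == "__main__":
    rng = random.Random(42)
    index = HyperplaneLSH(dim=16)
    data = {i: [rng.gauss(0.0, 1.0) for _ in range(16)] for i in range(1000)}
    for key, vec in data.items():
        index.add(key, vec)
    # Query with a slightly perturbed copy of item 7; item 7 is usually ranked first.
    noisy = [x + rng.gauss(0.0, 0.05) for x in data[7]]
    print(index.query(noisy, k=3))
```

The "approximate" part is visible in `query`: a true neighbour that lands in a different bucket in every table is never scored, which is the price paid for not scanning all vectors.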

There are many more ways that generative AI helps organizations better leverage their existing data while generating original instances. We’ll continue to discover how generative AI can transform the way we store, structure, and query data for years to come.

Q2. Generative AI relies on large amounts of data to generate human-like answers. Among the challenges faced by generative AI are Data Quality and Quantity. How can a database help here?

Adam Prout: Databases provide a structured framework for data storage, allowing organizations to implement routine data quality checks and validation rules to ensure models are only trained on high-quality information. Another advantage of using a database is the consistent maintenance of data through cleansing and enrichment tools. These processes remove inconsistencies, duplicates, and errors from the data, leading to better model training and improved generative AI outputs.
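As a minimal sketch of how a database can enforce validation rules and de-duplication, the example below uses Python's standard-library `sqlite3` module. The table name, the 20-character minimum, the allowed language codes, and the whitespace and case normalization are hypothetical choices for illustration. `CHECK` constraints reject malformed rows, and a `UNIQUE` constraint on a content hash rejects duplicates, so only rows that pass every rule reach the training set.

```python
import hashlib
import sqlite3

SCHEMA = """
CREATE TABLE training_docs (
    id       INTEGER PRIMARY KEY,
    text     TEXT NOT NULL CHECK (length(text) >= 20),        -- reject empty or trivial rows
    language TEXT NOT NULL CHECK (language IN ('en', 'de', 'fr')),
    sha256   TEXT NOT NULL UNIQUE                             -- reject duplicates
)
"""


def open_store():
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    return conn


def ingest(conn, text, language):
    """Cleanse one document and insert it; return False if any rule rejects it."""
    cleaned = " ".join(text.split())  # cleansing step: collapse runs of whitespace
    # Hash the case-folded text so trivially different copies count as duplicates.
    digest = hashlib.sha256(cleaned.casefold().encode("utf-8")).hexdigest()
    try:
        with conn:  # commits on success, rolls back if a constraint fails
            conn.execute(
                "INSERT INTO training_docs (text, language, sha256) VALUES (?, ?, ?)",
                (cleaned, language, digest),
            )
        return True
    except sqlite3.IntegrityError:
        return False


if __name__ == "__main__":
    conn = open_store()
    print(ingest(conn, "Quarterly revenue grew year over year.", "en"))    # True
    print(ingest(conn, "quarterly  revenue grew YEAR over year.", "en"))   # False: duplicate
    print(ingest(conn, "ok", "en"))                                         # False: too short
    print(ingest(conn, "Der Umsatz stieg im Quartal deutlich an.", "xx"))  # False: language
    print(conn.execute("SELECT COUNT(*) FROM training_docs").fetchone()[0])  # 1
```

Pushing the rules into the schema means every ingestion path is held to the same standard, rather than relying on each pipeline to re-implement the checks.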

With GenAI also requiring massive amounts of training data, the need for greater storage capacity is crucial. Databases are designed to scale as data volumes grow, ensuring generative AI projects can handle larger datasets as they become available. This means databases can help support the growing demand for AI capabilities across the business world.

Q3. Unlike traditional AI workloads that require additional specialized skills, new Generative AI workloads are available to a larger segment of the developer community. What does it mean in practice?

Adam Prout: In practice, this is great news. More software developers are able to leverage generative AI tools to increase efficiency and solve simple, clearly defined problems. And with a growing number of advanced AI code-generation tools on the market, developers can experiment with these technologies to create artificial data and test their code.

It’s no surprise that developers will play a key role in the GenAI revolution. Their expertise and skill sets are vital to improving the performance of AI and machine learning models, and they’ll be able to pivot successfully toward AI development as the demand for AI/ML skills skyrockets.

Q4. Generative AI: How to Choose the Optimal Database?

Adam Prout: When selecting the right database for AI and machine learning models, organizations need to take into account several considerations:

- Speed of data processing: The ability to handle large volumes of data while processing information quickly can help organizations gain real-time insights to drive decision-making. This is especially true when working with streaming data or developing applications that require quick response times, such as fraud detection or recommendation systems. A database built on a distributed architecture with an in-memory data store can process data at lightning-fast speed, helping organizations make fast and informed decisions.

- Vector search: Vector search handles high-dimensional data and provides advanced search and similarity capabilities, which helps organizations simplify data management. Because each vector encodes data across multiple features, organizations can store and search high-dimensional vectors efficiently. This capability helps organizations build more accurate and effective machine learning models, since relevant records can be retrieved by similarity from large, comprehensive datasets.

- Scalability and integration: As AI requires more computing power and training data, selecting the right database becomes even more important for organizations building out their capabilities. Massive AI projects need a database that can handle complex queries at scale while helping extract and transform data to train AI/ML platforms. A highly scalable database can help companies meet increasing demands for AI-powered workloads, and general-purpose databases are flexible enough to handle a wide range of data.

- Real-time analytics capabilities: Databases with built-in analytics capabilities can help organizations quickly identify trends and patterns in their data to make more informed and instantaneous decisions. The ability to run analytical queries paired with transactional ones in the same database system, known as hybrid transactional/analytical processing (HTAP), can eliminate the need for separate systems to complete tasks — simplifying the data architecture and reducing costs. This also offers greater flexibility as organizations look to adopt more AI capabilities into their operations.
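The HTAP idea of serving transactional and analytical queries from one system can be sketched, in miniature, with Python's built-in `sqlite3` module. SQLite is not an HTAP engine, and dedicated HTAP databases add storage and concurrency machinery to do this at scale; the example only shows the pattern of running an aggregate over freshly committed transactional rows with no copy to a separate analytics store. The `payments` table, account names, and threshold are hypothetical.

```python
import sqlite3


def open_db():
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE payments ("
        "id INTEGER PRIMARY KEY, "
        "account TEXT NOT NULL, "
        "amount REAL NOT NULL CHECK (amount > 0))"
    )
    return conn


def record_payment(conn, account, amount):
    """Transactional write: committed atomically, rolled back on error."""
    with conn:
        conn.execute("INSERT INTO payments (account, amount) VALUES (?, ?)", (account, amount))


def accounts_above(conn, threshold):
    """Analytical read over the same table the writes just committed to."""
    return conn.execute(
        "SELECT account, SUM(amount) AS total FROM payments "
        "GROUP BY account HAVING SUM(amount) > ? ORDER BY total DESC",
        (threshold,),
    ).fetchall()


if __name__ == "__main__":
    conn = open_db()
    for account, amount in [("acct-1", 40.0), ("acct-2", 250.0), ("acct-1", 75.5), ("acct-3", 20.0)]:
        record_payment(conn, account, amount)
    print(accounts_above(conn, 100))  # [('acct-2', 250.0), ('acct-1', 115.5)]
```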

Q5. Are NoSQL databases better suited for Generative AI than SQL databases?

Adam Prout: NoSQL and SQL databases each have their own strengths and weaknesses, and which one works best for Generative AI depends on what your project needs. NoSQL databases inherently come with more flexibility when it comes to handling unstructured or semi-structured data, which can be beneficial for certain types of data used in Generative AI – think text, images, and sensor data. As for SQL databases, they provide powerful query capabilities, enabling IT leaders to perform complex data retrieval and analysis.

To put it simply, many GenAI projects use a combination of both types of databases, leveraging the strengths of each. When choosing which database to utilize, it’s critical to evaluate the needs and constraints of your project.

Q6. Some SQL databases do have some features that make them compatible with Generative AI, such as supporting JSON data and functions. Are they suited for Generative AI?

Adam Prout: SQL databases that support features like JSON can be well-suited for certain aspects of Generative AI, particularly when dealing with flexible or semi-structured data formats. The benefits these features provide include schema flexibility, data integration, complex querying, and scalability.
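To illustrate JSON support in a SQL database, the sketch below stores a fixed attribute in an ordinary relational column and flexible, semi-structured metadata in a JSON column, then filters on a field inside the JSON with plain SQL. It assumes Python's `sqlite3` is linked against an SQLite build with the JSON functions (`json_valid`, `json_extract`), which are built in by default from SQLite 3.38.0. The table, model names, and metadata fields are hypothetical.

```python
import json
import sqlite3

SCHEMA = """
CREATE TABLE generations (
    id    INTEGER PRIMARY KEY,
    model TEXT NOT NULL,                         -- fixed, relational attribute
    meta  TEXT NOT NULL CHECK (json_valid(meta)) -- flexible, semi-structured attributes
)
"""


def open_db():
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    return conn


def record(conn, model, meta):
    """Store one generation run; meta may carry different keys per row."""
    with conn:
        conn.execute(
            "INSERT INTO generations (model, meta) VALUES (?, ?)",
            (model, json.dumps(meta)),
        )


def runs_for(conn, use_case):
    """Filter on one JSON field and project another, all in SQL."""
    return conn.execute(
        "SELECT id, model, json_extract(meta, '$.temperature') "
        "FROM generations WHERE json_extract(meta, '$.use_case') = ? ORDER BY id",
        (use_case,),
    ).fetchall()


if __name__ == "__main__":
    conn = open_db()
    record(conn, "model-a", {"use_case": "support", "temperature": 0.2})
    record(conn, "model-b", {"use_case": "marketing", "temperature": 0.9, "tone": "playful"})
    record(conn, "model-a", {"use_case": "support", "temperature": 0.4})
    print(runs_for(conn, "support"))  # [(1, 'model-a', 0.2), (3, 'model-a', 0.4)]
```

The second row carries an extra `tone` key without any schema change, while the `CHECK (json_valid(meta))` constraint still rejects text that is not valid JSON.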

However, depending on the nature of one’s data (its volume and complexity), a combination of SQL and NoSQL databases may also be a suitable solution. There isn’t a “one-size-fits-all” approach, and to ensure you’re aligning with your project’s needs and constraints, it’s important to evaluate the end goal the project is meant to achieve.

Q7. Are databases with vector support the bridge between LLMs and enterprise gen AI apps? Why?

Adam Prout: Databases that include vector support can most definitely play a crucial role when it comes to bridging the gap between LLMs and enterprise Generative AI applications for many reasons:

- Easier storage and retrieval of embeddings: LLMs, like the ones behind ChatGPT, generate word embeddings, or vector representations of text data. A database with vector support is designed not only to store these embeddings efficiently but also to retrieve them, making them easier to manage and query.

- Quick and accurate similarity searches: Vector searches reign supreme when it comes to performing similarity searches, and in the context of Generative AI, this is very valuable, as it enables applications to find similar documents or content quickly.

- Scalability: Scalability is crucial for enterprise applications that need to process vast amounts of data, especially as LLMs continue to produce substantial volumes of vector data. Vector search capabilities are purpose-built to manage large-scale vector data efficiently, making them a vital component in handling such demands.

- Real-time applications: Various enterprise Generative AI applications, like chatbots, sentiment analysis, and content generation, require real-time processing. Vector support enables real-time retrieval and analysis of vector data, increasing the responsiveness these applications need.

Q8. Will vector databases be the essential infrastructure in bringing about the societal and economic changes promised by AI?

Adam Prout: First, I want to clarify my thoughts on the term “vector database.” At SingleStore, we see vector search as a capability of a database, not a new category of database. That being said, databases that support vector indexing are well suited for storing and querying high-dimensional vectors, meaning they are well-equipped for tasks related to machine learning, recommendation systems, natural language processing, and more.
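The view that vector search is a capability of a database rather than a separate category can be illustrated by adding similarity search to an ordinary relational engine. This minimal sketch registers a cosine-similarity function with Python's `sqlite3` module via `Connection.create_function` and ranks rows in plain SQL, combined with a normal relational filter. It is a brute-force scan with no vector index, the three-dimensional embeddings are hand-made stand-ins for the output of an embedding model, and the table and function names are hypothetical.

```python
import json
import math
import sqlite3


def cosine_json(a_json, b_json):
    """Cosine similarity between two vectors stored as JSON arrays."""
    a, b = json.loads(a_json), json.loads(b_json)
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def open_db():
    conn = sqlite3.connect(":memory:")
    # Register the similarity function so SQL queries can call cosine(...).
    conn.create_function("cosine", 2, cosine_json, deterministic=True)
    conn.execute(
        "CREATE TABLE docs (id INTEGER PRIMARY KEY, title TEXT, category TEXT, embedding TEXT)"
    )
    return conn


def add_doc(conn, title, category, embedding):
    with conn:
        conn.execute(
            "INSERT INTO docs (title, category, embedding) VALUES (?, ?, ?)",
            (title, category, json.dumps(embedding)),
        )


def top_k(conn, query_vec, category, k=2):
    """Relational filter and vector ranking in a single SQL statement."""
    return conn.execute(
        "SELECT title, cosine(embedding, ?) AS score FROM docs "
        "WHERE category = ? ORDER BY score DESC LIMIT ?",
        (json.dumps(query_vec), category, k),
    ).fetchall()


if __name__ == "__main__":
    conn = open_db()
    add_doc(conn, "refund policy", "billing", [0.9, 0.1, 0.0])
    add_doc(conn, "shipping times", "logistics", [0.1, 0.9, 0.0])
    add_doc(conn, "return window", "billing", [0.8, 0.2, 0.1])
    add_doc(conn, "invoice copies", "billing", [0.2, 0.1, 0.9])
    print([title for title, _ in top_k(conn, [1.0, 0.0, 0.0], "billing")])
    # ['refund policy', 'return window']
```

A production system would add a vector index so the ranking does not touch every row, but the point stands: similarity search sits alongside filtering, joins, and transactions in the same engine.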